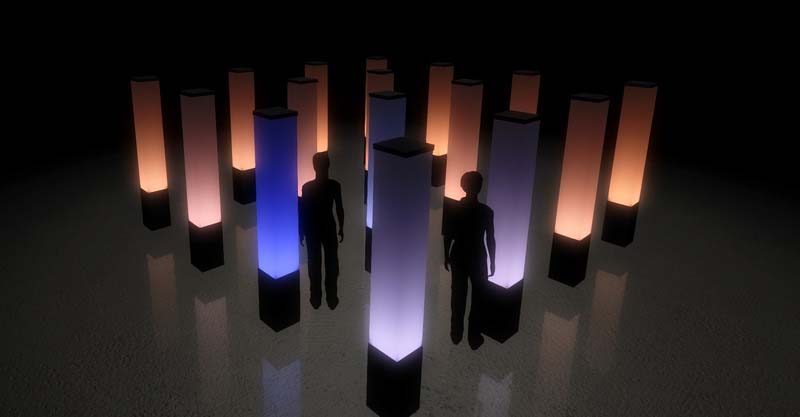

Final Wisdom I is an interactive installation that engages viewers in a sensory exploration of temporal and spatialized poetry. Participants manipulate imagery, sound and language through gesture, touch and proximity. The work is driven by a framework of cameras and sensors that react to heat, position and capacitance, presenting a shifting environment of reactive media and haptics. Final Wisdom I is the work of artists Hans Breder and John Fillwalk, with poetry by critic Donald Kuspit and music by composers Carlos Cuellar Brown and Jesse Allison. The project was produced by the Institute for Digital Intermedia Arts at Ball State University in collaboration with the Institute for Digital Fabrication. Final Wisdom I was exhibited in the art gallery of SIGGRAPH 2010 in Los Angeles, CA. Special thanks to IDF/CAP students Matthew Wolak, Christopher Baile and Claire Matucheski, and to Assistant Professor of Architecture Joshua Vermillion. http://www.i-m-a-d-e.org/

As an intermedia artist, John Fillwalk investigates emerging technologies that inform his work across a variety of media, including video installation, virtual art and interactive forms. His perspective is rooted in the traditions of painting, cinematography and sculpture, with a particular interest in spatialized works that immerse and engage the viewer within an experience. Fillwalk positions his work as both a threshold and a mediator between tangible and implied space, creating a conduit for the transformative extension of experience and pursuing forms, sounds and images that afford interaction at its most fundamental level. In working with technology, he values the synergy of collaboration and regularly works with other artists and scientists on projects that could not otherwise be realized. Electronic media extend the range of traditional processes by establishing a palette of time, motion, interactivity and extensions of presence. Electronic and intermedia works are ephemeral and therefore inherently transformative: the significance of the tangible becomes fleeting, and emphasis shifts away from the object and toward the experience.

John Fillwalk is Director of the Institute for Digital Intermedia Arts (IDIA Lab) at Ball State University, an interdisciplinary and collaborative hybrid studio. An intermedia artist and Associate Professor of Electronic Art, Fillwalk investigates media in video installation, hybrid reality and interactive forms. He received his MFA from the University of Iowa in Intermedia and Video Art, and has since received numerous grants, awards, commissions and fellowships.

Donald Kuspit is an art critic, author and professor of art history and philosophy at the State University of New York at Stony Brook, and lends his editorial expertise to several journals, including Art Criticism, Artforum, New Art Examiner, Sculpture and Centennial Review.

Hans Breder was born in Herford, Germany, and trained as a painter in Hamburg. Attracted to the University of Iowa's School of Art and Art History in 1966, Breder established its Intermedia Program.

Carlos Cuellar Brown, a.k.a. ccbrown, is a composer, instrumentalist and music producer. Formally trained as a classical pianist, Cuellar specialized in experimental music and intermedia with the late American maverick composer Kenneth Gaburo.

Jesse Allison is Virtual Worlds Research Specialist at IDIA and Assistant Professor of Music Technology at Ball State University. He is also President of Hardware Engineering at Electrotap, LLC, an innovative human-computer interface firm.

Article published in Leonardo, the journal of the International Society for the Arts, Sciences and Technology, by The MIT Press: http://muse.jhu.edu/journals/leonardo/summary/v043/43.4.fillwalk.html
