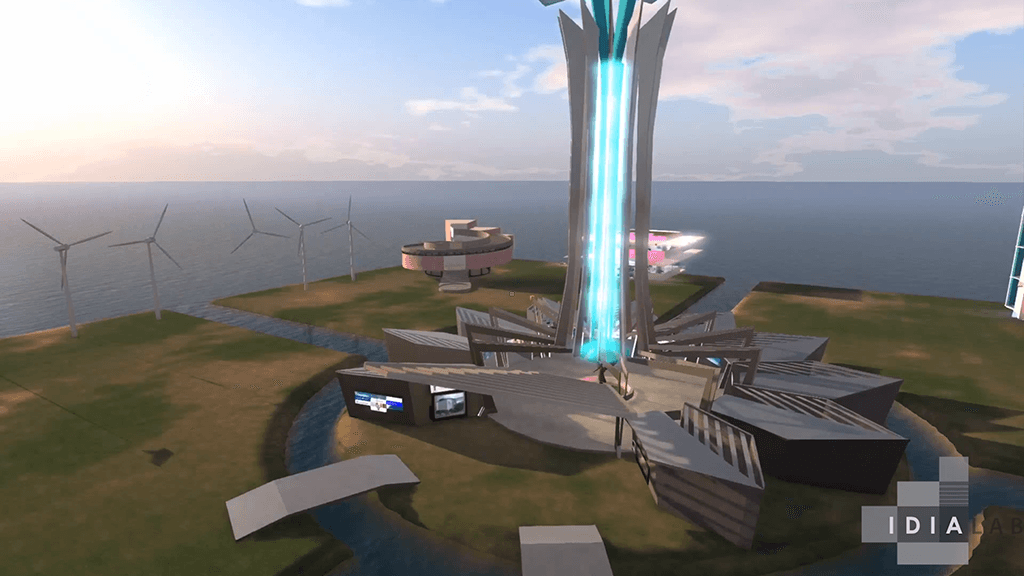

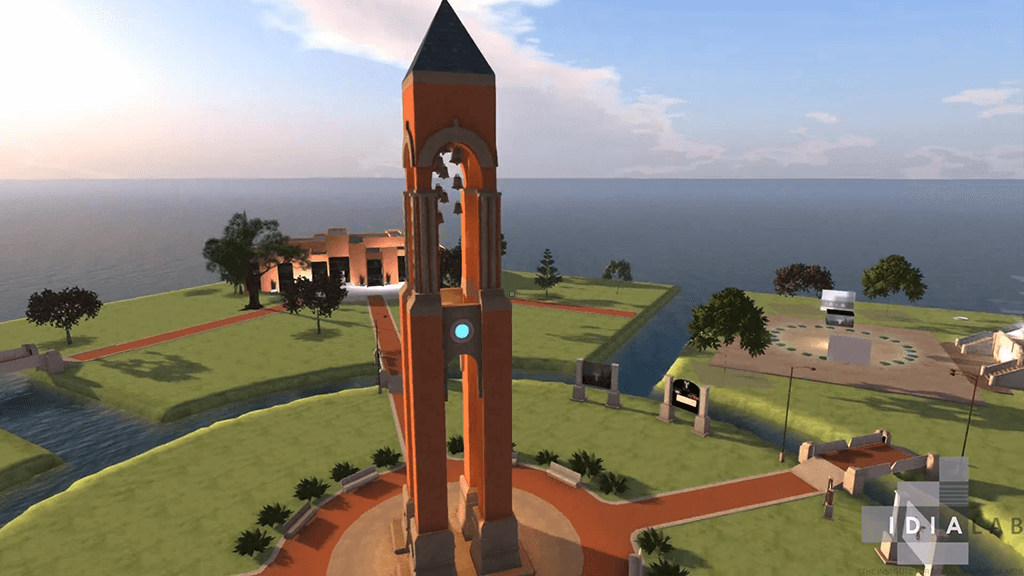

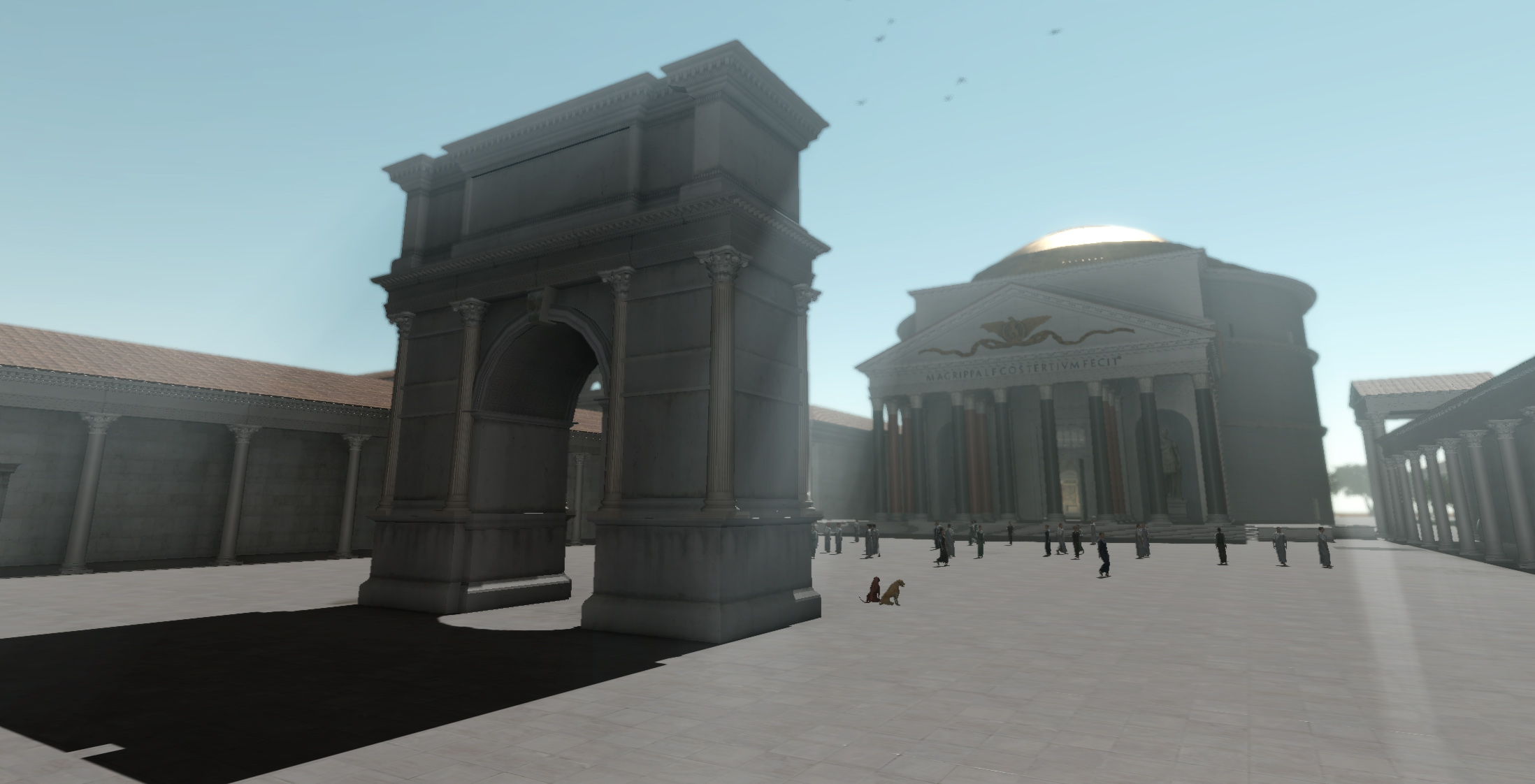

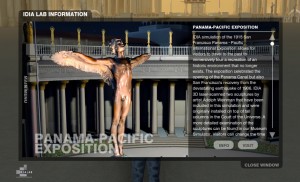

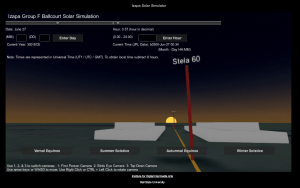

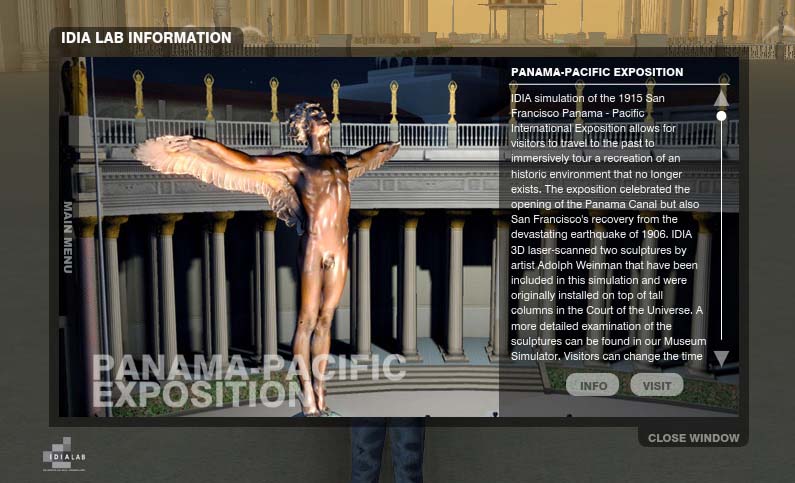

The Pantheon is the best-preserved architectural monument of ancient Rome. This simulation by BSU’s IDIA Lab represents the Pantheon and its surroundings as they may have appeared in 320 AD. Visitors to this Blue Mars / CryEngine simulation can tour the vicinity and learn about the building’s history, function and solar alignments through an interactive heads-up display (HUD) created for this project. The project opened in beta in late 2013 and will premiere publicly in February 2014. It includes new solar simulation software calibrated to the building’s location and year, the interactive HUD, a greeter bot system, and a new AI non-player character (NPC) system developed in partnership by IDIA Lab and Avatar Reality.

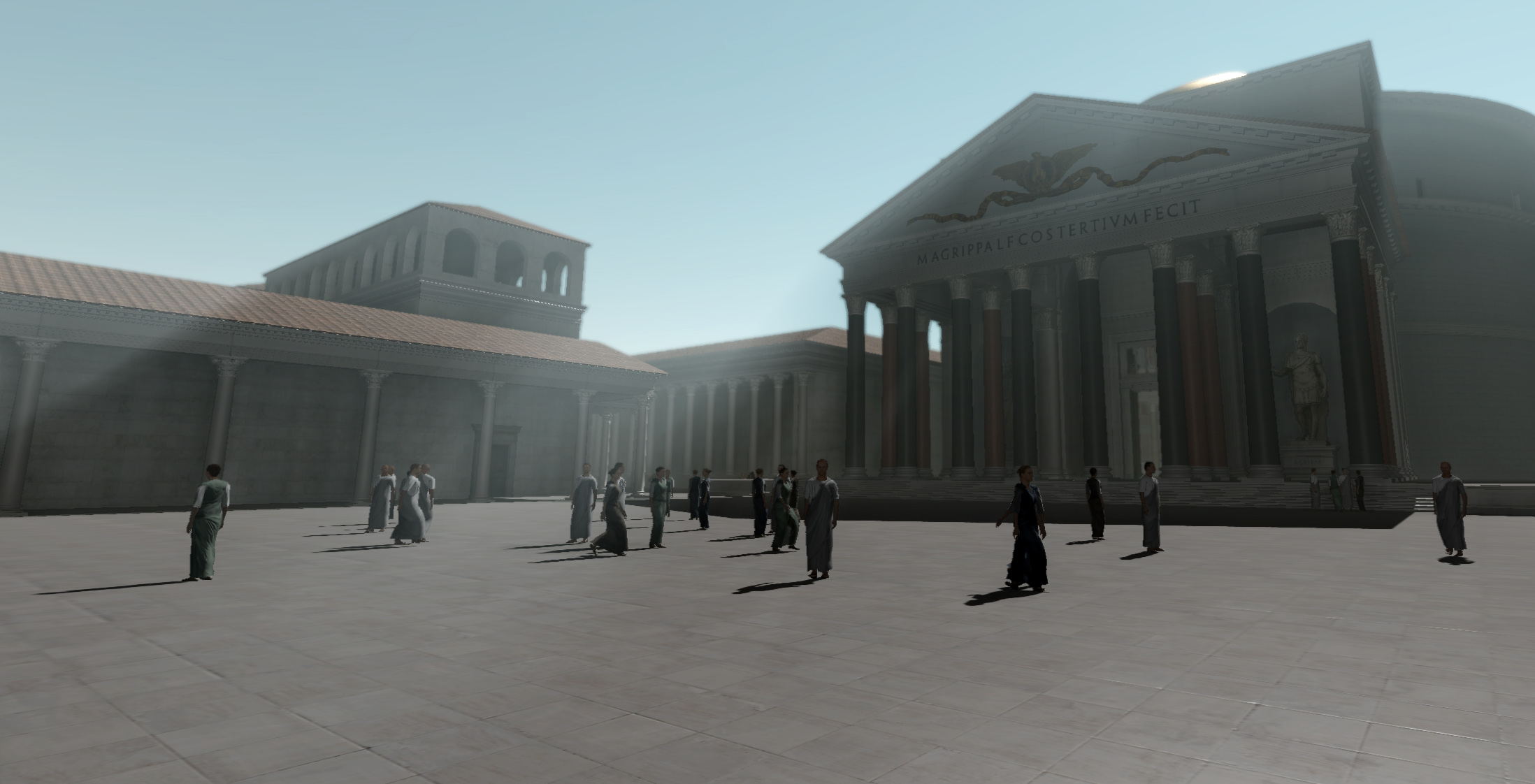

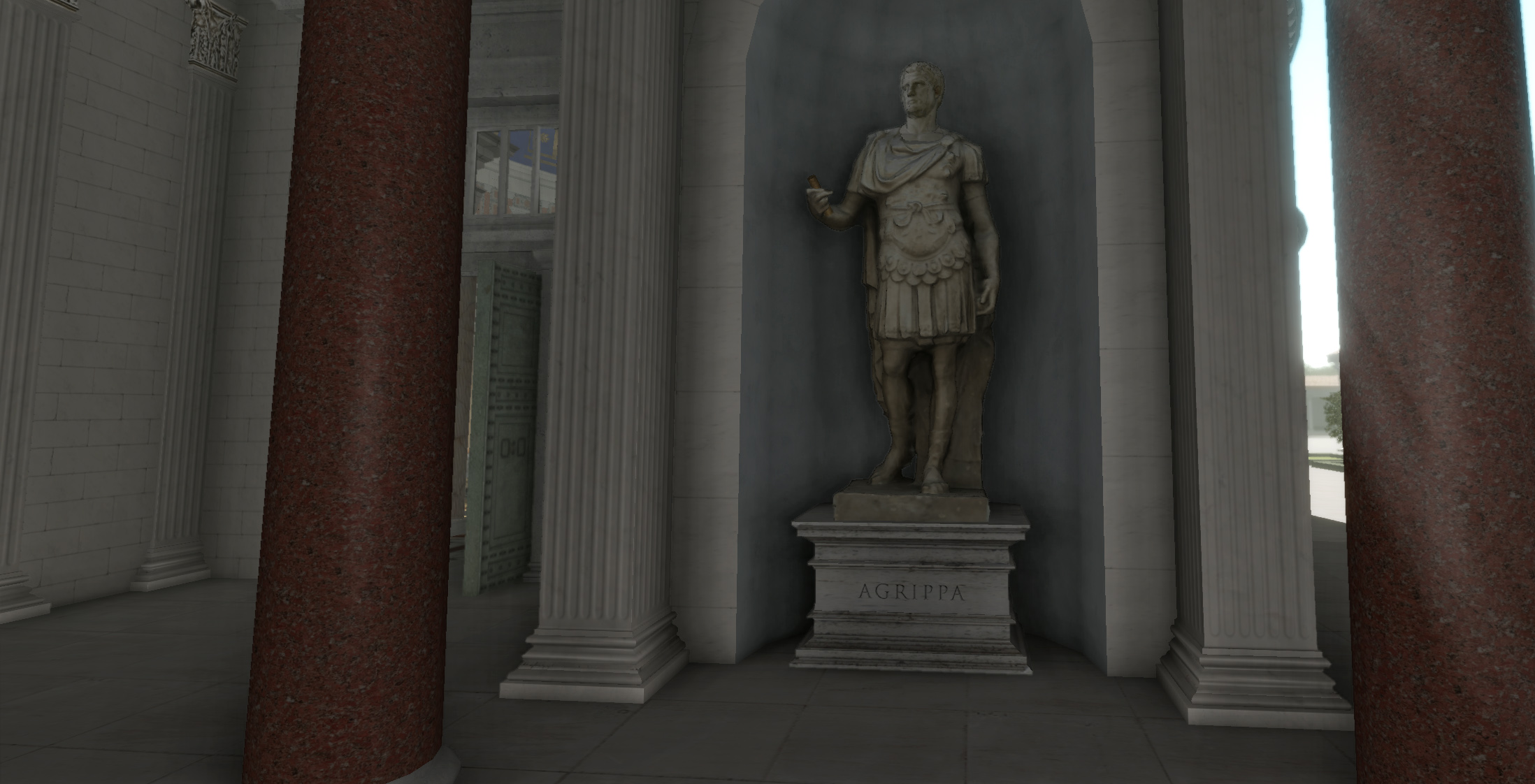

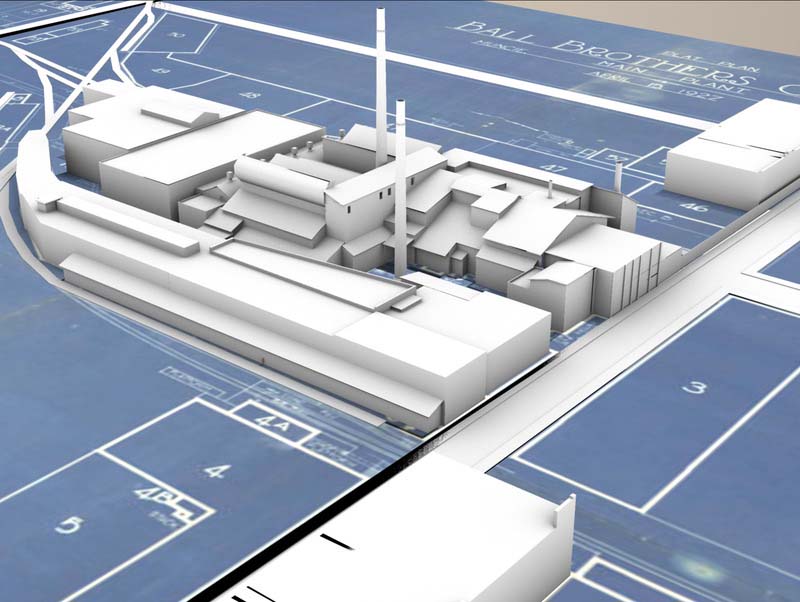

Originally built by Agrippa around 27 BC under the rule of Augustus, the Pantheon was destroyed by fire, then rebuilt and finally completed in its present form during Emperor Hadrian’s reign, around 128 AD. Agrippa completed the original building, and his name still appears above the portico. The Pantheon would have contained numerous marble statues representing the major Roman deities. The statues displayed in this simulation represent one possible configuration and were captured via photogrammetry. The buildings surrounding the Pantheon were modeled and interpreted by IDIA Lab based on the large-scale model of ancient Rome built by Italo Gismondi between 1935 and 1971, which resides in the Museo della Civiltà Romana, just outside Rome, Italy.

Video walkthrough of the Virtual Pantheon in Blue Mars:

To visit the Virtual Pantheon firsthand:

First, create an account: https://member.bluemars.com/game/WebRegistration.html/

Second, download the client: http://bluemars.com/BetaClientDownload/ClientDownload.html/

Finally, visit the Virtual Pantheon: http://blink.bluemars.com/City/IDIA_IDIALabPantheon/

Advisors

The Solar Alignment Simulation of the Roman Pantheon in Blue Mars was developed in consultation with archaeoastronomer Dr. Robert Hannah, Dean of Arts and Social Sciences at the University of Waikato, New Zealand, and one of the world’s foremost scholars of the Pantheon’s solar alignments; and with archaeologist Dr. Bernard Frischer of Indiana University.

Background

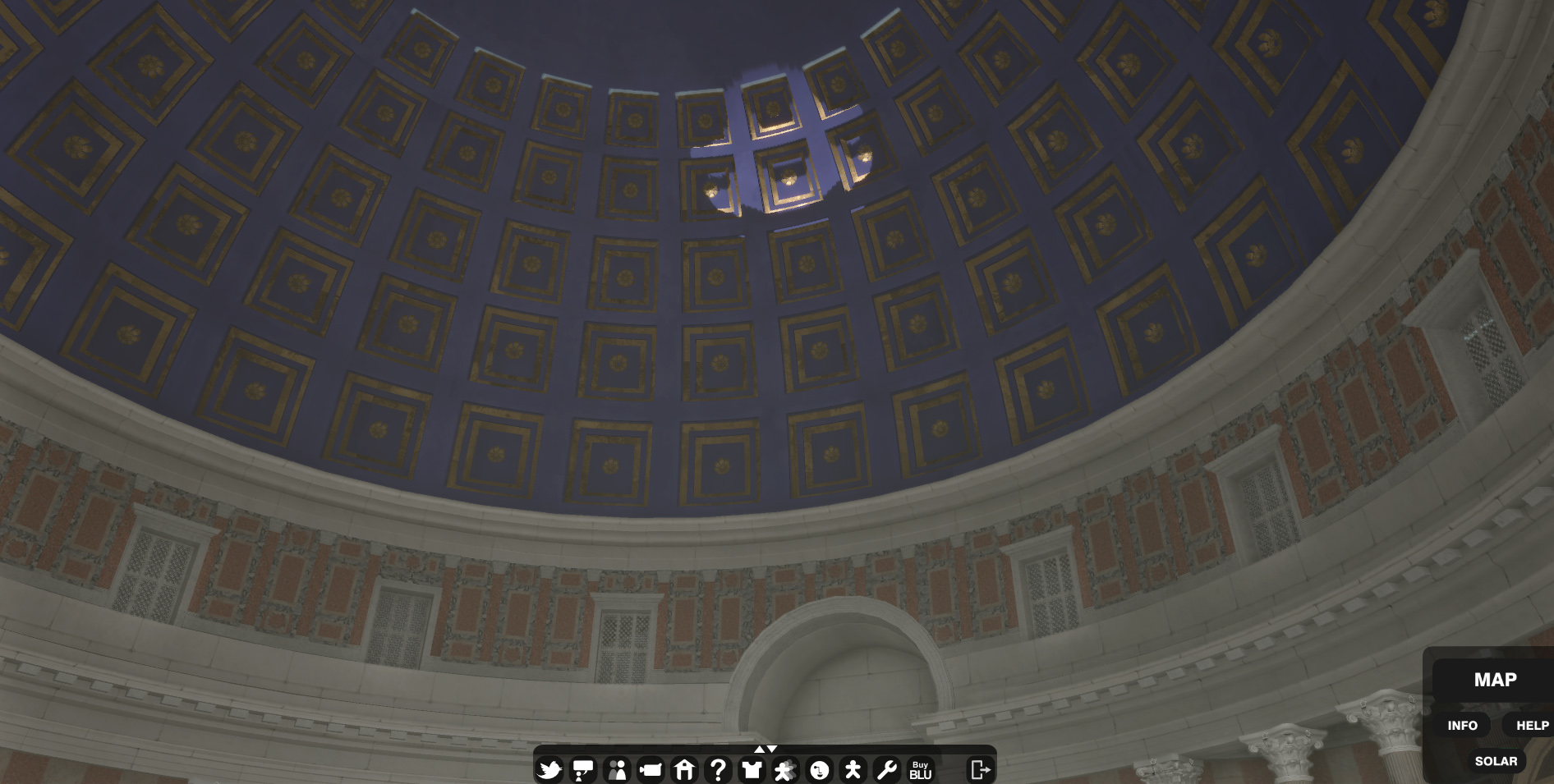

The Pantheon that we can visit today consists of a rectangular porch with three rows of granite columns in front of a circular building roofed by a huge hemispherical dome (142 feet in diameter), set on a cylinder of the same diameter whose height equals the radius. Consequently, if the upper hemisphere were completed by a hypothetical lower one, the full sphere would touch the central point of the floor, directly beneath the building’s only source of natural light. This light source is the so-called oculus, a circular opening over 27 feet wide at the top of the cupola. It is the only source of direct light, because the entrance doorway faces north and so admits no direct sunlight at any time of year. Of the building’s original embellishments, the coffered ceiling, part of the marble interiors, the bronze grille over the entrance and the great bronze doors have survived.
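The proportions described above can be checked with a short worked relation. This sketch takes the rounded 142-foot figure as the interior diameter $d$ and measures heights $z$ from the floor; the centre of the implied sphere sits at the top of the cylinder, at height $h_{\text{cyl}} = r$:

$$
\begin{aligned}
d &= 142\ \text{ft}, \qquad r = \tfrac{d}{2} = 71\ \text{ft}, \qquad h_{\text{cyl}} = r = 71\ \text{ft},\\
z_{\text{bottom}} &= h_{\text{cyl}} - r = 0\ \text{ft} \quad (\text{the floor}),\\
z_{\text{top}} &= h_{\text{cyl}} + r = 2r = 142\ \text{ft} = d.
\end{aligned}
$$

The completed sphere therefore just touches the floor at its lowest point, and the interior height to the top of the dome equals its diameter.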

Interior Wall

The interior wall, although circular in plan, is organized into sixteen regularly spaced sectors. The northernmost one contains the entrance door; proceeding clockwise from it, pedimented niches and columned recesses alternate with each other. Corresponding to these ground-level sectors, the upper attic course, just below the offset between the cylinder and the dome, contains fourteen blind windows. Both the niches and the windows were probably meant for statues, which, however, have not survived.

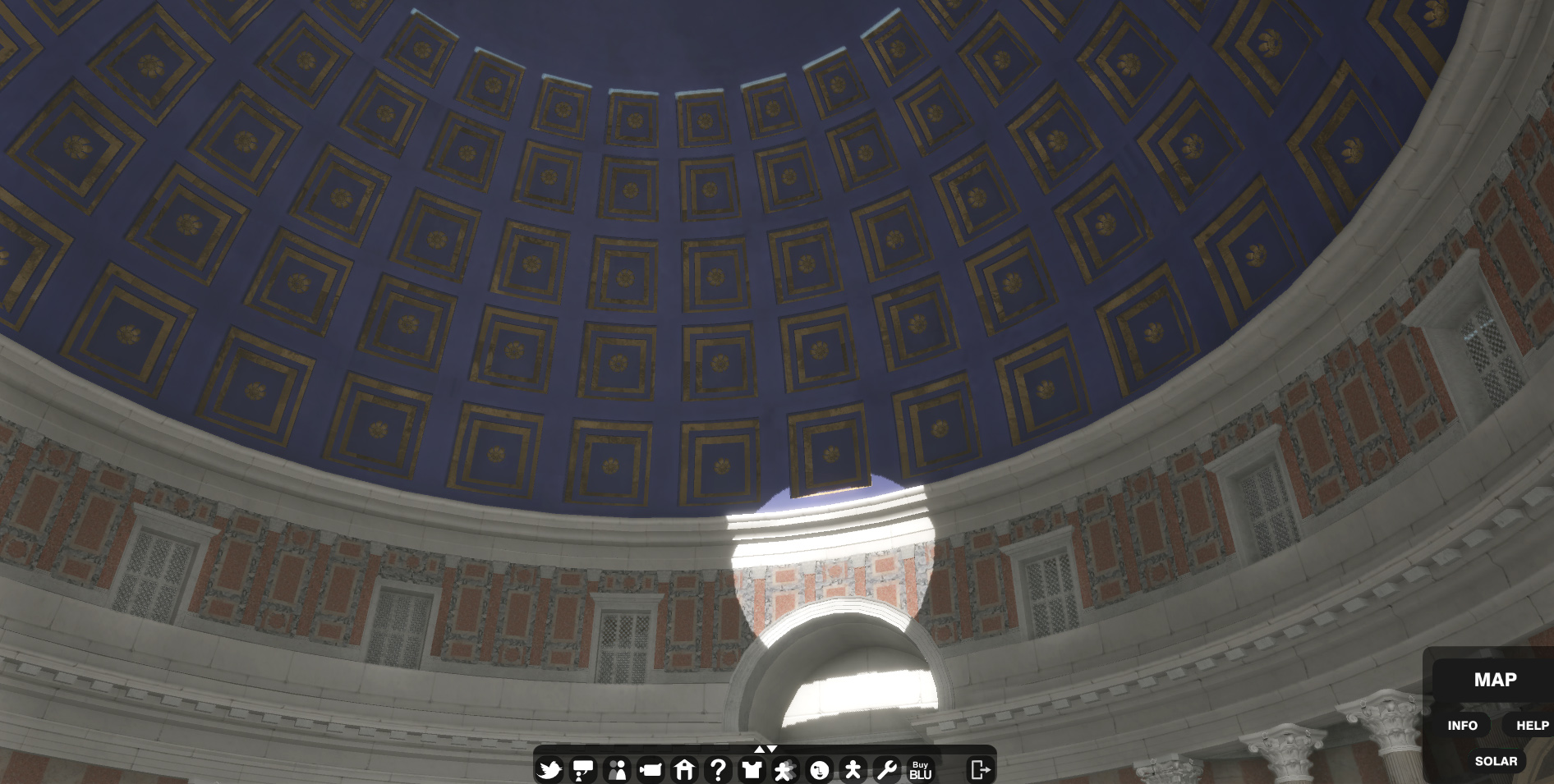

Oculus

Direct sunlight penetrates the interior only through a large, 27-foot-wide oculus in the center of the domed roof. Otherwise, only indirect sunlight can enter the building, through the large north-facing doorway when it is open. The fall of direct sunlight through the oculus into the essentially spherical building invites comparison with a roofed sundial.

Celestial Alignments

A columned porch leads through a vestibule into the Pantheon’s huge, shadowy interior, over 142 feet in height and as much in diameter. The building’s form is essentially that of a sphere with its lower half transformed into a cylinder of the same radius.

The shift from one half of the year (semester) to the other is marked by the passage of the sun at the equinoxes in March and September. At this time, part of the noon sunlight falls just below the dome, passing through the grille over the entrance doorway and onto the floor of the porch outside. More significantly, however, the centre of this equinoctial midday circle of sunlight lies on the interior architectural moulding that marks the base of the dome.

On April 21st, the midday sun shines directly onto visitors standing in the open doorway of the Pantheon, dramatically highlighting them. This day is of particular significance, not only because it was when the sun entered Taurus but, more importantly, because it is the traditional Birthday of Rome, a festival preserved from antiquity to the present day. It may be that when the building was officially commissioned in AD 128, the person expected to stand in the open doorway was the emperor Hadrian himself.

The illustration shows a section through the Pantheon and the fall of noon sunlight at four points in the year: at the winter solstice, when the sun stands at an altitude of 24 degrees; at both equinoxes, at 48 degrees; on April 21st, at 60 degrees; and at the summer solstice, at 72 degrees.
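The four altitudes in the illustration follow from the standard relation for the sun at local noon: altitude = 90° − |latitude − solar declination|. The Python sketch below reproduces them approximately. It is not IDIA Lab’s solar simulation software. It assumes a latitude of about 41.9° N for the Pantheon, an ancient obliquity of the ecliptic of about 23.7° (the modern value is about 23.44°), Cooper’s simple declination approximation, and modern day-of-year numbering as a stand-in for the Roman calendar. The function and constant names are hypothetical.

```python
import math

# Assumption: approximate latitude of the Pantheon, degrees north.
PANTHEON_LATITUDE_DEG = 41.9

# Assumption: obliquity of the ecliptic around the 2nd century AD (~23.7 deg);
# the modern value is ~23.44 deg.
ANCIENT_OBLIQUITY_DEG = 23.7


def solar_declination_deg(day_of_year: int,
                          obliquity_deg: float = ANCIENT_OBLIQUITY_DEG) -> float:
    """Approximate solar declination with Cooper's formula, scaled by the
    chosen obliquity. Good to roughly a degree, which suffices here."""
    angle = math.radians(360.0 / 365.0 * (284 + day_of_year))
    return obliquity_deg * math.sin(angle)


def noon_altitude_deg(day_of_year: int,
                      latitude_deg: float = PANTHEON_LATITUDE_DEG) -> float:
    """Sun's altitude at local solar noon: 90 deg minus the angular
    distance between the observer's latitude and the declination."""
    decl = solar_declination_deg(day_of_year)
    return 90.0 - abs(latitude_deg - decl)


# Day-of-year numbers in a non-leap year (modern numbering, an approximation).
DATES = {
    "winter solstice (Dec 21)": 355,
    "equinox (Mar 21)": 80,
    "Birthday of Rome (Apr 21)": 111,
    "summer solstice (Jun 21)": 172,
}

if __name__ == "__main__":
    for label, day in DATES.items():
        print(f"{label}: {noon_altitude_deg(day):.1f} degrees")
```

Running the script prints values within about half a degree of the illustration’s 24, 48, 60 and 72 degrees. The small differences come from the simplified declination formula and the rounded latitude, not from the geometry of the building.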

Meaning of Pantheon

The Pantheon is a building in Rome, Italy, commissioned by Marcus Agrippa during the reign of Augustus as a temple to all the gods of ancient Rome and rebuilt by the emperor Hadrian around 126 AD.

“Pantheon” is an ancient Greek compound word meaning “all gods.” Cassius Dio, a Roman senator who wrote in Greek, speculated that the name came either from the statues of the many gods placed around the building or from the resemblance of its dome to the heavens.

“Agrippa finished the construction of the building called the Pantheon. It has this name, perhaps because it received among the images which decorated it the statues of many gods, including Mars and Venus; but my own opinion of the name is that, because of its vaulted roof, it resembles the heavens.”

– Cassius Dio, History of Rome 53.27.2

Augustus

Augustus was the founder of the Roman Empire and its first Emperor, ruling from 27 BC until his death in 14 AD.

The reign of Augustus initiated an era of relative peace known as the Pax Romana (the Roman Peace). Despite continuous wars of imperial expansion on the Empire’s frontiers and one year-long civil war over the imperial succession, the Roman world was largely free from large-scale conflict for more than two centuries. Augustus dramatically enlarged the Empire, annexing Egypt, Dalmatia, Pannonia, Noricum, and Raetia, expanding possessions in Africa, pushing into Germania, and completing the conquest of Hispania.

Beyond the frontiers, he secured the Empire with a buffer region of client states, and made peace with the Parthian Empire through diplomacy. He reformed the Roman system of taxation, developed networks of roads with an official courier system, established a standing army, established the Praetorian Guard, created official police and fire-fighting services for Rome, and rebuilt much of the city during his reign.

Augustus died in 14 AD at the age of 75. He may have died from natural causes, although there were unconfirmed rumors that his wife Livia poisoned him. He was succeeded as Emperor by his adopted son (also stepson and former son-in-law), Tiberius.

Agrippa

Marcus Vipsanius Agrippa (c. 23 October or November 64/63 BC – 12 BC) was a Roman statesman and general. He was a close friend, son-in-law, lieutenant and defense minister to Octavian, the future Emperor Caesar Augustus. He was also the father-in-law of the Emperor Tiberius, the maternal grandfather of the Emperor Caligula, and the maternal great-grandfather of the Emperor Nero. He was responsible for most of Octavian’s military victories, most notably the naval Battle of Actium against the forces of Mark Antony and Cleopatra VII of Egypt.

In commemoration of the Battle of Actium, Agrippa built and dedicated the building that served as the Roman Pantheon before its destruction in 80 AD. Emperor Hadrian used Agrippa’s design to build his own Pantheon, which survives in Rome. The inscription on the later building, built around 125, preserves the text of the inscription from Agrippa’s building, dating to his third consulship. Agrippa spent the years following his third consulship in Gaul, reforming the provincial administration and taxation system and building an effective road system and aqueducts.

Arch of Piety

The Arch of Piety is believed to have stood in the piazza immediately north of the Pantheon. Statements in mediaeval documents imply, but do not explicitly say, that a bas-relief on the arch represented the scene of Trajan and the widow, narrating the story of the emperor and the widow while suppressing the emperor’s name. The probable source, the mediaeval guidebook of Rome known as the Mirabilia Romae, does not even state that the arch was built to commemorate the event; it mentions the arch and then says that the incident happened there.

Giacomo Boni discusses the legend of Trajan, giving many interesting pictures that show how the story was used in medieval painting and sculpture. He found a bas-relief on the Arch of Constantine that he thinks may have given rise to the story. It shows a seated woman, her right hand raised in supplication to a Roman figure who is surrounded by other men, some in military dress and two accompanied by horses. Boni suggests that people in the Middle Ages may have taken this figure to be Trajan because of his reputation for justice.

Saepta Julia

The Saepta Julia was a building in ancient Rome where citizens gathered to cast votes. It was conceived by Julius Caesar and dedicated by Marcus Vipsanius Agrippa in 26 BC. The building was originally built as a place for the comitia tributa to gather and vote, replacing an older structure called the Ovile that served the same function. It did not always retain its original function: Augustus used it for gladiatorial fights, and it later served as a marketplace.

The conception of the Saepta Julia began in the time of Julius Caesar (died 44 BC). Located in the Campus Martius, the Saepta Julia was built of marble and enclosed a huge rectangular space next to the Pantheon. According to a letter from Cicero to his friend Atticus about the project, Caesar wanted the building to be made of marble and to have a mile-long portico. The quadriporticus (a four-sided portico, like the one enclosing the Saepta Julia) was an architectural feature made popular by Caesar.

After Caesar’s assassination in 44 BC, amid the surge of public support for the former ruler, others continued work on the projects Caesar had set in motion. Marcus Aemilius Lepidus, a former supporter of Caesar who subsequently aligned with his successor Octavian, took on the continuation of the Saepta Julia project. The building was finally completed and dedicated by Marcus Vipsanius Agrippa in 26 BC. Agrippa also decorated it with marble tablets and Greek paintings.

The Saepta Julia can be seen on the Forma Urbis Romae, a map of the city of Rome as it existed in the early 3rd century AD. Part of the original wall of the Saepta Julia can still be seen right next to the Pantheon.

– edited from Robert Hannah, “The Pantheon as Timekeeper”, 2009.

________

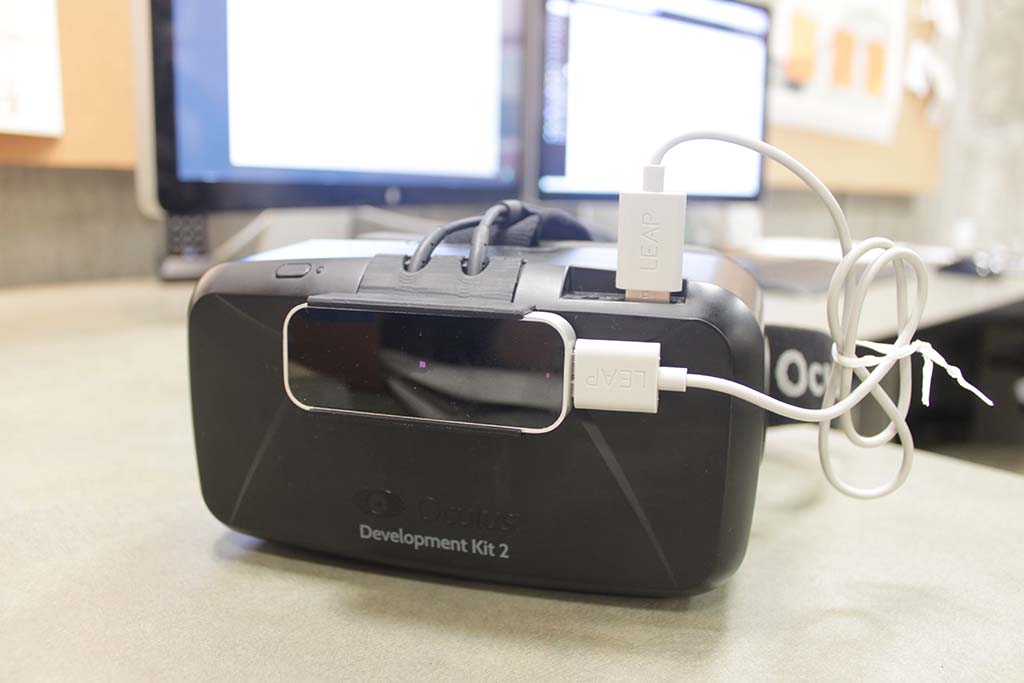

Simulation by the Institute for Digital Intermedia Arts at Ball State University

Project Director: John Fillwalk, Senior Director, IDIA Lab, BSU.

IDIA Staff: Neil Zehr, Trevor Danehy, David Rodriguez, Ina Marie Henning, Adam Kobitz

PROJECT ADVISORS:

Dr. Robert Hannah, University of Waikato, New Zealand

Dr. Bernard Frischer, Virtual World Heritage Laboratory, Indiana University, USA

SPECIAL THANKS:

Shinichi Soeda, Avatar Reality

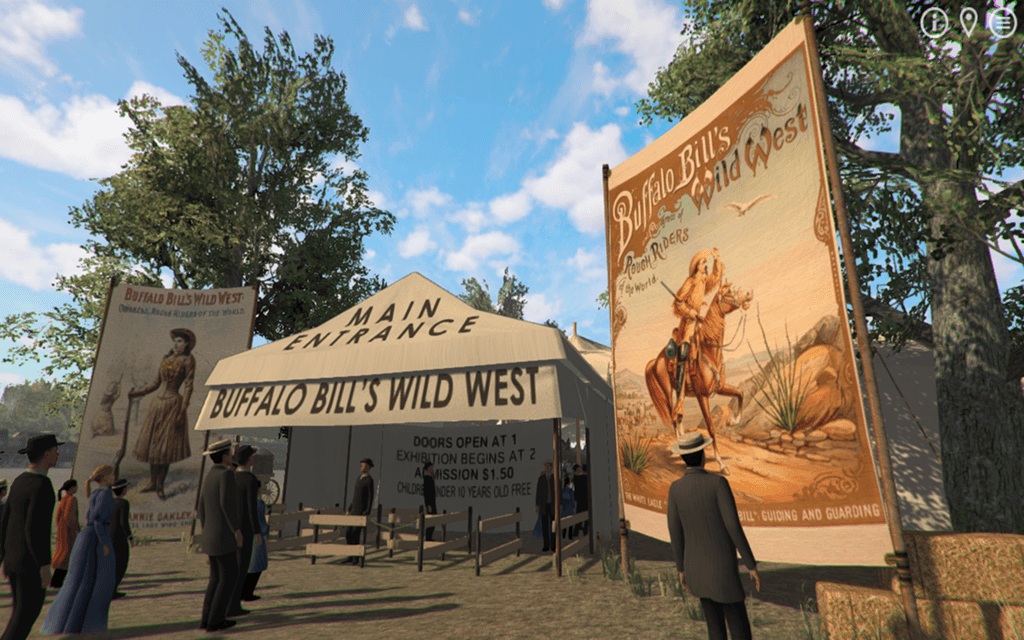

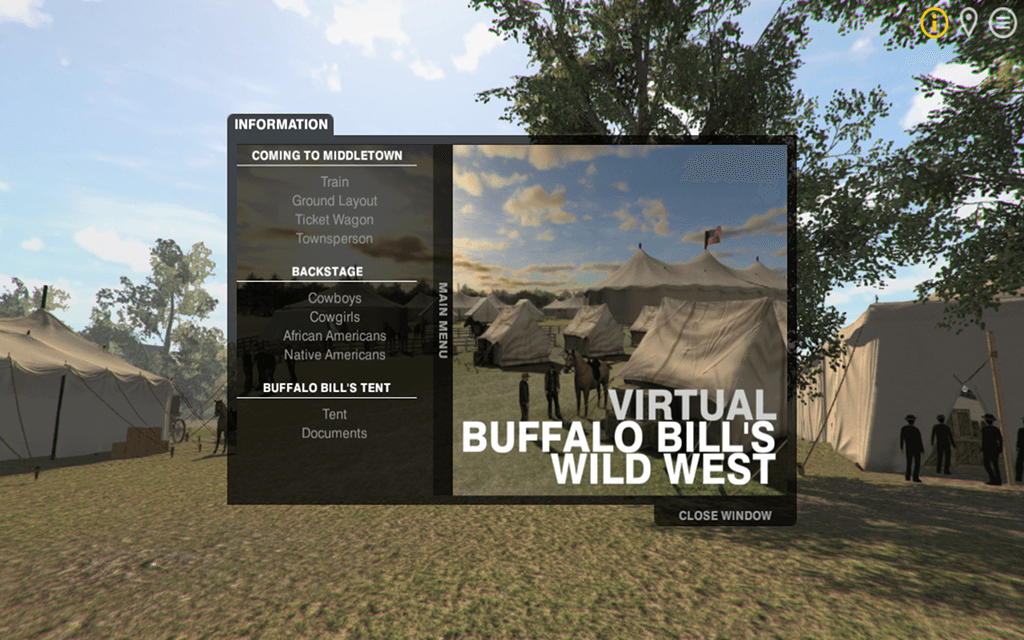

Born in 1846, William F. Cody rode for the Pony Express, served as a military scout and earned his moniker “Buffalo Bill” while hunting the animals for the Kansas Pacific Railroad work crews. Beginning in 1883, he became one of the world’s best showmen with the launch of Buffalo Bill’s Wild West, which was staged for 30 years, touring America and Europe multiple times.