For the season finale of its popular program “The Universe,” the History Channel is shining a spotlight on the work of virtual artists from Ball State University.

The season finale episode of the documentary series, which aired May 23, explores how Roman emperors built ancient structures to align with movements of the sun. To confirm experts’ theories about the religious, political, and cultural significance behind these phenomena, the cable network enlisted the help of Ball State’s Institute for Digital Intermedia Arts (IDIA).

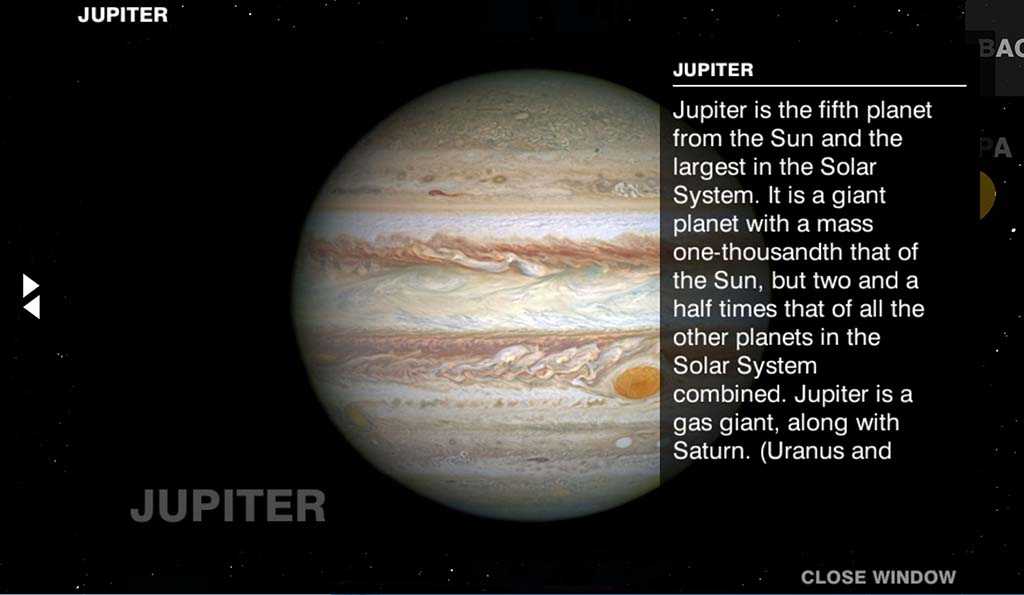

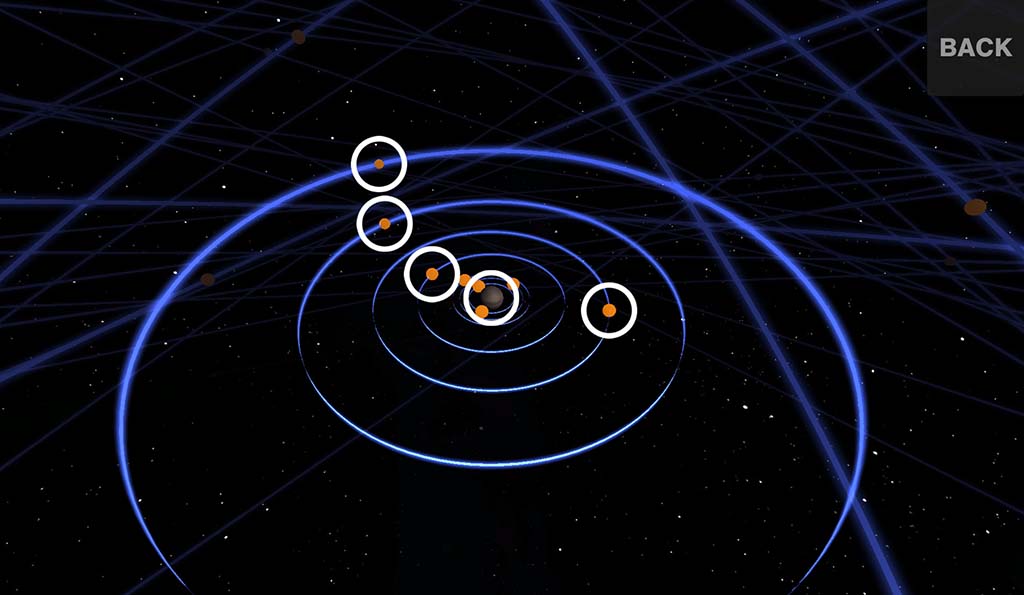

Using 3-D computer animation, artists and designers from the IDIA Lab virtually recreated several monuments featured in the episode and accurately simulated the sun's alignment with them. These structures include the Pantheon, one of the best-preserved buildings of ancient Rome; the Temple of Antinous, a complex that today lies in ruins within Hadrian’s Villa outside Rome; and the Meridian of Augustus, a site containing an Egyptian obelisk brought to Rome for use as a giant sundial and calendar.

‘Getting things right’

James Grant Goldin, a writer and director for the History Channel, says IDIA’s animations were an essential part of the program’s second-season finale. The network first contracted with IDIA for an earlier episode of “The Universe,” which aired in 2014, commissioning an animation of Stonehenge for a segment demonstrating how the prehistoric monument may have been used to track celestial movements.

This time around, the work of Ball State digital artists is featured throughout the Roman engineering episode.

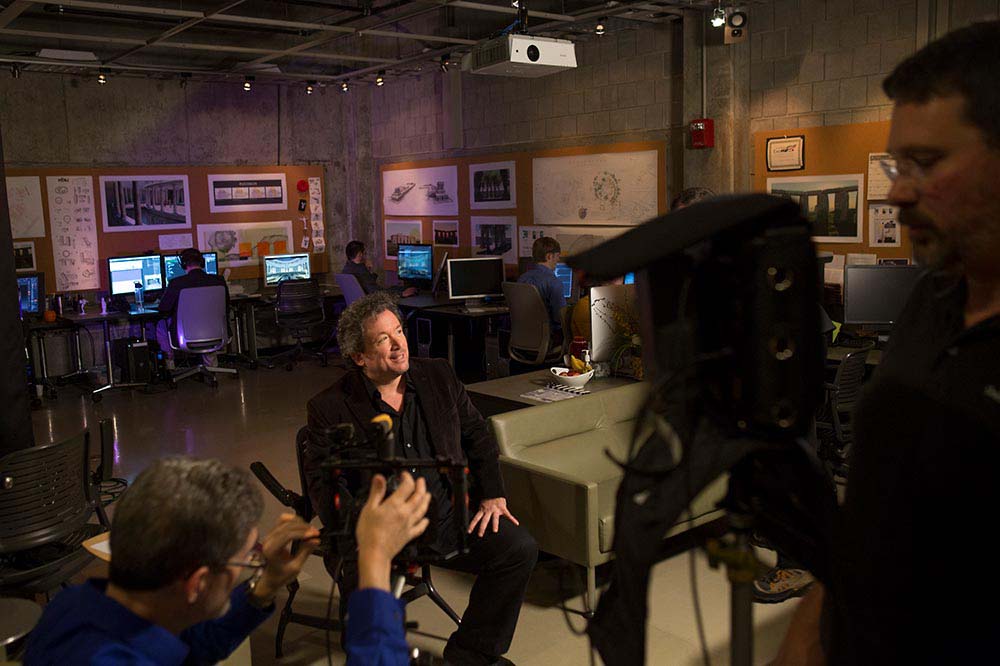

“It’s an honor for us,” says John Fillwalk, director of IDIA and senior director of the university’s Hybrid Design Technologies initiative. “Ideally our relationship with the History Channel will continue long into the future.”

Goldin said the cable network is keen to keep working with IDIA on future projects because Fillwalk and his team create beautifully rendered graphics backed by data and research.

“John was devoted to getting things right,” he said. “Many of the theories we discuss in the show remain controversial, and in nonfiction TV it’s always a good idea to present such things with a qualifying phrase in the narration: an ‘if’ or a ‘maybe.’ But the IDIA Lab combined their own research with that of experts, and I’m very happy with the results.”

Gaming software transforms history

Fillwalk has worked closely over the years with many prominent scholars of the ancient world, including Bernard Frischer, a Roman archaeologist at Indiana University, and Robert Hannah, dean of arts and social sciences at New Zealand’s University of Waikato, who advised on the archaeoastronomy of the project.

Hannah says he’s been astounded to see the way up-to-the-minute gaming software can bring to life the work of today’s historians and virtual archaeologists. “I’ve seen my son play games like ‘Halo,’ so I knew what was possible,” he said, “but I’d never seen it adapted to ancient world buildings.”

Phil Repp, Ball State’s vice president for information technology, says the relationship between the university and the cable network is a key example of how Ball State is distinguishing itself as a leading world provider of emerging media content.

“For the History Channel to want the continued help of John and his staff speaks to the quality of what our lab can produce,” he said.

Goldin’s praise for IDIA bears out Repp’s point. “Bringing the past to life is a very challenging task,” he said. “The renderings Ball State artists have created represent the most accurate possible picture of something that happened almost 2,000 years ago.”