IDIA Lab was invited to exhibit their virtual and hybrid artworks at the Third Art and Science International Exhibition at the China Science and Technology Museum in Beijing, China.

http://www.idialabprojects.org/displacedresonance/

Displaced Resonance v2

John Fillwalk

Michael Pounds

IDIA Lab, Ball State University

Interactive installation, mixed media

2012

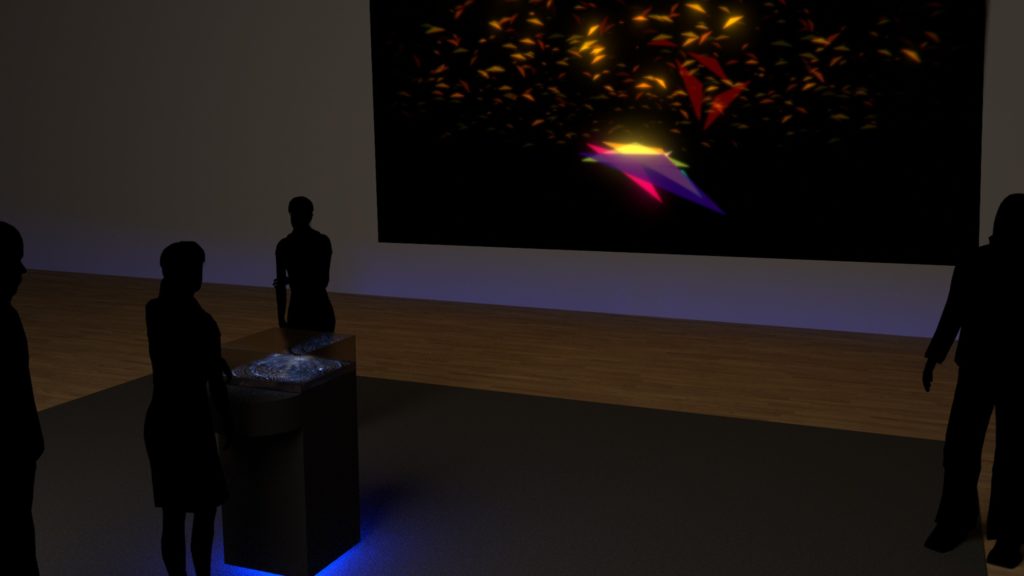

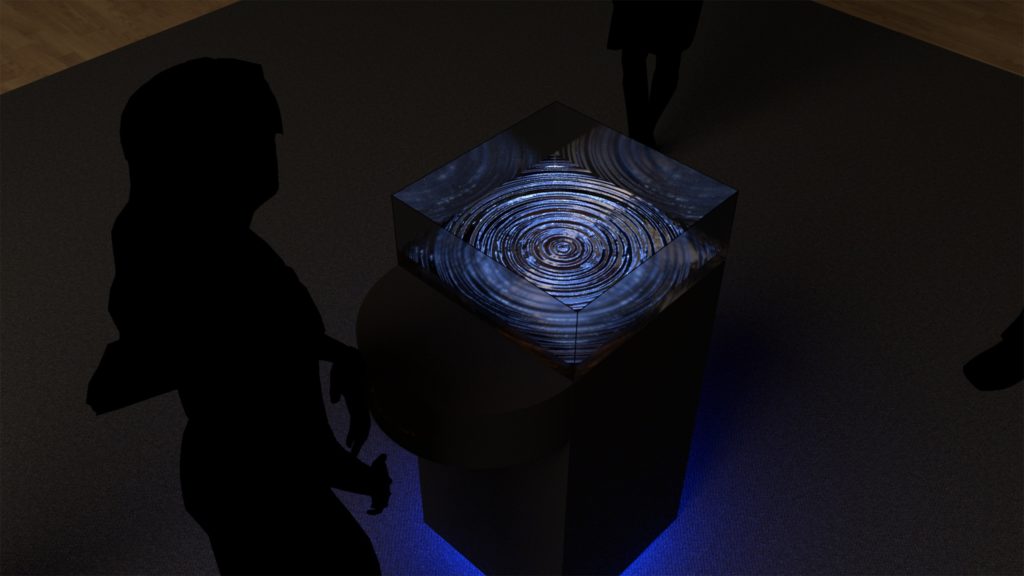

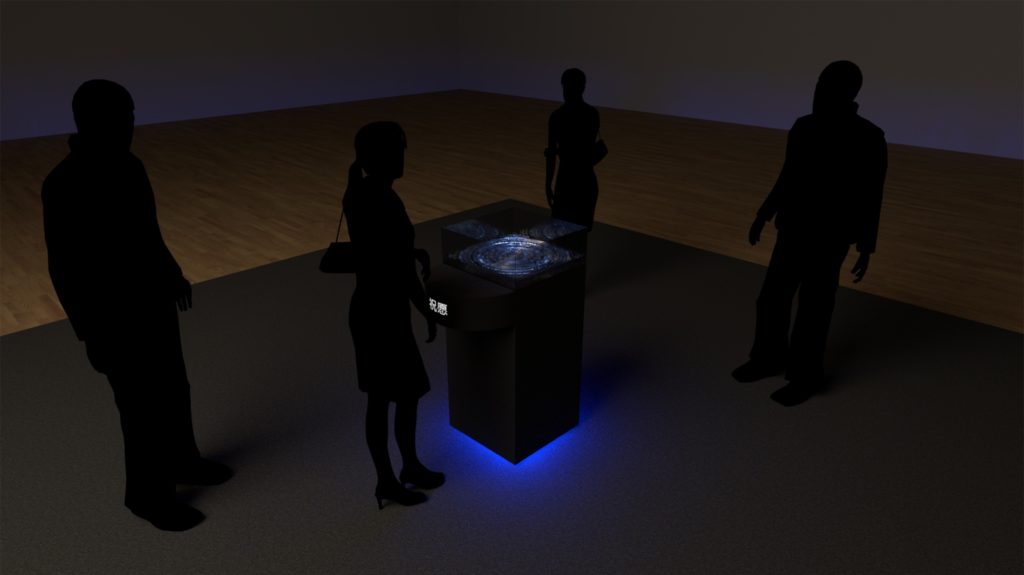

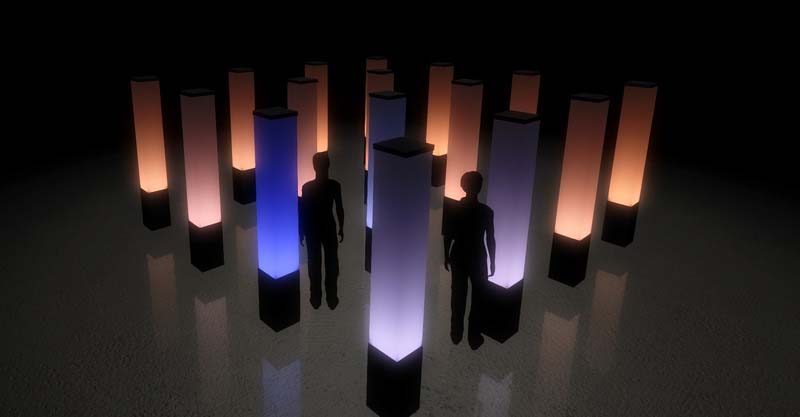

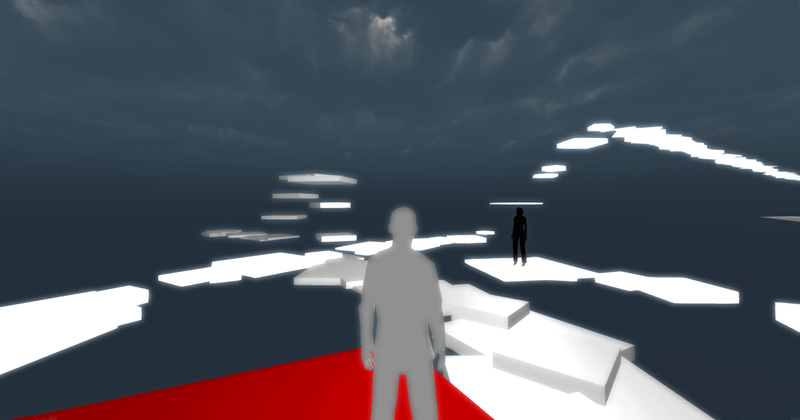

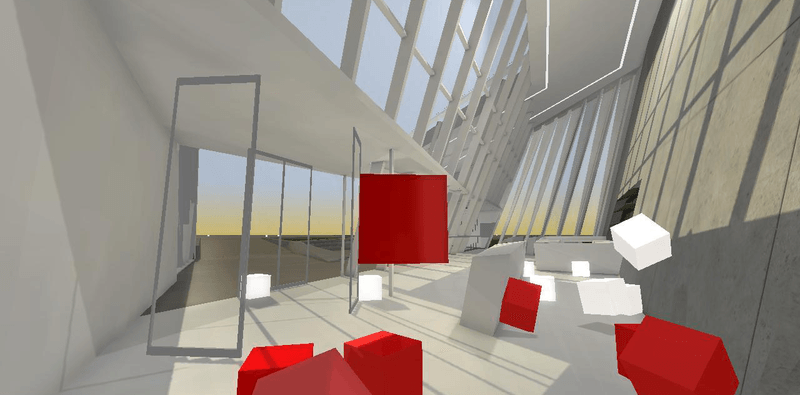

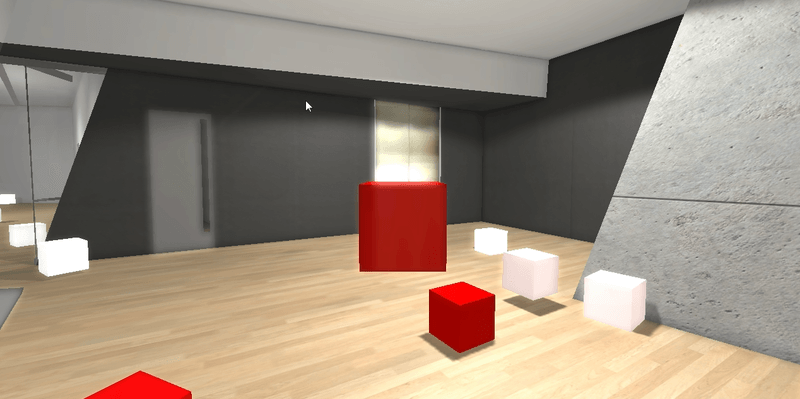

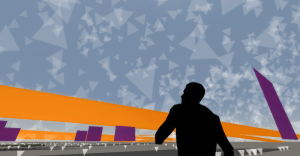

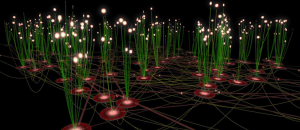

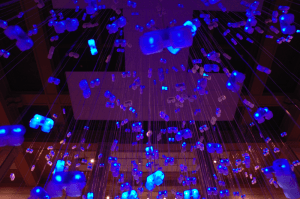

Displaced Resonance v2 is an interactive installation consisting of sixteen reactive forms that are networked in a grid of light and sound. Interaction within the sculptural field is based on a participant’s presence and proximity to each sculpture. The Displaced Resonance installation is connected to a mirrored instance of the field in a virtual environment – bridging both physical and virtual visitors within a shared hybrid space. Visitors to the virtual space are represented by avatars and through their proximity affect the light and sound of each sculpture. Participants in each space are aware of those in the other, uniting both instances within a single hybrid environment.

A computer system using a thermal camera tracks the movement of visitors and responds by controlling the distribution of sound and dynamic RGB data to the LED lights within the sculptural forms. The installation utilizes custom live processing software to transform these sources through the participants’ interaction – displacing the interaction from both their physical and virtual contexts to the processed framework of physical and virtual resonances. The two environments are linked to send and receive active responses from both sides of the installation via messaging, sensors, hardware and scripting.
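The proximity-driven lighting described above can be illustrated with a minimal sketch. It is not the installation's actual software: the 4 x 4 layout, the `REACH_M` response radius, the base color, and every function name are assumptions made for illustration. Only the count of sixteen forms and the 1.5 m spacing (the figure given in the press release) come from the project description.

```python
import math

GRID_SIZE = 4      # assumption: the sixteen forms are laid out 4 x 4
SPACING_M = 1.5    # spacing between forms, as given in the press release
REACH_M = 1.5      # hypothetical radius within which a form responds


def pillar_positions():
    """Return (x, y) floor coordinates in meters for all sixteen forms."""
    return [
        (col * SPACING_M, row * SPACING_M)
        for row in range(GRID_SIZE)
        for col in range(GRID_SIZE)
    ]


def proximity_level(pillar, visitors):
    """Return 1.0 when a visitor stands at the form, falling to 0.0 at REACH_M."""
    if not visitors:
        return 0.0
    nearest = min(math.dist(pillar, visitor) for visitor in visitors)
    return max(0.0, 1.0 - nearest / REACH_M)


def rgb_for_level(level, base_color=(0, 128, 255)):
    """Scale a hypothetical base color by the proximity level."""
    return tuple(round(channel * level) for channel in base_color)


def frame(visitors):
    """Compute one RGB triple per form from tracked visitor positions."""
    return [
        rgb_for_level(proximity_level(pillar, visitors))
        for pillar in pillar_positions()
    ]
```

Calling `frame([(0.0, 0.0)])` lights the corner form at full intensity and leaves forms 1.5 m or more away dark. In a real system the visitor positions would come from the thermal camera's tracking output, and the same level could also drive the sound distribution.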

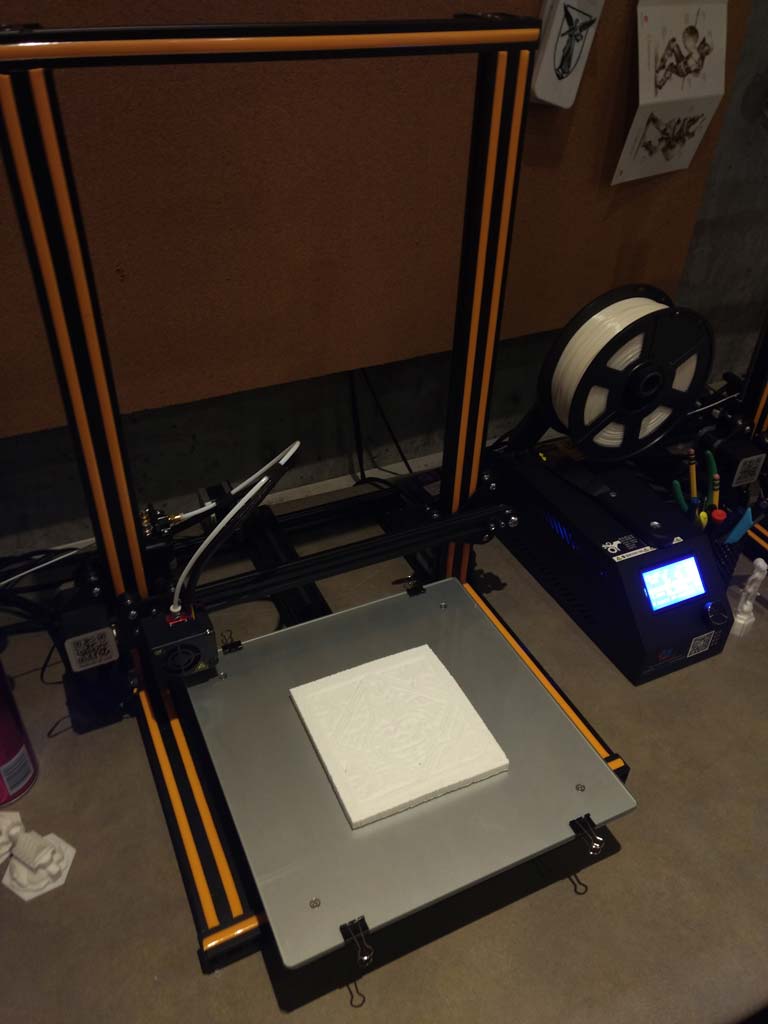

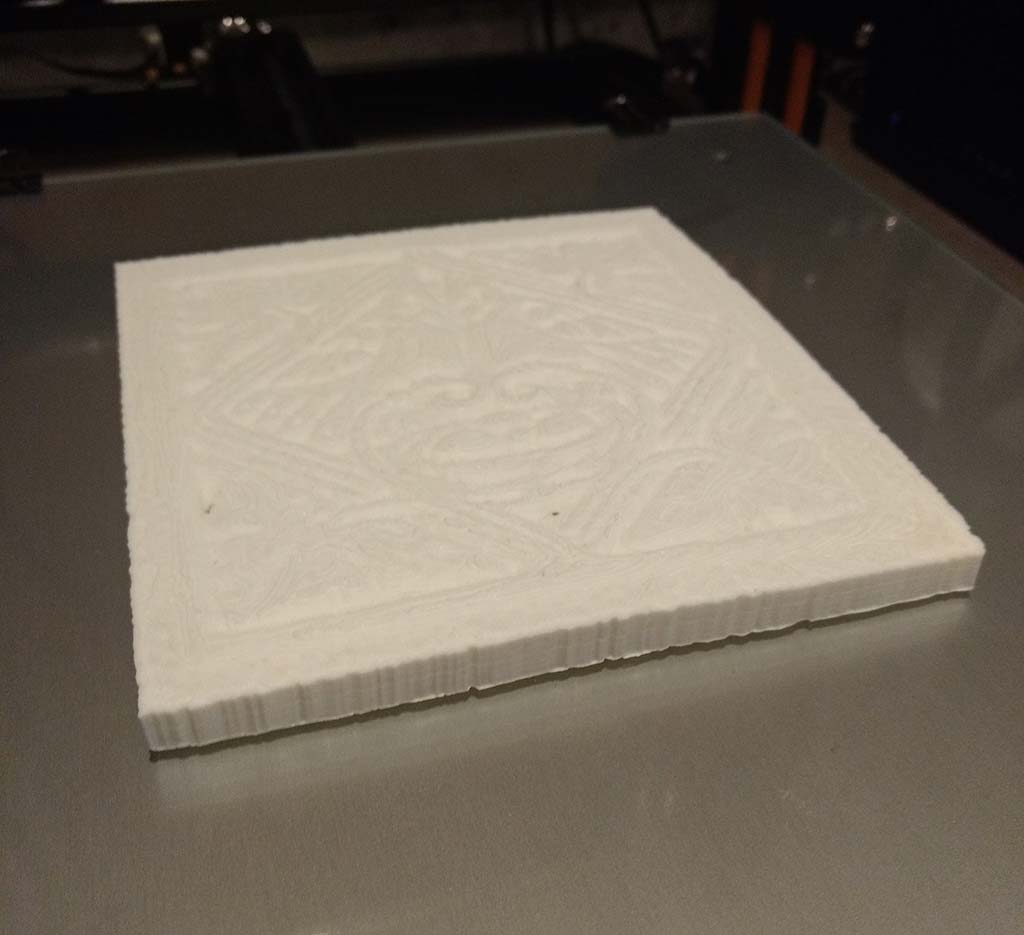

In prototyping this work, the process included both physical and virtual models to design and build the form and the interaction. The physical prototypes were interpreted in a virtual simulation environment, investigating the spatial interaction of the structure. The interactive functionality was tested through scripting before the form was brought into the sensored camera-based version. After several virtual iterations, the form was re-interpreted and fabricated.

John Fillwalk

with Michael Pounds, David Rodriguez, Neil Zehr, Chris Harrison, Blake Boucher, Matthew Wolak, and Jesse Allison.

Third Art and Science International Exhibition

China Science and Technology Museum in Beijing

http://www.tasie.org.cn/index.asp

TASIE Press release

http://www.tasie.org.cn/content_e.asp?id=84

Ball State artists create “forest” of light on display in China and Internet

Muncie, Ind. — Ball State University electronic artists have created a “forest” of light and sound that will be on exhibit in Beijing, China through November, yet also accessible to visitors from Indiana or anywhere else in the world.

That’s possible because “Displaced Resonance,” as the interactive art exhibit is known, has both real-life and virtual components.

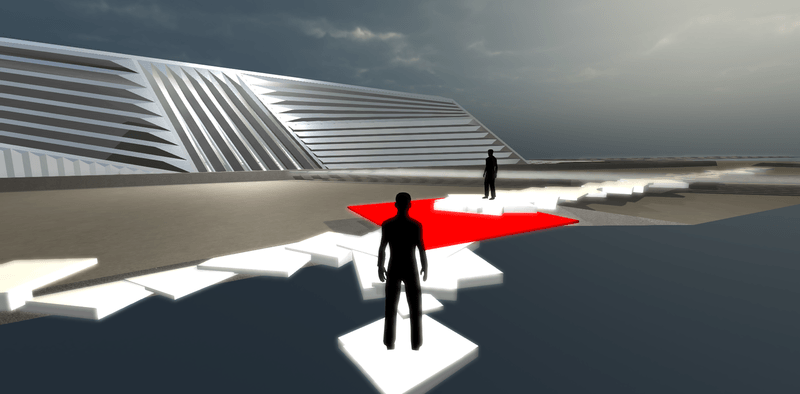

The physical portion has been installed in a gallery of the China Science and Technology Museum in Beijing. There, in-person visitors can negotiate a thicket of 16 interactive sculptures, spaced 1.5 meters apart, that will change colors and emit music as visitors approach.

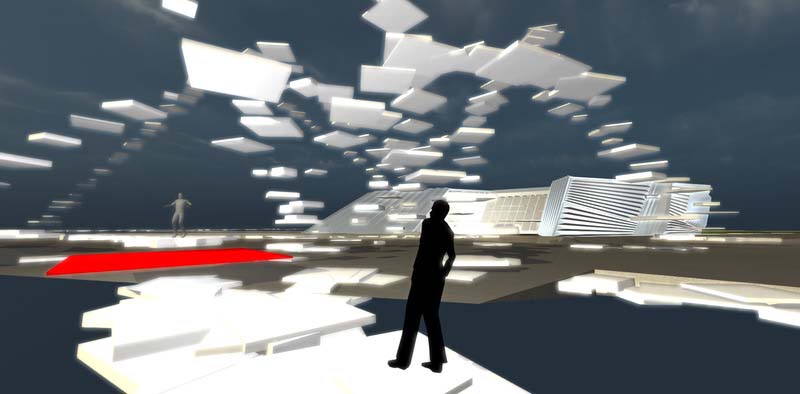

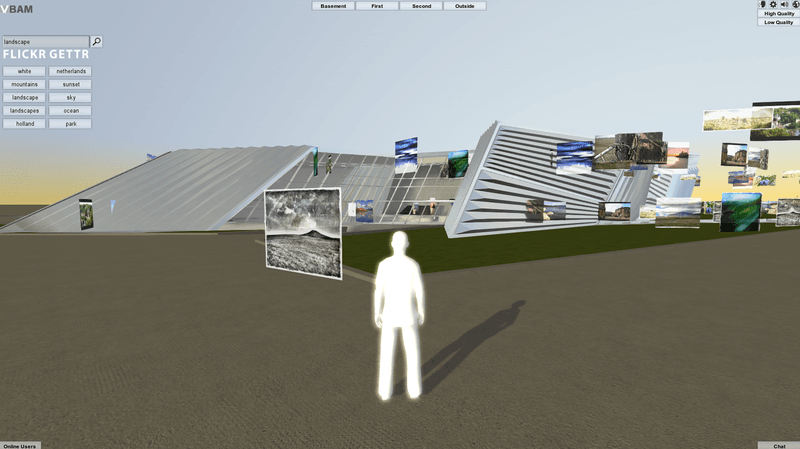

A digital replica of the layout, meanwhile, resides on the Internet, accessible through the museum’s website. Online visitors can wander the virtual exhibit using an avatar, and the digital pillars will change colors and produce sounds, just like their physical counterparts.

But that’s not all — the two pieces interact with each other, says John Fillwalk, director of Ball State’s Institute for Digital Intermedia Arts (IDIA) and Hybrid Design Technologies (HDT), which created the work in collaboration with IDIA staff, students and composer Michael Pounds, BSU.

When an online avatar approaches a virtual pillar, the corresponding real-life column also will change colors, and vice versa. In-person and virtual visitors will produce different colors, however, allowing them to track each other through the exhibit.
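As a rough illustration of this two-way link, the sketch below merges the set of pillars triggered by in-person visitors with the set triggered by avatars into one shared color state that both the physical and virtual fields could display. The specific colors, the blend used when both kinds of visitor are near the same pillar, and all names are hypothetical; the source states only that the two groups produce different colors.

```python
PHYSICAL_COLOR = (255, 96, 0)   # hypothetical color for in-person visitors
VIRTUAL_COLOR = (0, 96, 255)    # hypothetical color for online avatars
IDLE_COLOR = (0, 0, 0)          # pillar with nobody nearby


def pillar_color(physical_near, virtual_near):
    """Pick a color that reveals which kind of visitor is near a pillar."""
    if physical_near and virtual_near:
        # Assumption: average the two colors when both are present.
        return tuple((p + v) // 2 for p, v in zip(PHYSICAL_COLOR, VIRTUAL_COLOR))
    if physical_near:
        return PHYSICAL_COLOR
    if virtual_near:
        return VIRTUAL_COLOR
    return IDLE_COLOR


def shared_state(physical_hits, virtual_hits, pillar_count=16):
    """Build one color list that both the gallery and the virtual field show.

    physical_hits and virtual_hits are sets of pillar indices that currently
    have an in-person visitor or an avatar close by.
    """
    return [
        pillar_color(index in physical_hits, index in virtual_hits)
        for index in range(pillar_count)
    ]
```

For example, `shared_state({0, 3}, {3, 7})` colors pillar 0 for an in-person visitor, pillar 7 for an avatar, and pillar 3 with the blend of the two, so each side can see where the other is standing.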

“It’s what we call hybrid art,” says Fillwalk. “It’s negotiating between the physical world and the virtual. So it’s both sets of realities, and there’s a connection between the two.”

The physical pillars are two meters (or more than 6 feet, 6 inches) tall. They consist of a wooden base containing a sound system, a translucent pillar made of white corrugated plastic, and computer-controlled lighting.

A thermal camera mounted on the museum’s ceiling keeps track of visitors and feeds its data to a computer program that directs the columns to change color and broadcast sounds when someone draws near.

“It’s a sensory forest that you can navigate,” Fillwalk says.

A video screen mounted on a wall overlooking the exhibit allows museum visitors to watch avatars move around the virtual version, while Internet patrons can affect the physical counterpart.

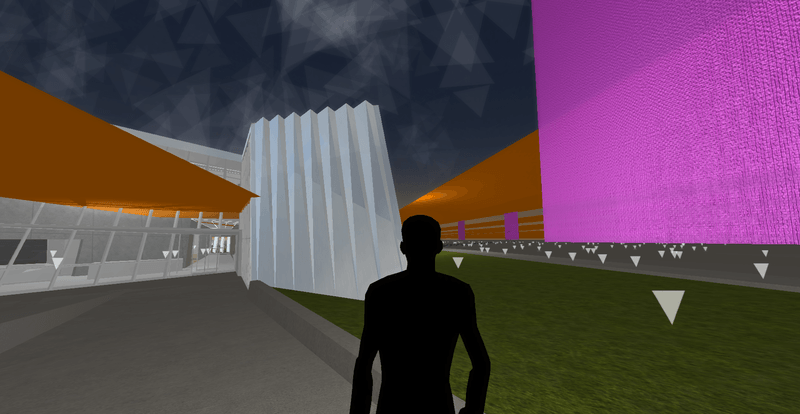

“Displaced Resonance” is the centerpiece of Ball State’s contributions to the Beijing museum’s 3rd Art and Science International Exhibition and Symposium, a month-long celebration of technology and the arts. Ball State was invited to participate because museum curators discovered some of IDIA’s work and liked what they saw, Fillwalk said.

In addition to “Displaced Resonance,” IDIA contributed four other pieces of digital art that museum visitors can view at a kiosk.

Those pieces are:

· “Proxy,” in which visitors create, color and sculpt with floating 3D pixels.

· “Flickr Gettr,” in which visitors can surround themselves with photos from the Flickr web service that correspond to search terms they submit.

· “Confluence,” in which users create virtual sculptures by moving around the screen and leaving a path in their wake.

· “Survey for Beijing,” in which real time weather data from Beijing is dynamically visualized in a virtual environment.

(Note to editors: For more information, contact John Fillwalk, director of the Institute for Digital Intermedia Arts, at 765-285-1045 or jfillwalk@bsu.edu; or Vic Caleca, media relations manager, at 765-285-5948 or vjcaleca@bsu.edu. For more stories, visit the Ball State University News Center at www.bsu.edu/news.)
