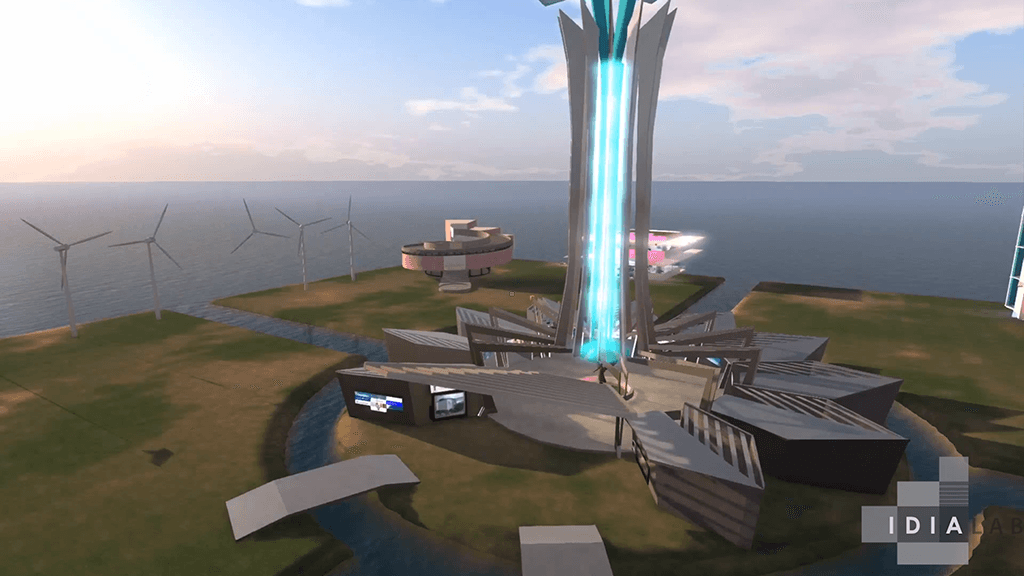

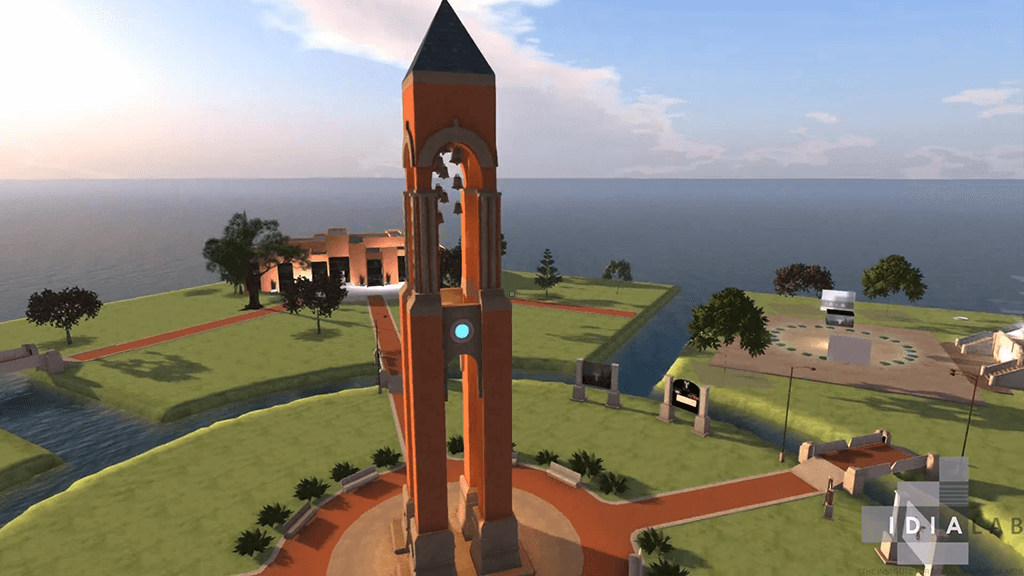

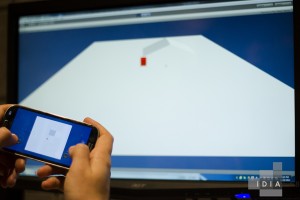

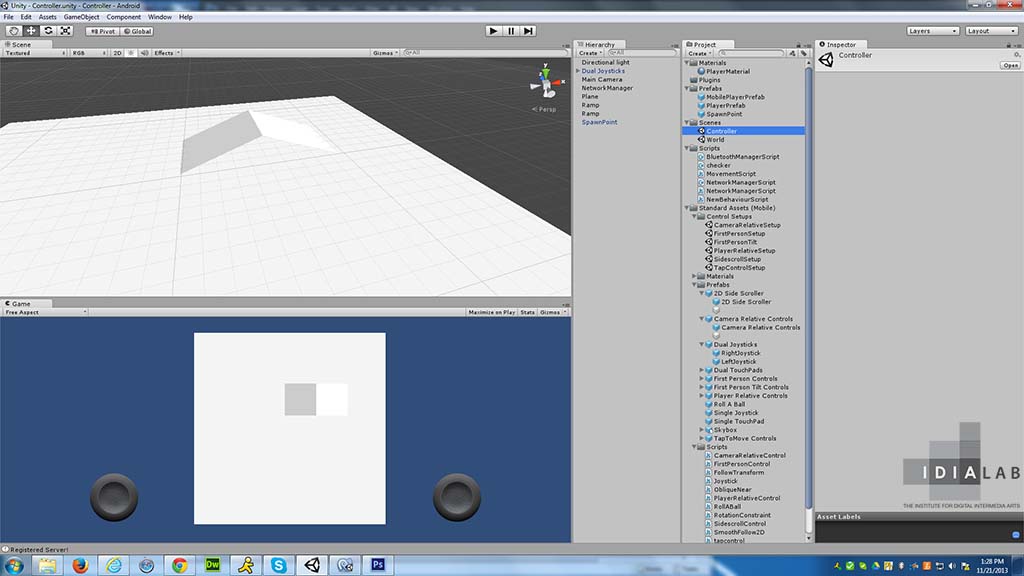

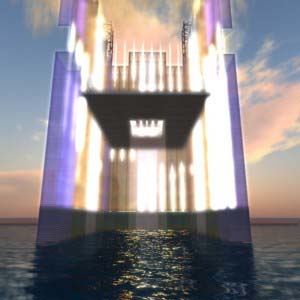

IDIA is pleased to announce REDgrid – a 3D virtual campus to support the educational mission of faculty, staff and students. Ball State community members can use it for free as a virtual gathering space for classroom instruction, small group meetings, presentations, panel discussions, or performances. It is a secure environment hosted and managed solely by Ball State’s IDIA Lab. Virtual classrooms and field experiences can be customized and made private to suit your needs. REDgrid also offers a development platform for online instruction or community displays.

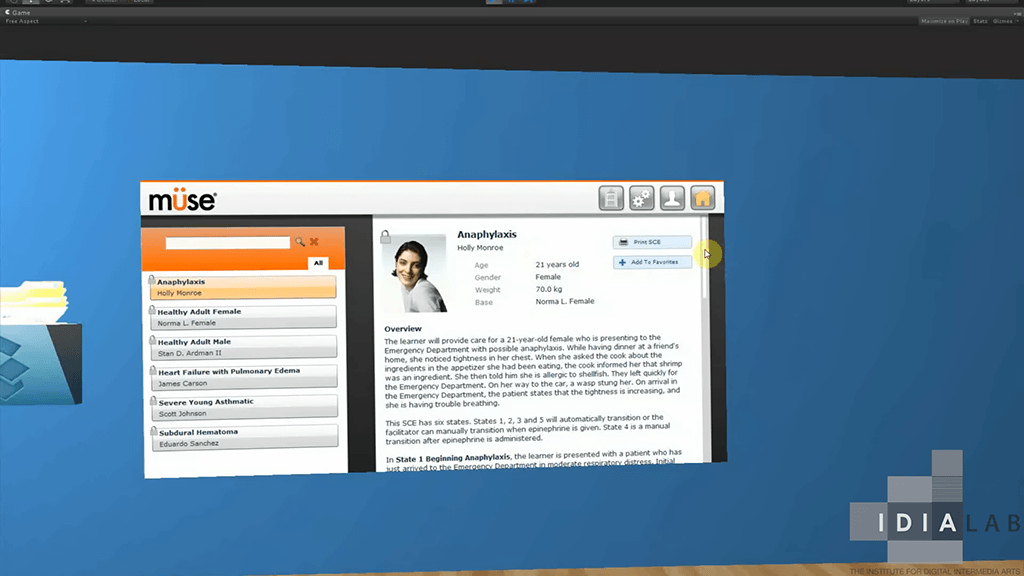

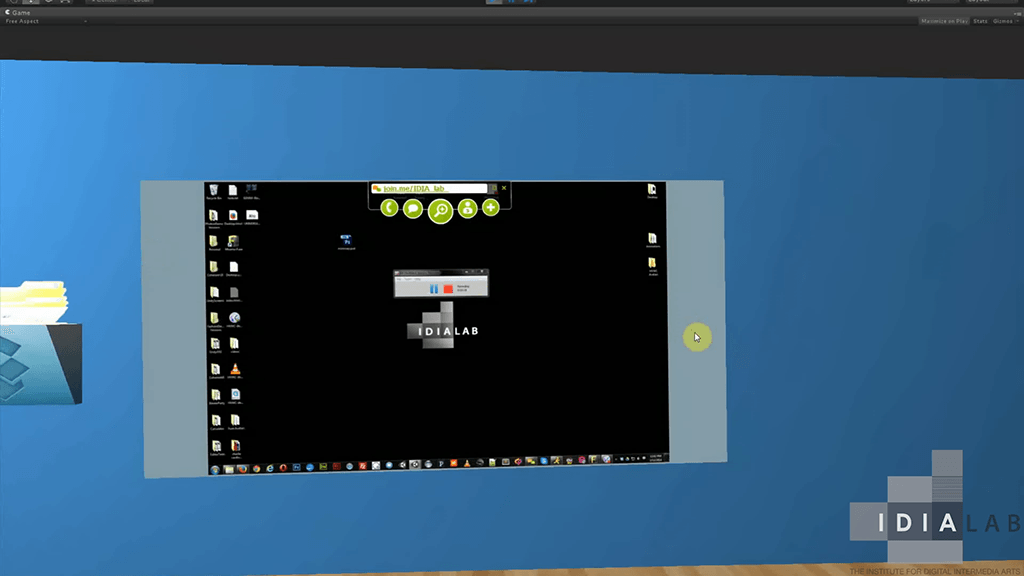

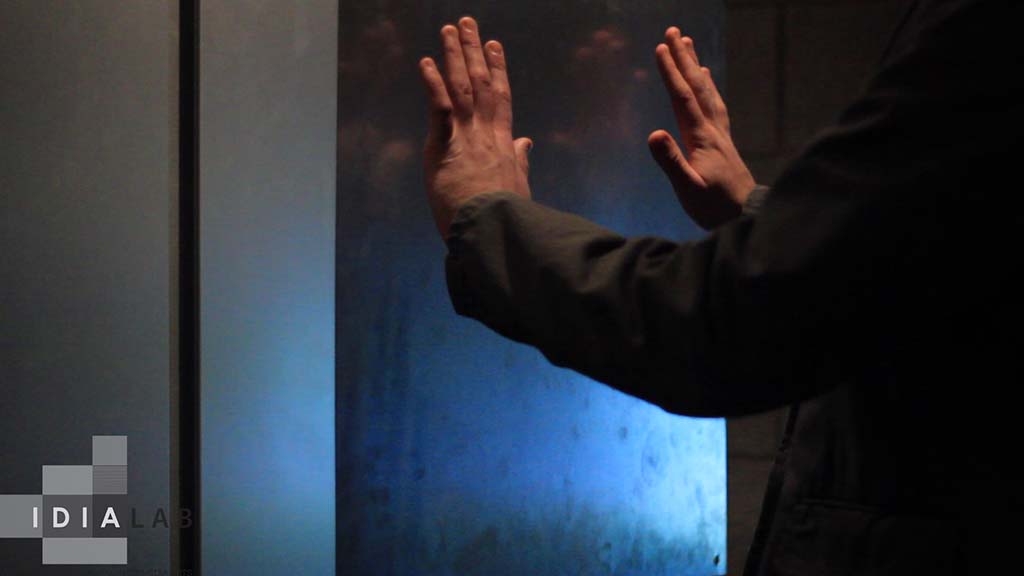

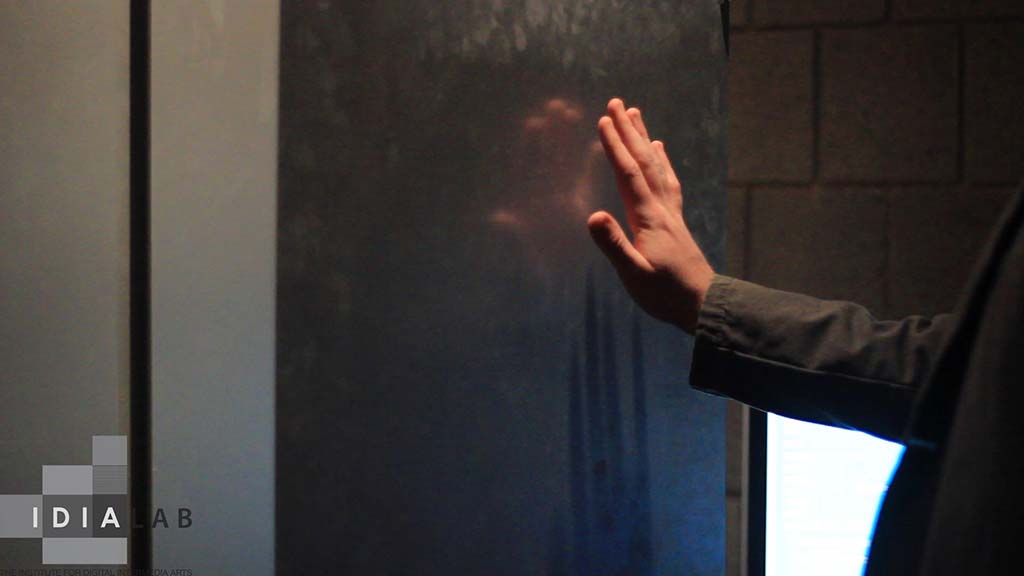

In this video, David gives us a preview of what you are able to do in REDgrid.

Ball State institute creates virtual world to be used for learning, connecting

The Daily News | Amanda Belcher | Published 08/31/15 12:27pm | Updated 09/01/15 5:15pm

By 2013, the Sims video game series had sold 175 million copies worldwide. Its users could create avatars, build houses—just like in reality.

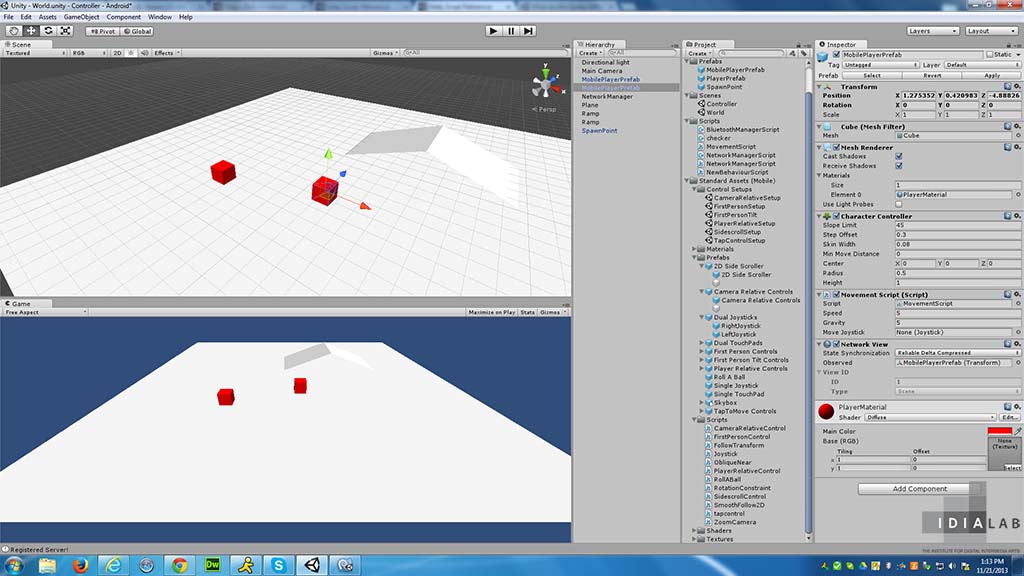

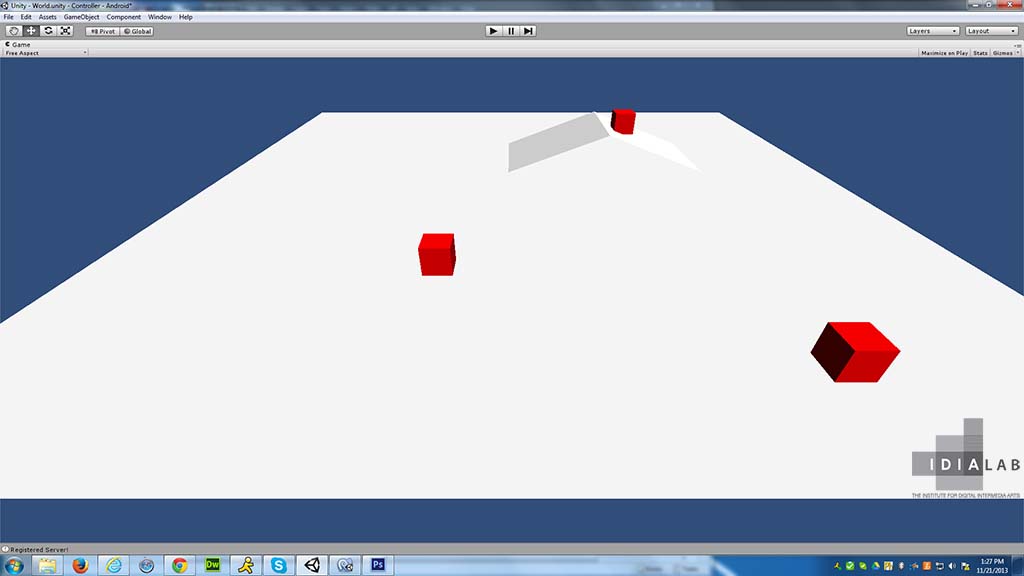

Ball State’s own REDgrid uses a similar concept. Students can make an avatar, walk around a virtual Ball State campus and interact with other avatars via written messages or a headset in this open simulator.

Ball State’s Institute for Digital Intermedia Arts (IDIA) developed REDgrid.

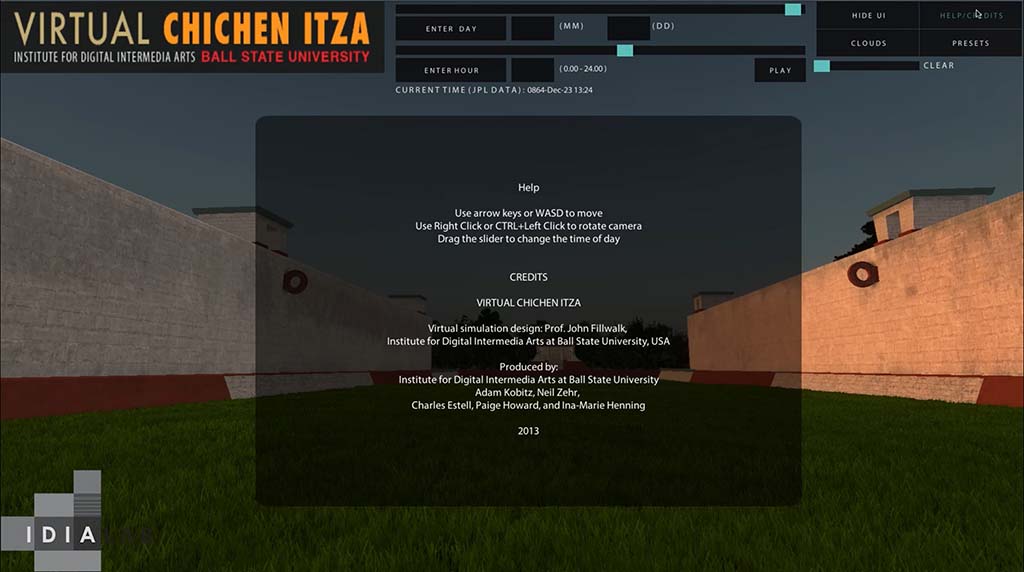

“The server is physically housed at BSU IDIA Lab and was installed with collaboration with Information Technology,” said John Fillwalk, the director of Ball State’s IDIA Lab.

When it comes to REDgrid, the possibilities can seem limitless—and some faculty members have already begun testing the simulator’s boundaries.

Mai Kuha, an assistant professor of English, used REDgrid in an honors class for a gender identity project. Students were assigned a gender and told to create an avatar of that gender. This enabled them to observe how people of different genders are treated differently.

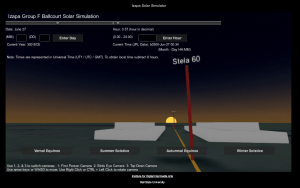

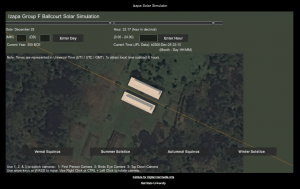

Kuha isn’t the only professor to use REDgrid as a learning tool in the classroom. Ann Blakey, an associate professor of biology, used it in one of her courses for an environmental ethics activity.

Students were assigned a role, such as scientist or environmentalist, and were invited to explore the virtual environment and file a report. The activity gave students the opportunity to see the environment from different perspectives.

Fillwalk envisions even more opportunities for the platform.

“Ball State community members can use it for free as a virtual gathering space including classroom instruction, small group meetings, presentations, panel discussions or performances,” Fillwalk said.

The virtual classrooms and field experiences can be customized to fit each teacher’s needs.

This kind of creative teaching is what Stephen Gasior, an instructor of biology, is looking to expand upon. An enthusiastic voice for REDgrid, Gasior encourages professors and students to utilize this tool. He explains that it gives professors the ability to shape an environment and allows students to experience any number of events or situations.

REDgrid isn’t just for academic purposes either, Gasior said. Students can use the avatars for social experiments too.

“REDgrid represents Ball State’s campus, and international or online students get [the] feeling of being in the campus environment,” he said.

Fillwalk fully understands this aspect of REDgrid.

“We designed it to be a flexible platform connecting BSU faculty and students to international,” he said.

Connection is key with REDgrid. Gasior stressed that it can help build and connect communities—Ball State or otherwise.

Ball State is already working with faculty at other universities so the tool can be used on campuses beyond Ball State’s, Fillwalk said.

“The platform could certainly continue to be expanded,” he said.

Gasior has plans for the future—like researching developmental grants and expanding REDgrid. But IDIA staff can only do so much.

“People who come to REDgrid and have a passion for it will shape the road it will take,” Gasior said.

http://www.ballstatedaily.com/article/2015/08/redgrid-virtual-world