Virtual Hadrian’s Villa Launch at Harvard Center

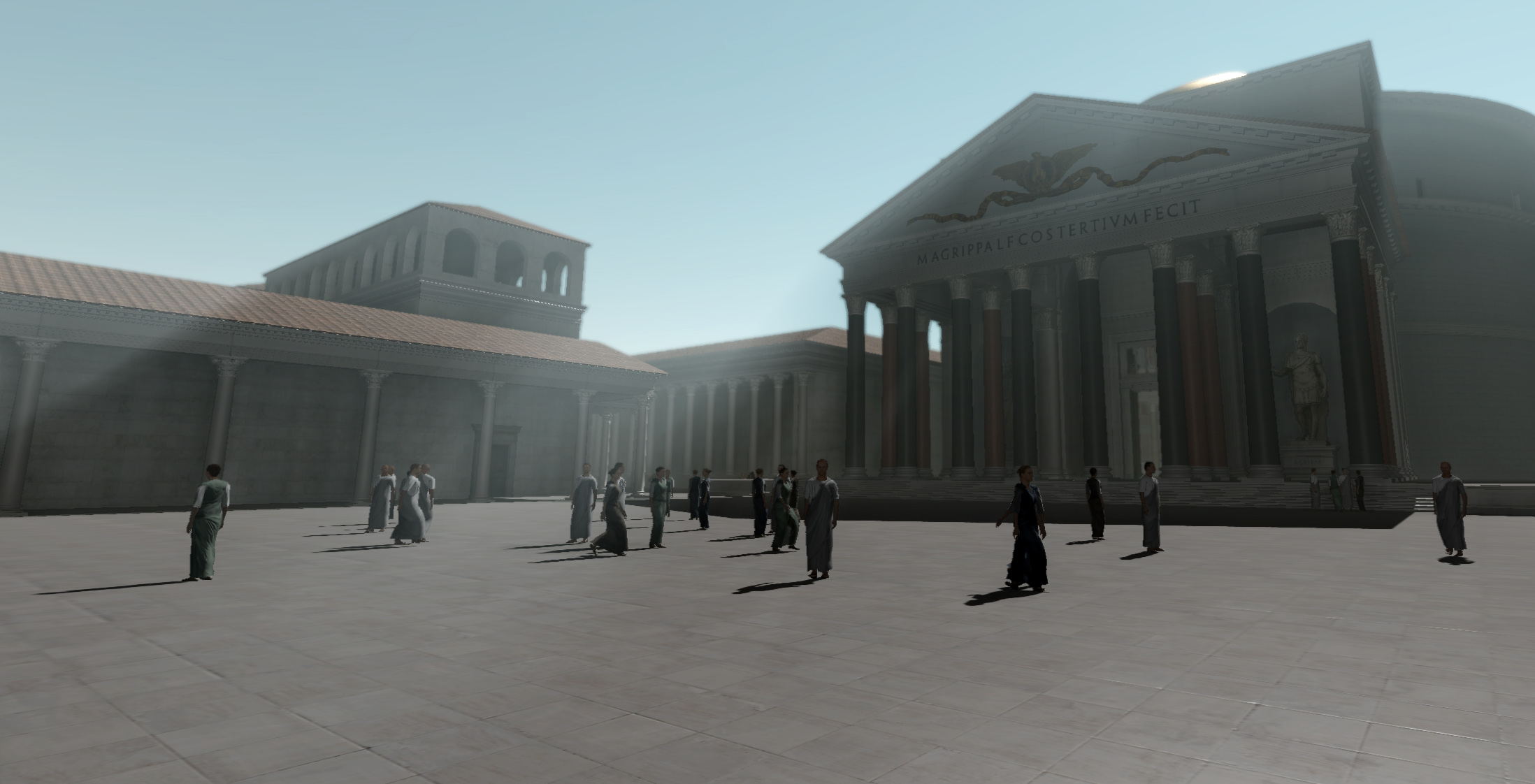

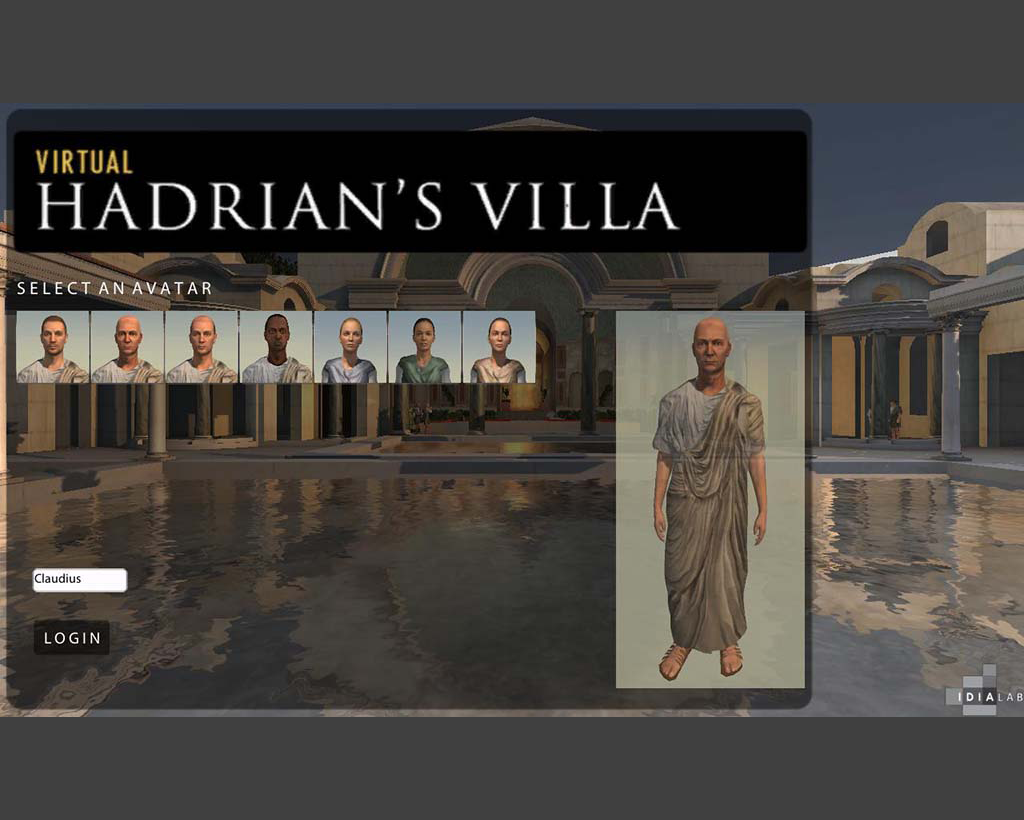

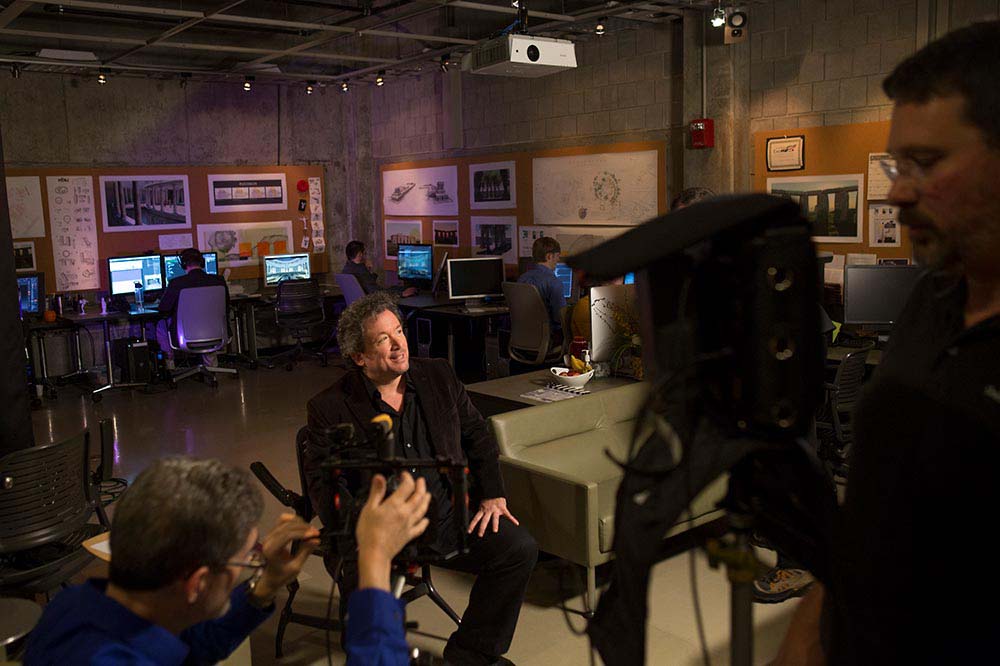

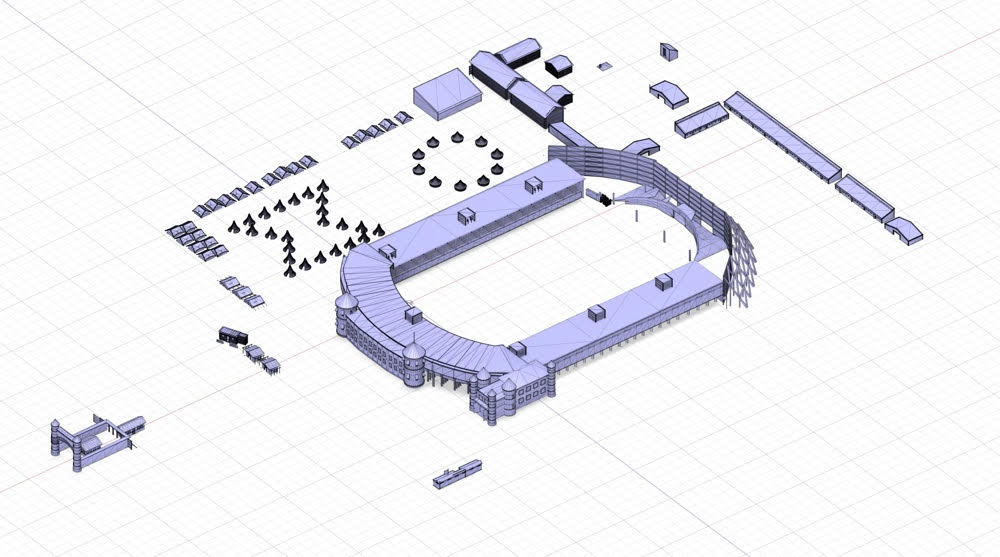

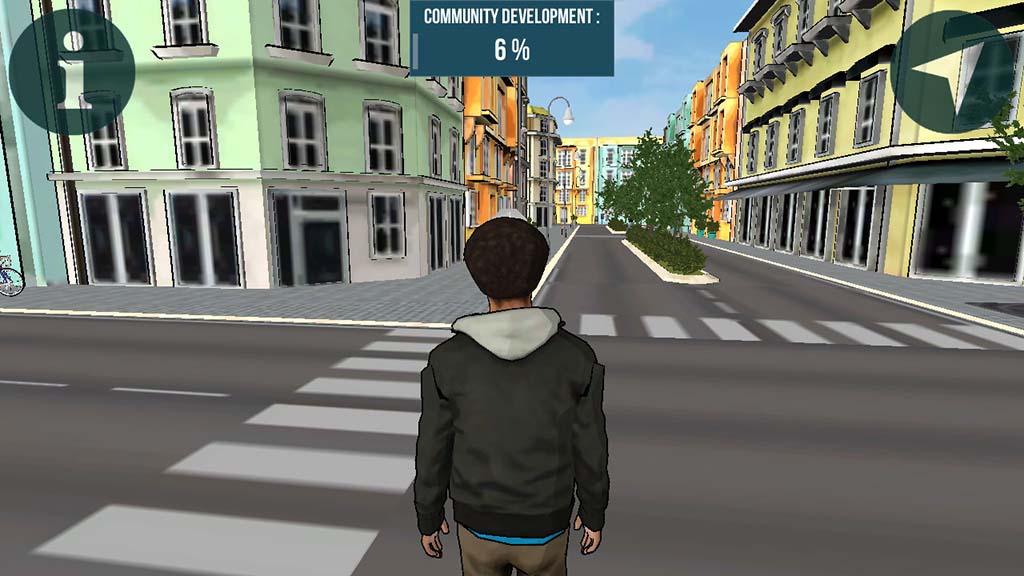

IDIA Lab has designed a virtual simulation of the villa of the Roman Emperor Hadrian, a UNESCO World Heritage site located outside Rome in Tivoli, Italy. The project was produced in collaboration with the Virtual World Heritage Laboratory (VWHL) at Indiana University (IU), directed by Dr. Bernard Frischer, and was funded by the National Science Foundation. This large-scale recreation virtually interprets the entire villa complex in consultation with the world’s foremost Villa scholars. The project was authored in the Unity game engine as a live, multi-user online learning environment that allows students and visitors to immerse themselves in all aspects of the simulated villa. It launched at the Harvard Center for Hellenic Studies in Washington, DC, on November 22, 2013. The web player versions of the Hadrian’s Villa project are funded through a grant from the Mellon Foundation.

The Launch of the Digital Hadrian’s Villa Project

The Center for Hellenic Studies, Ball State University, and Indiana University

Friday, November 22, 2013

Harvard Center for Hellenic Studies

Washington, DC

Speakers:

John Fillwalk, IDIA Lab, BSU

Bernard Frischer, VWHL, IU

Marina Sapelli Ragni

The presentations included previews of:

The Virtual World of Hadrian’s Villa

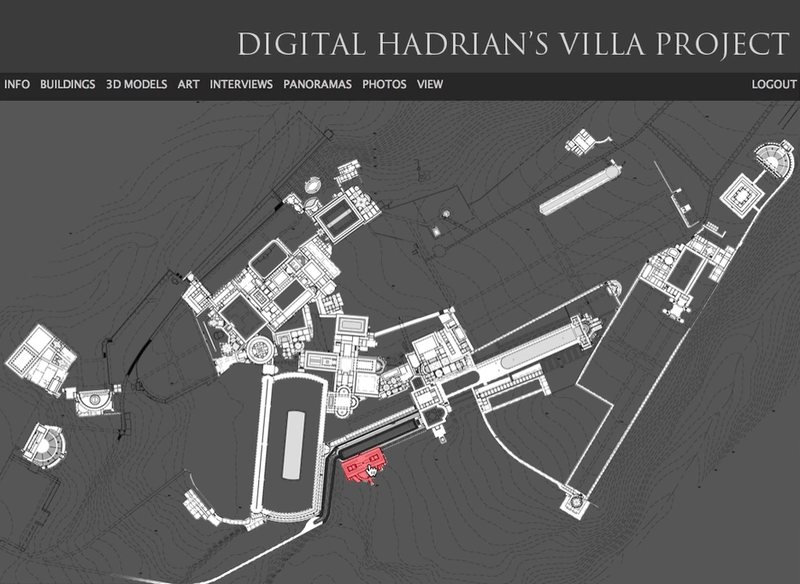

The Digital Hadrian’s Villa website

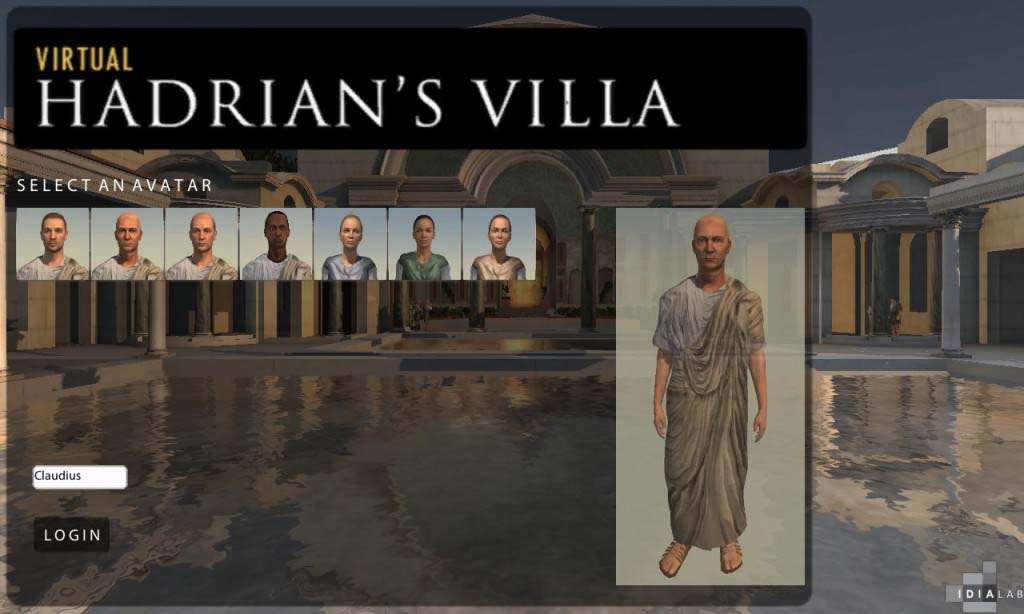

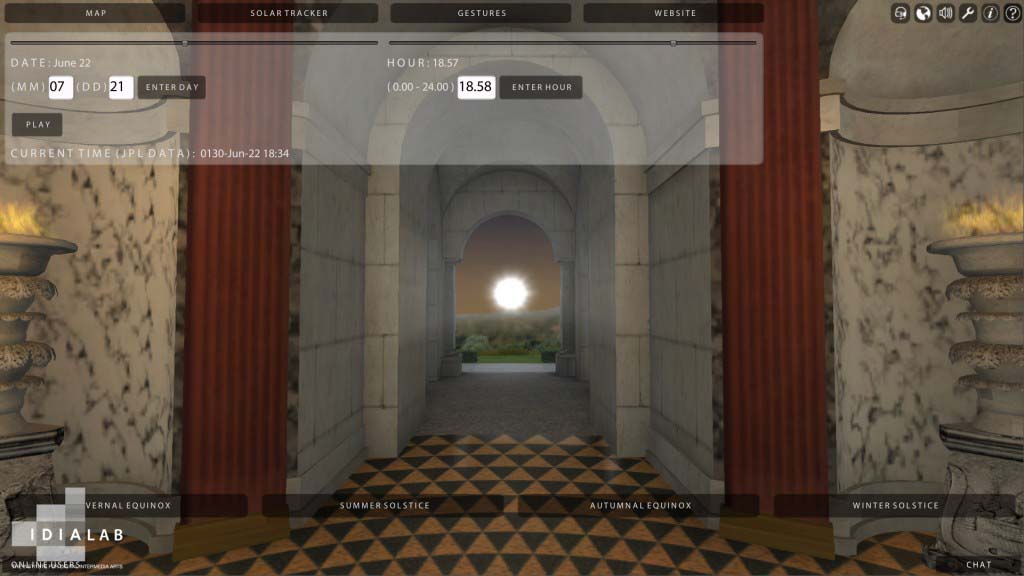

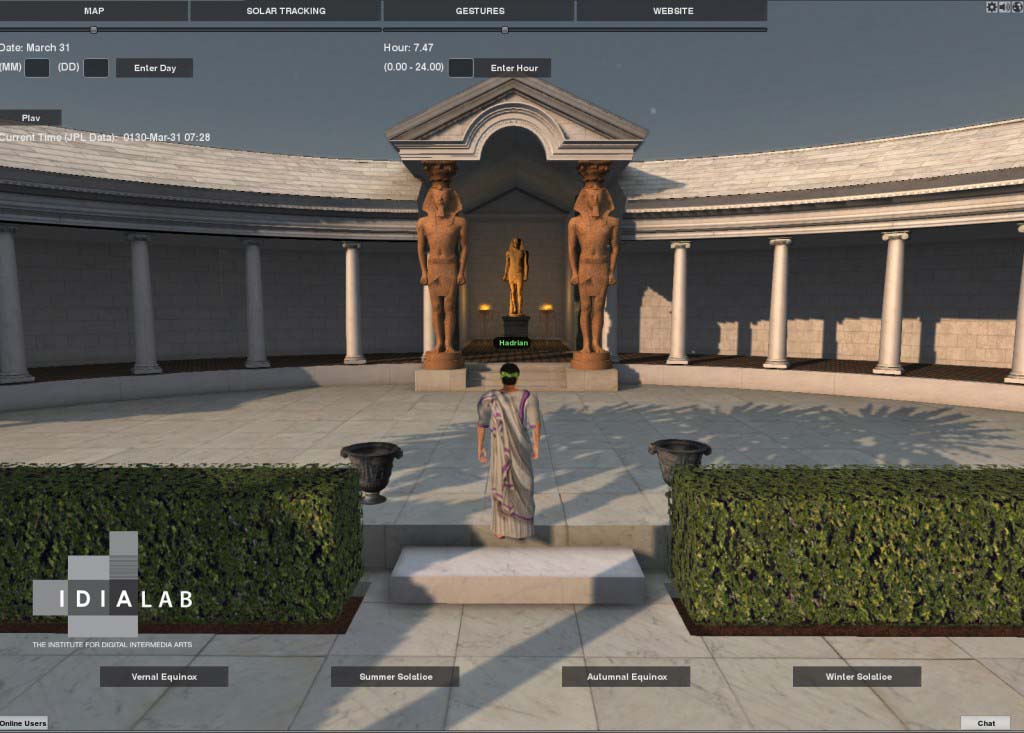

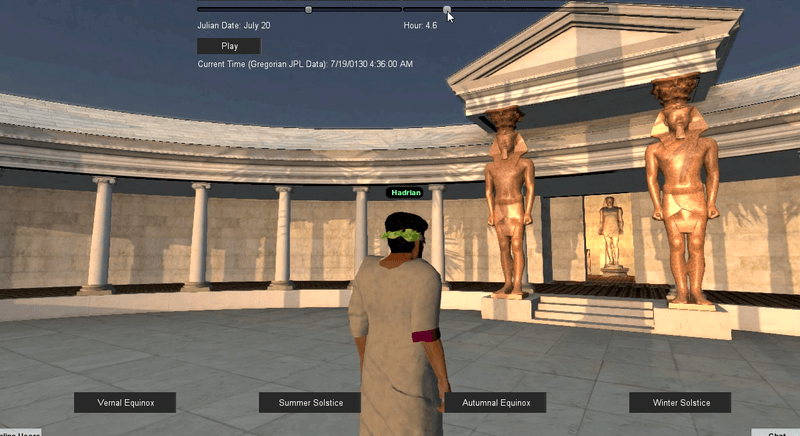

The project not only recreates the villa buildings but also includes a complete Roman avatar system, non-player characters with artificial intelligence, furniture, appropriate vegetation, a dynamic atmospheric system, and a sophisticated user interface. The interface provides learning, navigation, reporting, and assessment opportunities, and it also allows users to set the position of the sun for any date in AD 130 using data from the Horizons database at NASA’s Jet Propulsion Laboratory (JPL), so that hypotheses about the astronomical alignment of architectural features during solstices and equinoxes can be tested. Learning communities are briefed on the culture and history of the villa and learn to navigate the virtual environment before immersing themselves in it. The avatar system allows visitors to enter the world by selecting a class and gender, already aware of the customs and behavior expected of a Roman aristocrat, soldier, slave, or politician.

Khan Academy Walkthrough of Virtual Hadrian’s Villa: http://youtu.be/Nu_6X04EGHk

Link to Virtual Hadrian’s Villa Walkthrough: http://youtu.be/tk7B012q7Eg

The Digital Hadrian’s Villa Project:

Virtual World Technology as an Aid to Finding Alignments between Built and Celestial Features

Bernard Frischer¹

John Fillwalk²

¹Director, Virtual World Heritage Laboratory, University of Virginia

²Director, IDIA Lab, Ball State University

Hadrian’s Villa is the best-known and best-preserved of the imperial villas built in the hinterland of Rome by emperors such as Nero, Domitian, and Trajan during the first and second centuries CE. A World Heritage site, Hadrian’s Villa covers at least 120 hectares and consists of ca. 30 major building complexes. Hadrian built this government retreat about 20 miles east of Rome between 117, when he became emperor, and 138 CE, the year he died. The site has been explored since the 15th century and in recent decades has been the object of intense study, excavation, and conservation (for a survey of recent work, see Mari 2010).

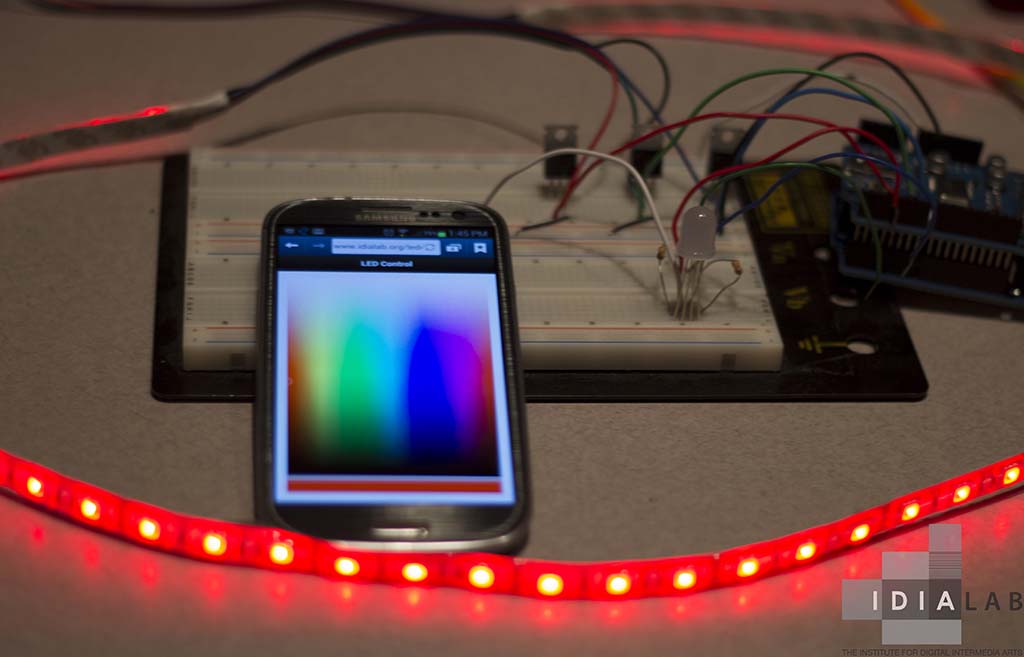

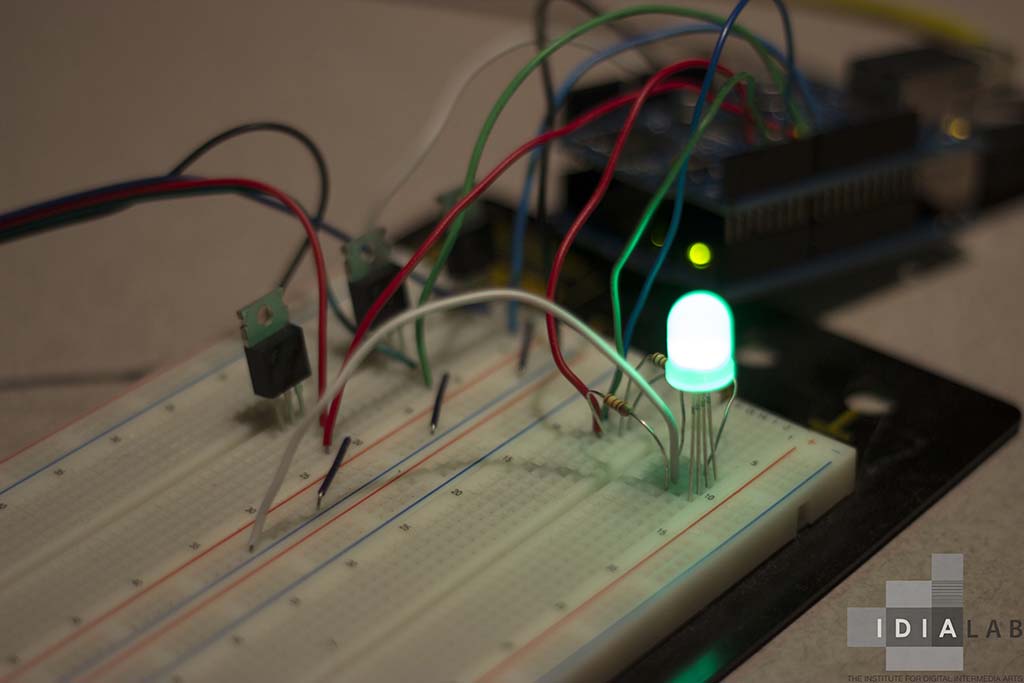

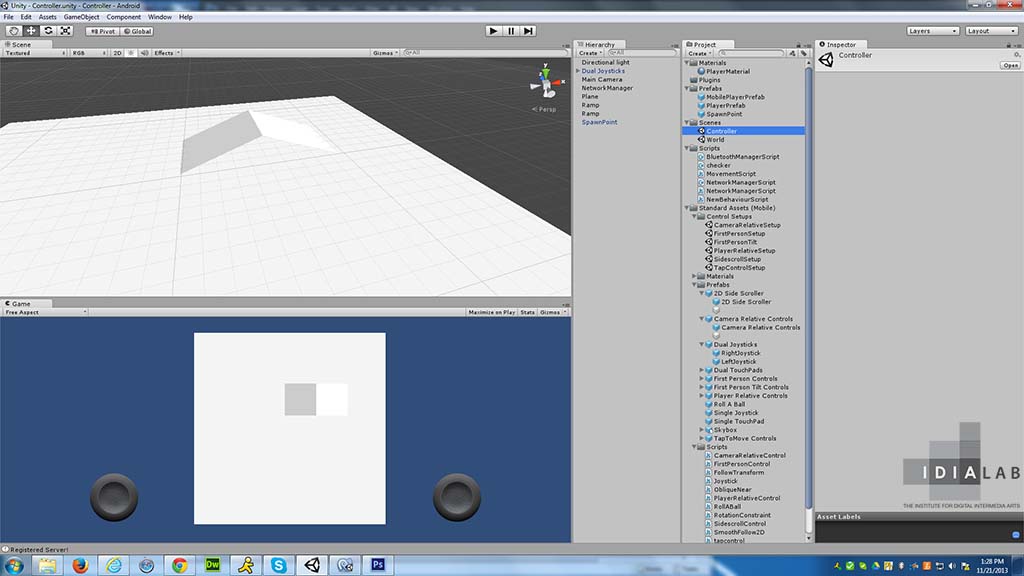

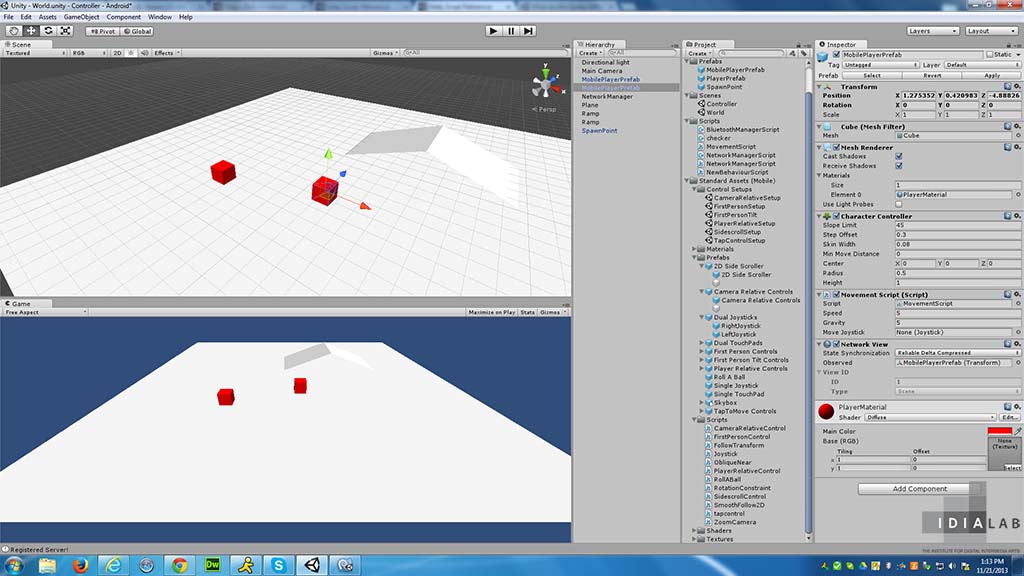

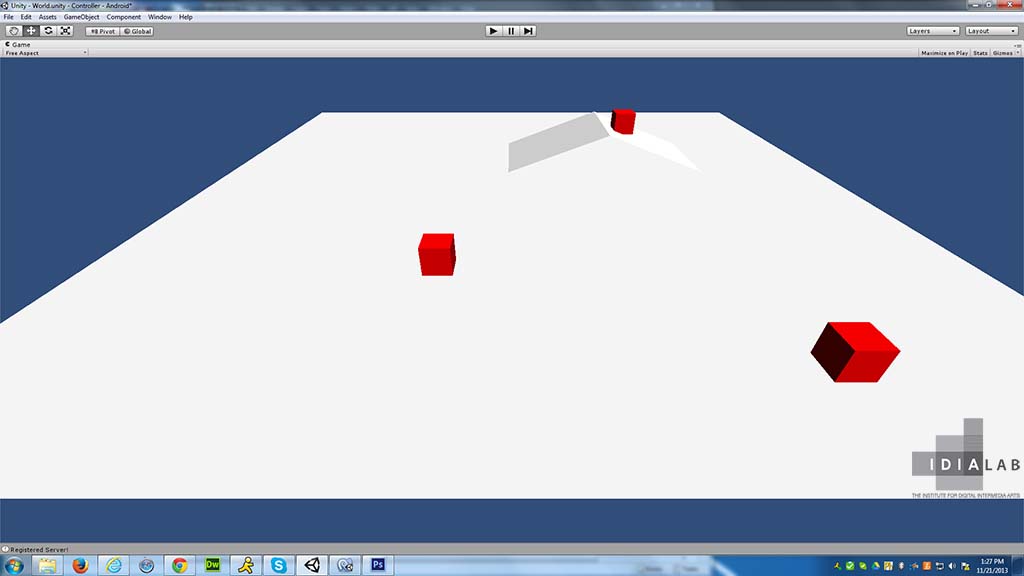

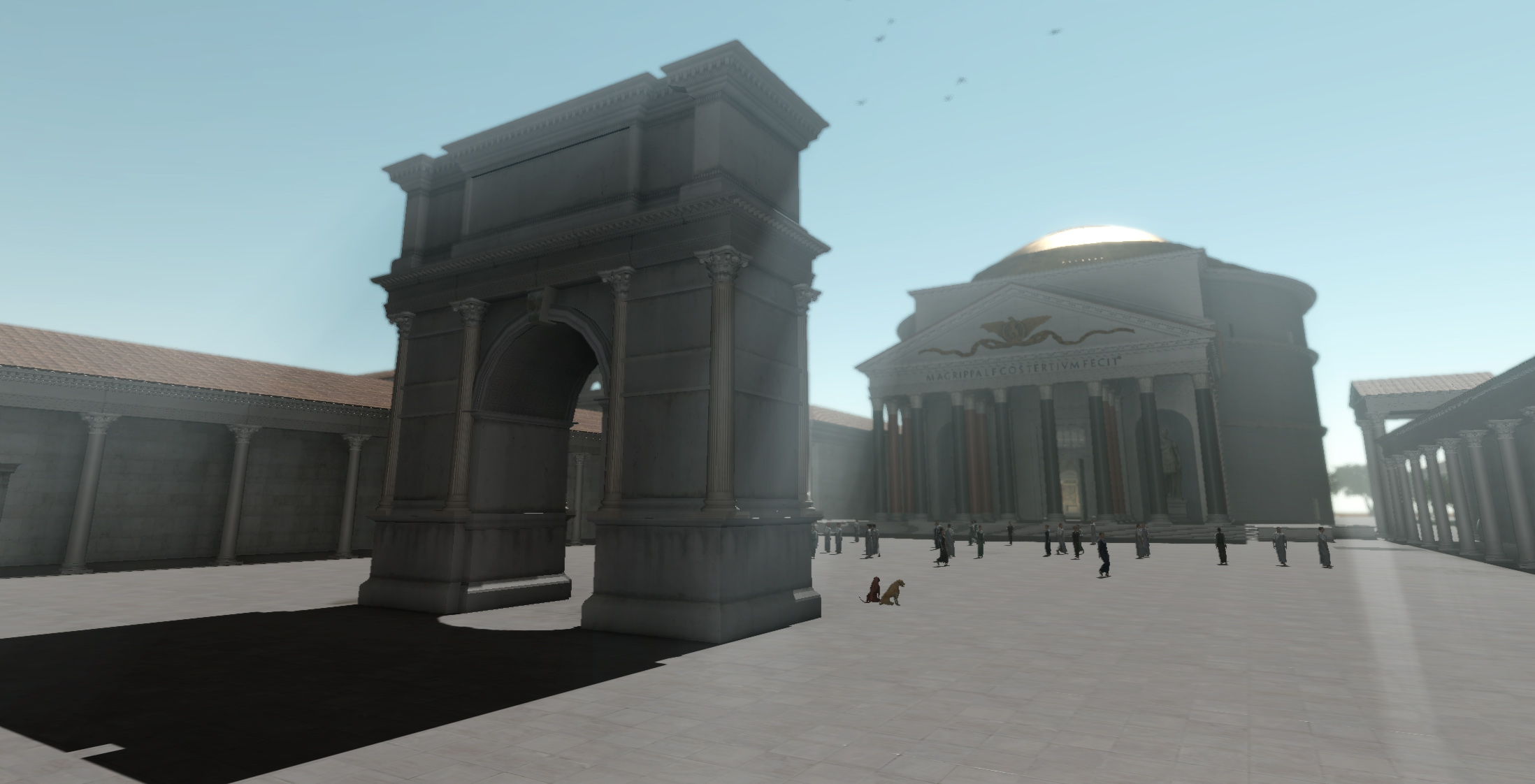

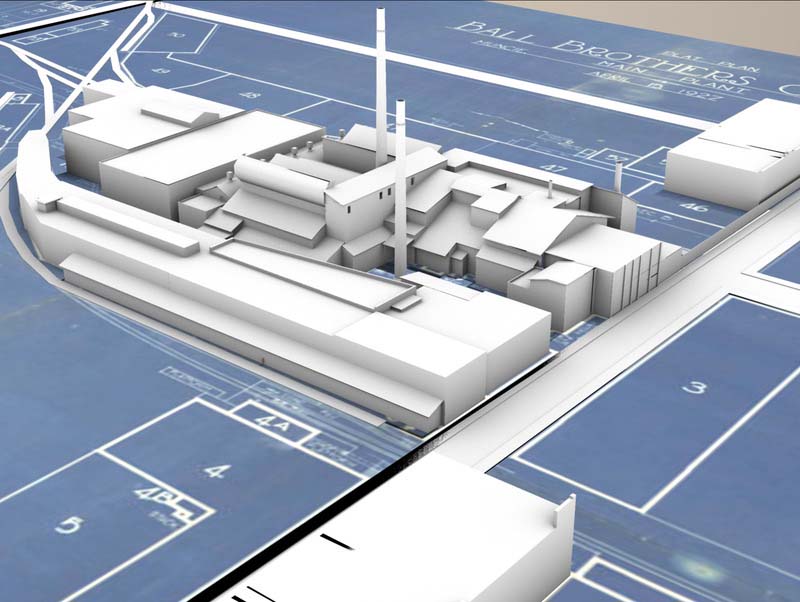

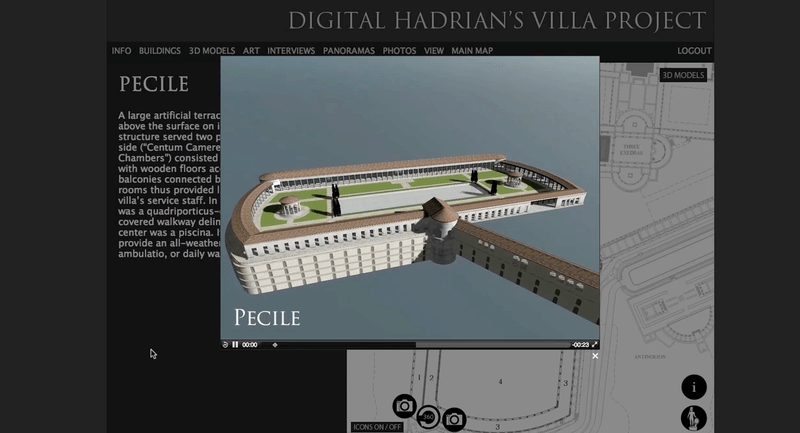

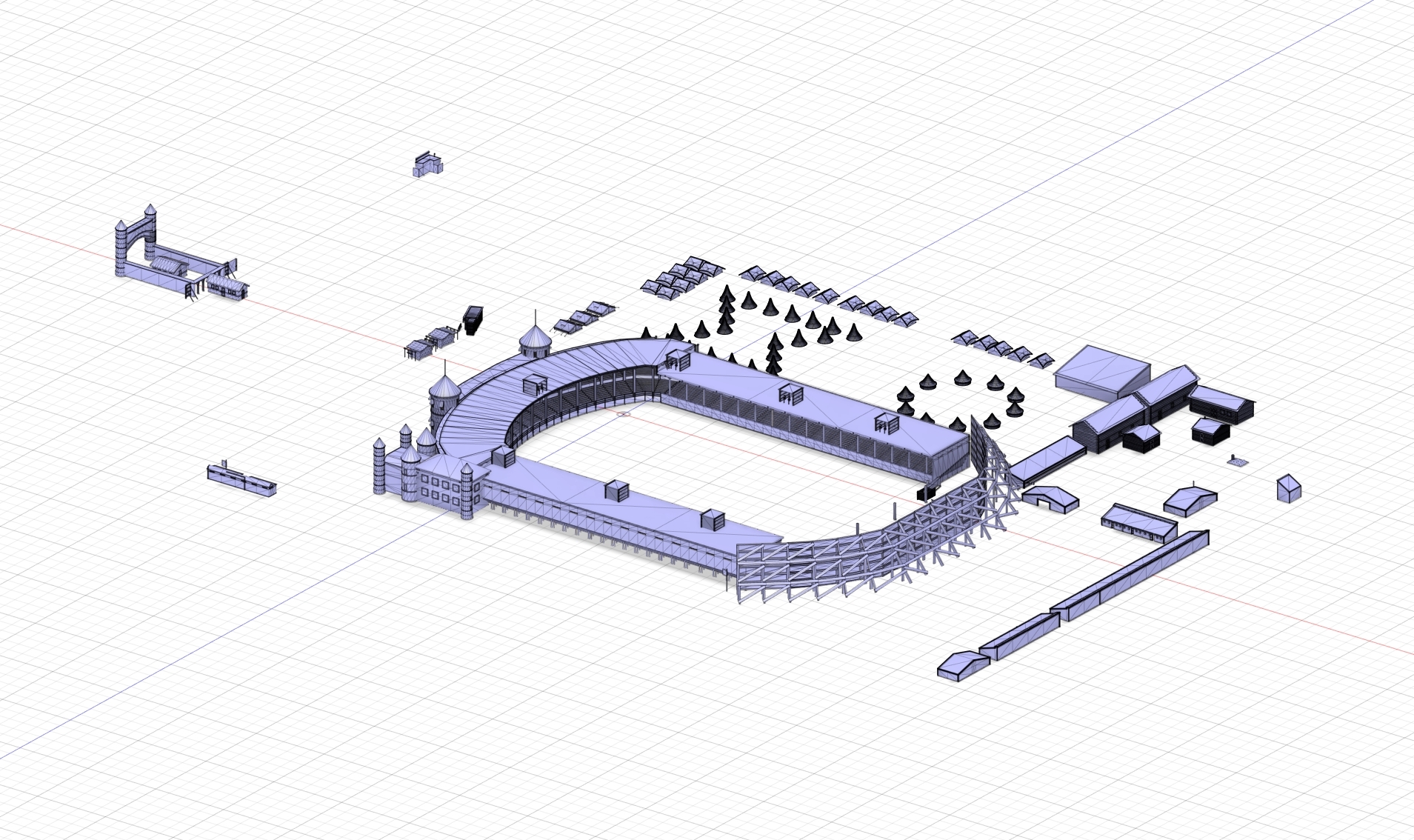

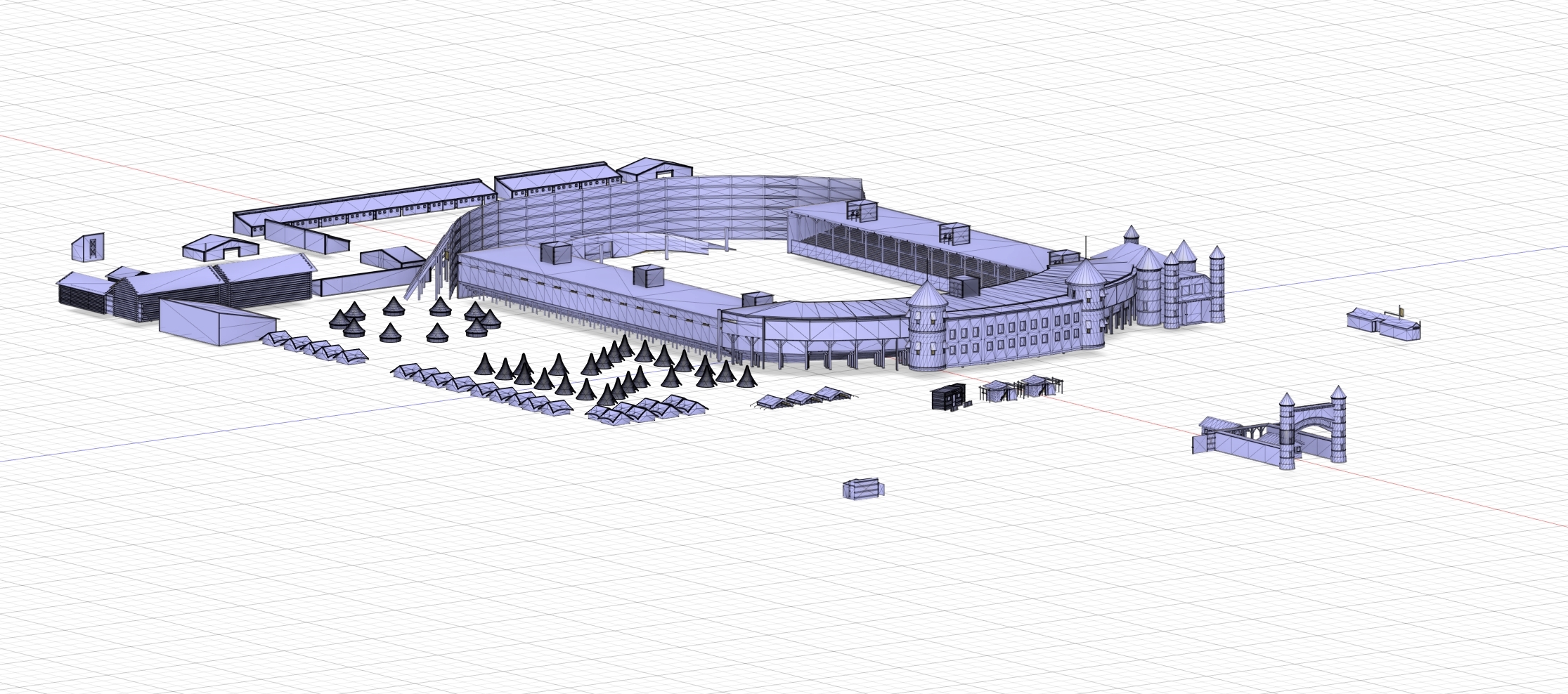

From 2006 to 2011, with the generous support of the National Science Foundation[1] and a private sponsor, the Virtual World Heritage Laboratory created a 3D restoration model of the entire site, authored in 3DS Max. From January to April 2012, Ball State University’s Institute for Digital Intermedia Arts (IDIA Lab) converted the 3D model to Unity 3D, a virtual world (VW) platform, so that it could be explored interactively, populated by avatars of members of the imperial court, and published on the Internet along with a related 2D website presenting the documentation that undergirds the 3D model.

The 3D restoration model and related VW were made in close collaboration with many of the scholars who have written the most recent studies on the villa.[2] Our goal was to ensure that all the main elements, from terrain, gardens, and buildings to furnishings and avatars, were evidence-based. Once finished, the VW was used in two research projects.

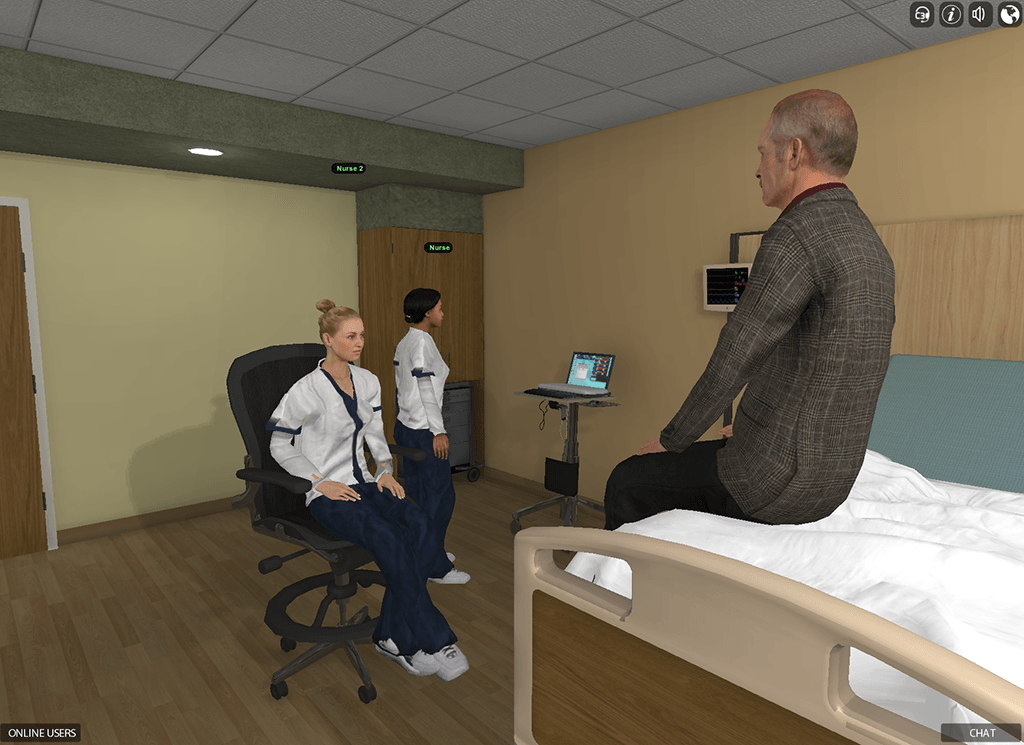

The first project was an NSF-sponsored study of the usefulness of VW technology in archaeological education and research. We used the virtual villa in undergraduate classes at Xavier University and the University of Virginia to investigate the thesis of two recent studies by project advisors Michael Ytterberg and Federica Chiappetta about how this enormous built space was used by six different groups of ancient Romans, ranging from the Emperor and Empress to ordinary citizens and slaves (Ytterberg 2005; Chiappetta 2008). Avatars representing these groups have been created and are operated by undergraduate students as a Problem-Based Learning (PBL) experience. The students are observed by subject experts, who use the data generated to test and, if necessary, refine the initial theses about how circulation through the villa was handled. The results are still being evaluated. Preliminary indications are that using a VW in a PBL educational context is very effective at taking advantage of the known connection between the hippocampus and long-term learning, especially when the information to be mastered is spatial (Kandel 2007).

The second project involved using the VW for new archaeoastronomical studies. Most of our advisors’ publications, like the older work by archaeologists that preceded them, have concentrated on archaeological documentation, restoration, and formal and functional analysis. The latest research by advisor De Franceschini and her collaborator Veneziano (2011) combined formal and functional analysis: it considered the alignment of certain important parts of the villa in relation to the sun’s apparent path through the sky on significant dates such as the solstices. In their recent book they showed how two features of the villa are aligned with the solstices: the Temple of Apollo in the Accademia and the Roccabruna. We used the VW to extend their research to other areas of the villa, taking advantage of 3D technology both to restore the sun to its correct place in the sky and to repair the damaged architecture of the villa, a step De Franceschini and Veneziano had independently recommended before they learned about our digital model.

The work of De Franceschini and Veneziano is innovative. Archaeoastronomy has become an accepted field of study in recent decades, and a considerable amount of work has been done in Old and New World archaeology. In Roman archaeology, however, this approach is still rarely encountered. Significantly, one of the few compelling studies concerns the most famous Hadrianic building: the Pantheon in Rome. Hannah and Magli 2009 and Hannah 2011 have shown a number of solar alignments in the building, the most notable of which are the sun’s illumination of the entrance doorway at noon on April 21, and the sunset silhouetting the statue of Hadrian as Sun god on a four-horse chariot atop the Mausoleum of Hadrian, as viewed from the middle of the Pantheon’s plaza on the summer solstice. Like the summer solstice, April 21 was a significant date: it was the day of the annual festival in Rome known as the Parilia (renamed the Romaia by Hadrian),[3] which celebrated the founding of Rome.

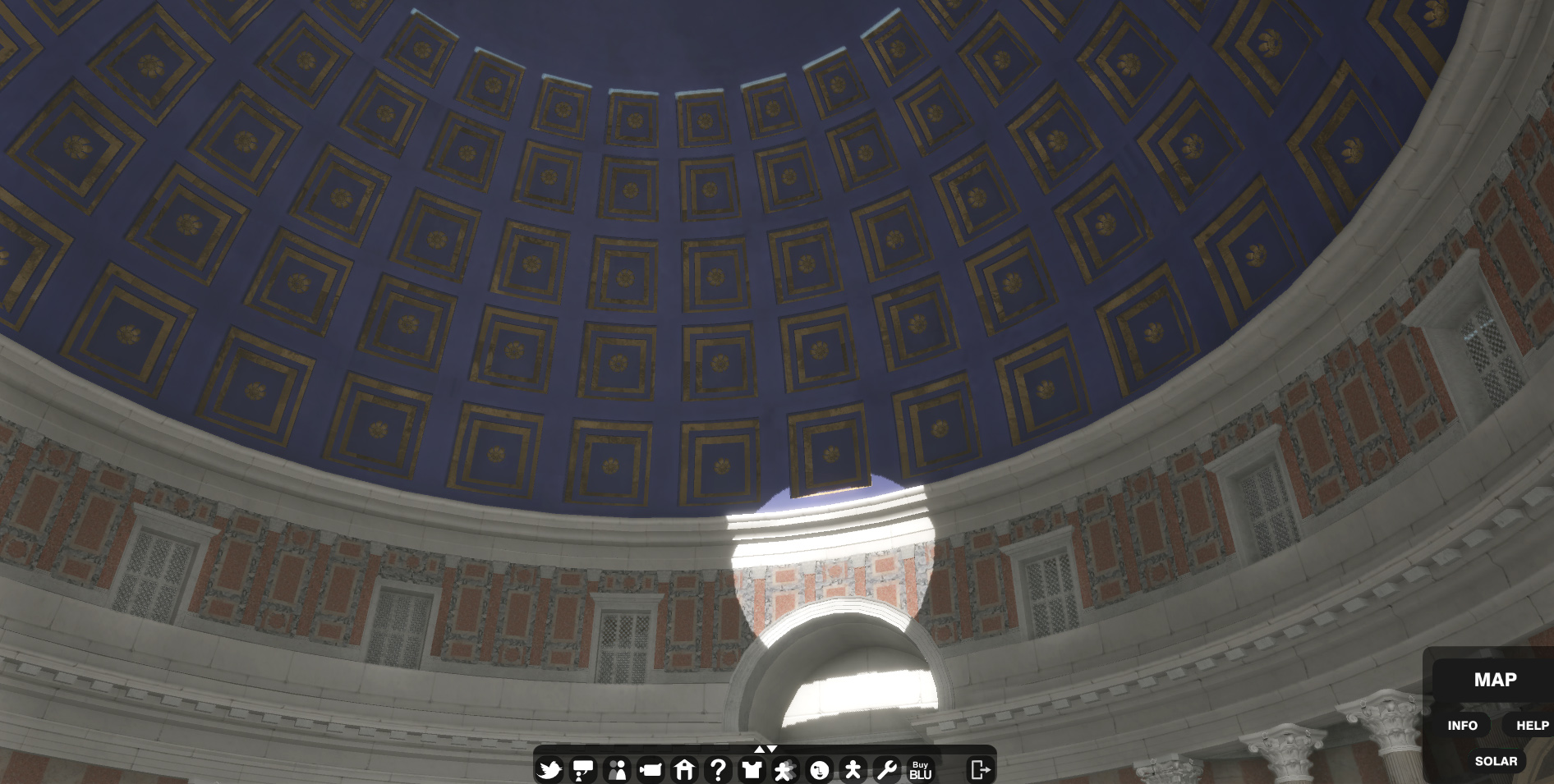

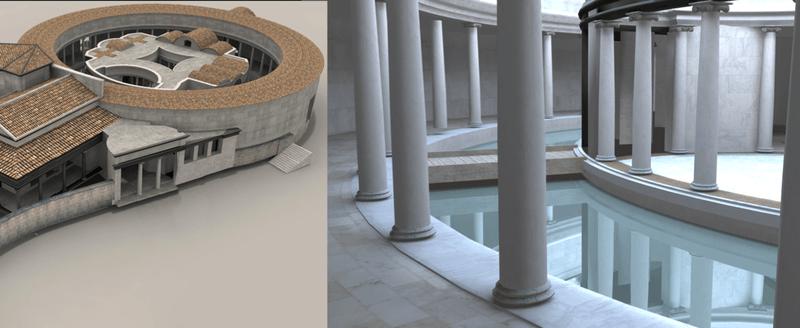

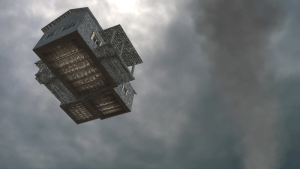

De Franceschini and Veneziano followed up an observation by Mangurian and Ray (2008) to document an impressive example of solar alignment at Hadrian’s Villa involving the tower known as Roccabruna at the western end of the villa. Originally a tower-like structure topped by a round temple, Roccabruna today consists of its massive, well-preserved lower floor. The main entrance is located on the northwestern side, to the right, and gives access to a large circular hall covered by a dome. The dome is punctuated by an odd feature: five conduits that are wider on the outside than on the inside (figure 1).

What is the function of these unusual conduits? They have no known parallel in Roman architecture. Asking themselves this same question, the American architects Robert Mangurian and Mary Ann Ray went to Roccabruna at sunset on June 21, 1988, the day of the summer solstice, and discovered the extraordinary light phenomena that occur there. At sunset the sun enters through the main door and illuminates the niche on the opposite side, something that happens on most summer days. But only in the days around the summer solstice does the sun also penetrate the conduit located above that door: its rays emerge from the slot inside the dome and project a rectangular blade of light onto the opposite side of the dome. In June 2009, De Franceschini verified the findings of Mangurian and Ray. However, she and Veneziano knew that the apparent path of the sun through the sky changes slightly from year to year, so that over the nearly 1880 years separating us from Hadrian, the precise effect of the alignment has been lost. As they noted, only a computer simulation can recreate the original experience of being in the lower sanctuary at Roccabruna at sunset on the summer solstice during the reign of Hadrian.
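The size of that drift can be estimated roughly. The sketch below is an illustration, not the project's method: it assumes the IAU 1980 polynomial for the mean obliquity of the ecliptic (adequate over a couple of millennia, though far less exact than Horizons), a flat geometric horizon with no refraction, and an approximate latitude of 41.94° N for the villa. At the June solstice the sun's declination equals the obliquity, so the sunset azimuth follows from cos A = sin δ / cos φ.

```python
import math

def mean_obliquity_deg(year: float) -> float:
    """Mean obliquity of the ecliptic in degrees (IAU 1980 polynomial).

    T is Julian centuries from J2000.0, approximated here from a decimal year,
    which is precise enough for a comparison across centuries.
    """
    t = (year - 2000.0) / 100.0
    arcsec = 84381.448 - 46.8150 * t - 0.00059 * t**2 + 0.001813 * t**3
    return arcsec / 3600.0

def solstice_sunset_azimuth_deg(year: float, lat_deg: float) -> float:
    """Sunset azimuth (degrees east of north) on the June solstice.

    Assumes a flat horizon and ignores refraction and the sun's semi-diameter.
    """
    dec = math.radians(mean_obliquity_deg(year))  # solstice declination = obliquity
    lat = math.radians(lat_deg)
    sunrise = math.degrees(math.acos(math.sin(dec) / math.cos(lat)))
    return 360.0 - sunrise  # sunset mirrors sunrise about the north-south line

VILLA_LAT = 41.94  # approximate latitude of Hadrian's Villa (assumption)

for yr in (130, 2009):
    print(yr, round(mean_obliquity_deg(yr), 3),
          round(solstice_sunset_azimuth_deg(yr, VILLA_LAT), 2))
```

Under these assumptions the solstice sunset in Hadrian's day lay a few tenths of a degree further north than in 2009, a sizeable fraction of the sun's apparent diameter (about half a degree) and enough to shift a narrow light effect like the one at Roccabruna.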

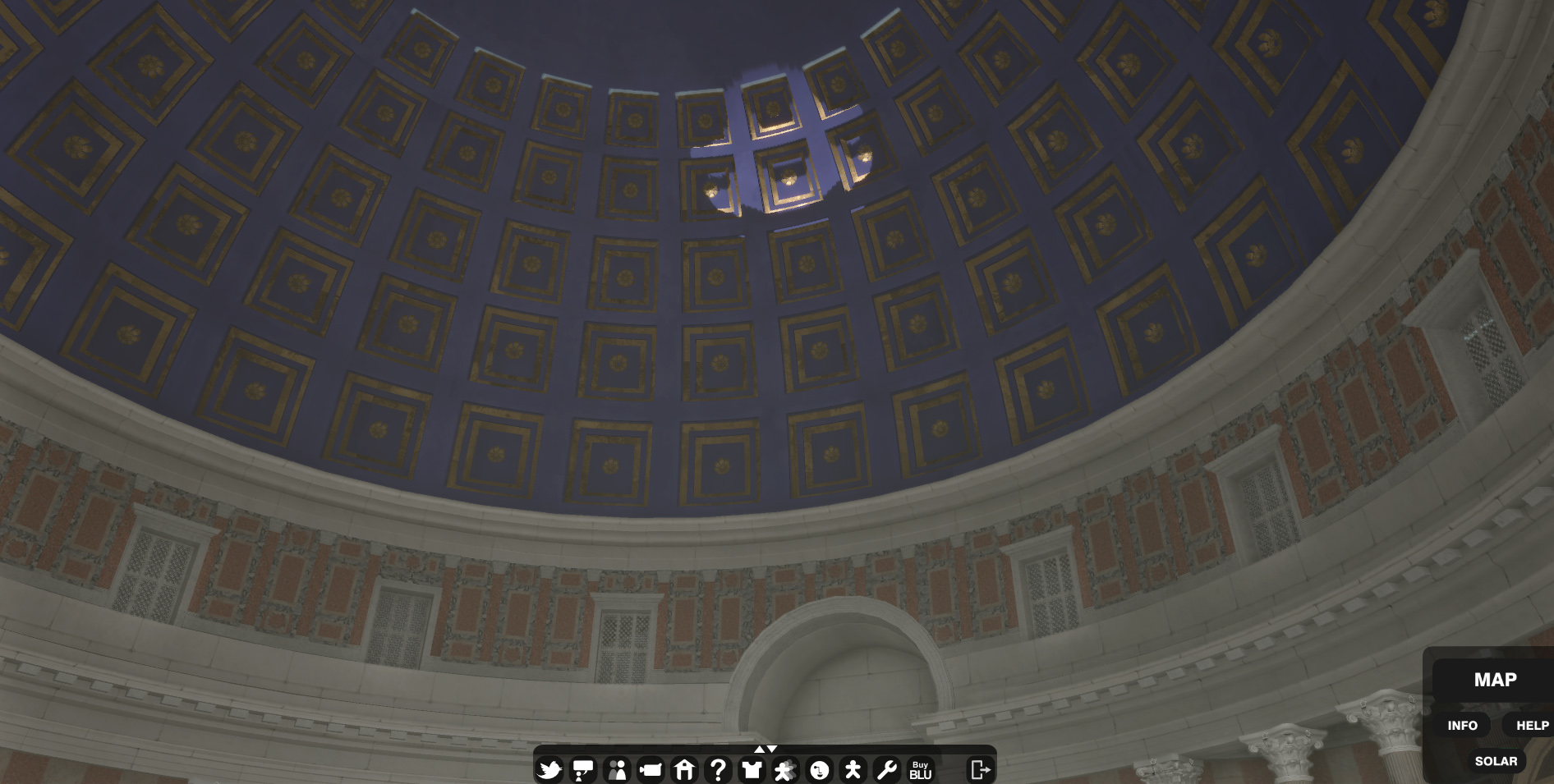

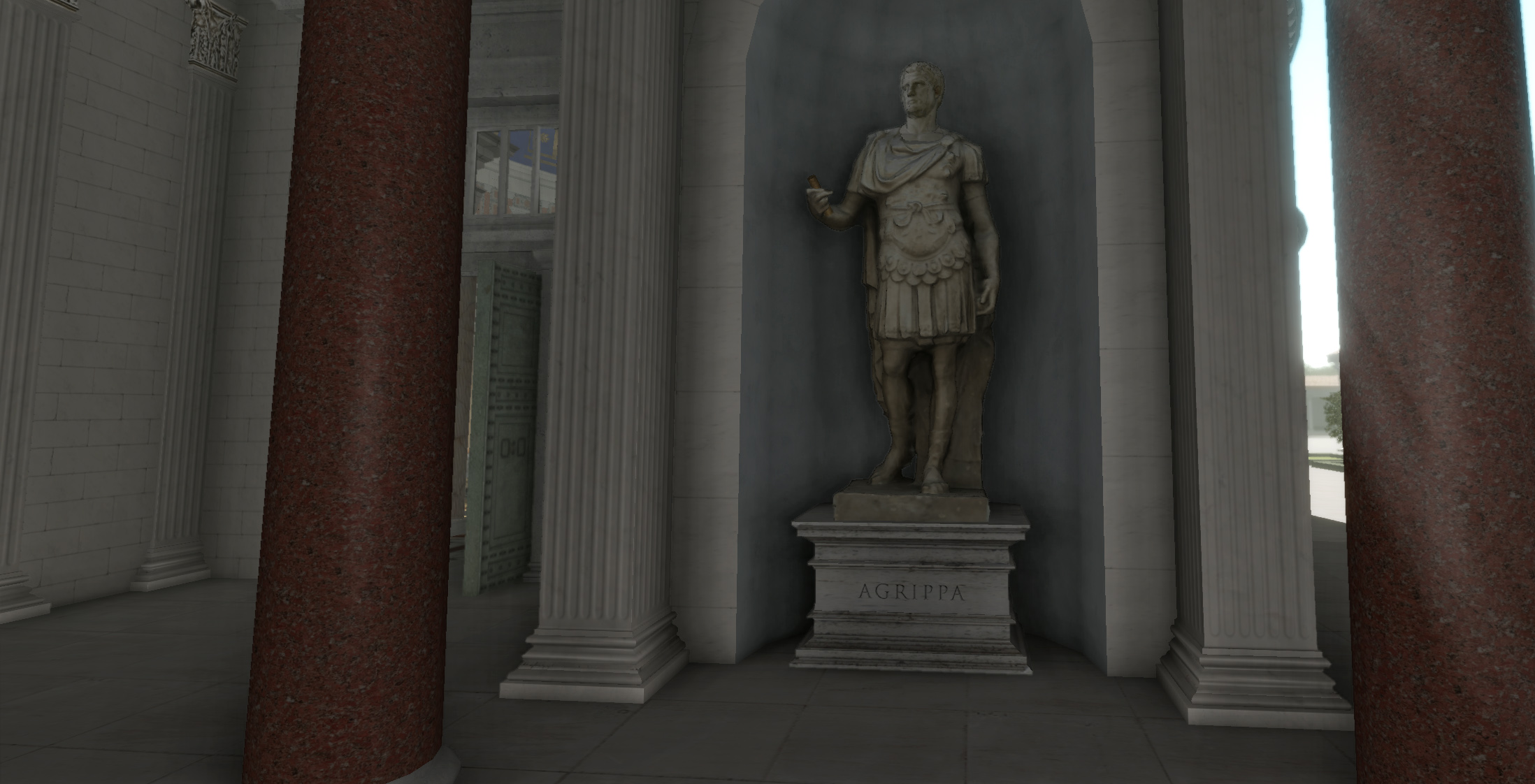

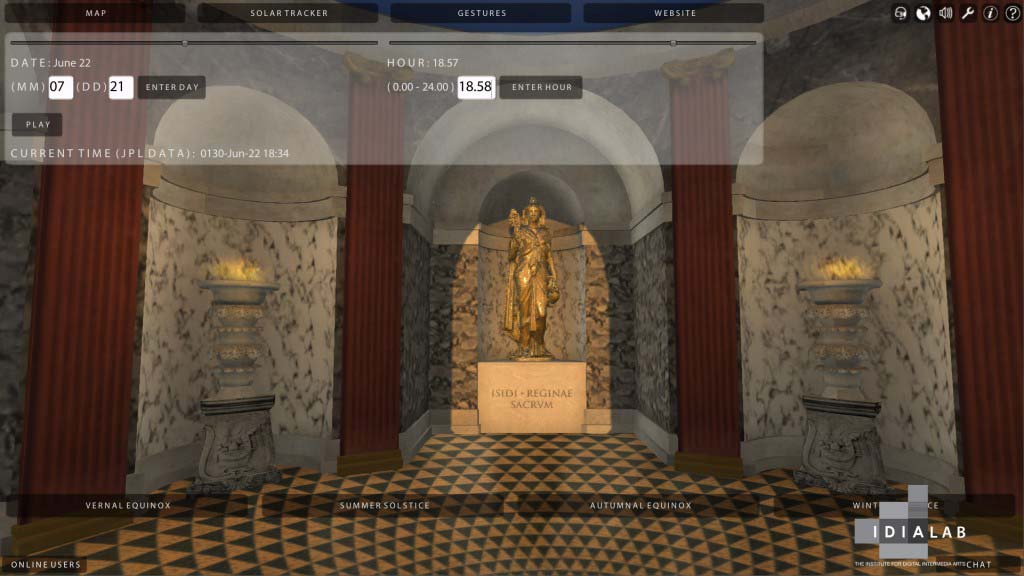

Once we had our 3D model of the site, we were able to obtain from NASA’s Horizons system[4] the correct azimuthal data for the year AD 130 and place the sun in the sky at sunset on the summer solstice. Following the lead of De Franceschini, who in the meantime had become a consultant to our project, we placed in the niche one of the four statues of the Egyptian sky goddess Isis that were found at the Villa. De Franceschini chose Isis for several reasons. First, there is no question that the niche held a statue, so something had to be placed there; second, the two flanking niches held candelabra whose surviving bases are decorated with Isiac iconography. Moreover, Isis’ festival in Rome fell on the summer solstice. So we scanned and digitally restored one of the statues of Isis from the villa and placed it in the central niche. Finally, for the dome, which surviving paint shows was blue and which therefore bore the famous “dome of heaven” motif (Lehmann 1945), we followed De Franceschini in restoring a zodiac arranged so that the sign of Gemini is over the statue niche, since the last day of Gemini is the summer solstice. Our zodiac is adapted from the great Sun God mosaic in the Rheinisches Landesmuseum in Bonn, which kindly gave us permission to use it.
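The reasoning behind placing Gemini over the niche can be made explicit. In the tropical zodiac each sign spans 30° of the sun's ecliptic longitude, counted from the vernal equinox, and the summer solstice is the moment the sun reaches 90°, the boundary where Gemini ends and Cancer begins. A minimal sketch, assuming those conventional tropical boundaries (the helper is illustrative and not part of the project):

```python
SIGNS = [
    "Aries", "Taurus", "Gemini", "Cancer", "Leo", "Virgo",
    "Libra", "Scorpio", "Sagittarius", "Capricorn", "Aquarius", "Pisces",
]

def tropical_sign(longitude_deg: float) -> str:
    """Tropical zodiac sign for a solar ecliptic longitude in degrees.

    Each sign covers 30 degrees starting at the vernal equinox (0 degrees),
    so the June solstice (90 degrees) is where Gemini hands over to Cancer.
    """
    return SIGNS[int((longitude_deg % 360.0) // 30.0)]

print(tropical_sign(89.9))  # Gemini: the last moments before the solstice
print(tropical_sign(90.0))  # Cancer: the solstice itself opens the next sign
```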

As can be seen in figure 2, when we restored the sun to its correct position in the sky dome for sunset on the summer solstice (June 21) of 130 CE in our 3DS Max model of Roccabruna, the sunlight coming through the main doorway illuminated the statue of Isis in the statue niche, and the light entering through the conduit lit up the sign of Gemini painted on the cupola. We were thus able to confirm the Mangurian-Ray thesis.

The approach we took in our Roccabruna project is deductive: Mangurian and Ray noted the strange conduits punctuating the cupola of Roccabruna and hypothesized a solar alignment. De Franceschini and Veneziano agreed and, for various reasons we need not go into today, placed a statue of Isis in the statue niche. We set up the conditions under which these hypotheses could be tested and were able to verify them.

Surely, if there is one such alignment at the villa of the same emperor who was responsible for the Pantheon, there may be others. But the villa is very big, covering over 100 hectares, and has 30 major building complexes, most of them larger than Roccabruna. Moreover, such alignments could just as easily involve other celestial bodies such as the Moon and the planets. Faced with this level of complexity, the best way forward in searching for new alignments is clearly inductive and empirical. This is one reason why we asked the Institute for Digital Intermedia Arts (IDIA Lab) of Ball State University to create a multi-user virtual world based in Unity 3D from our 3DS Max model.

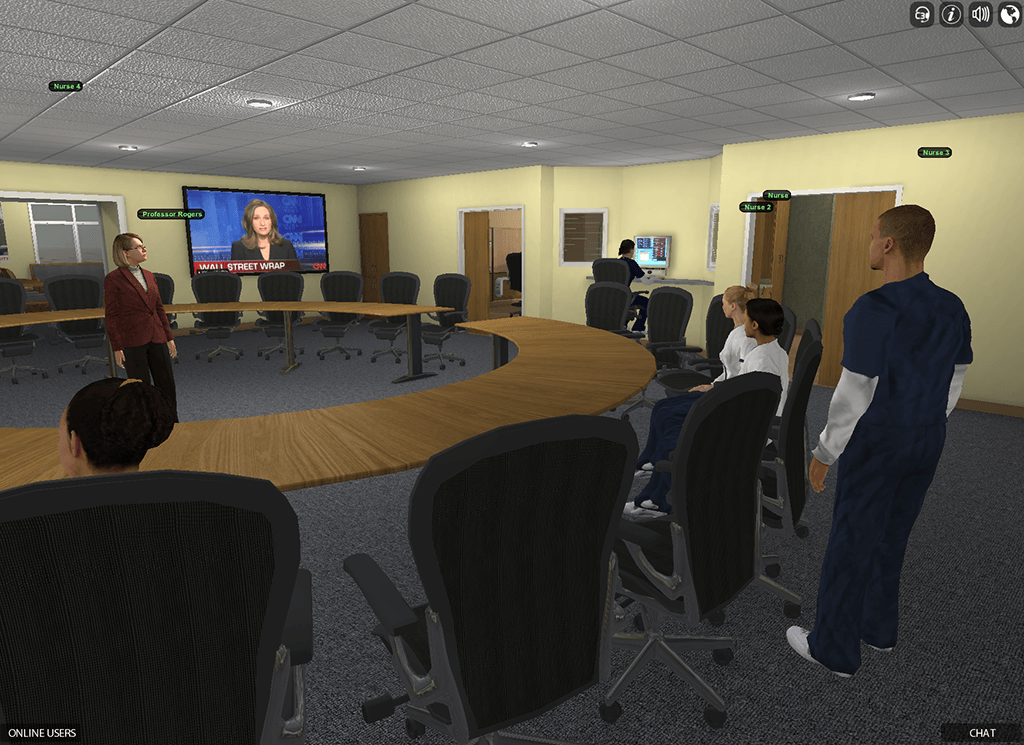

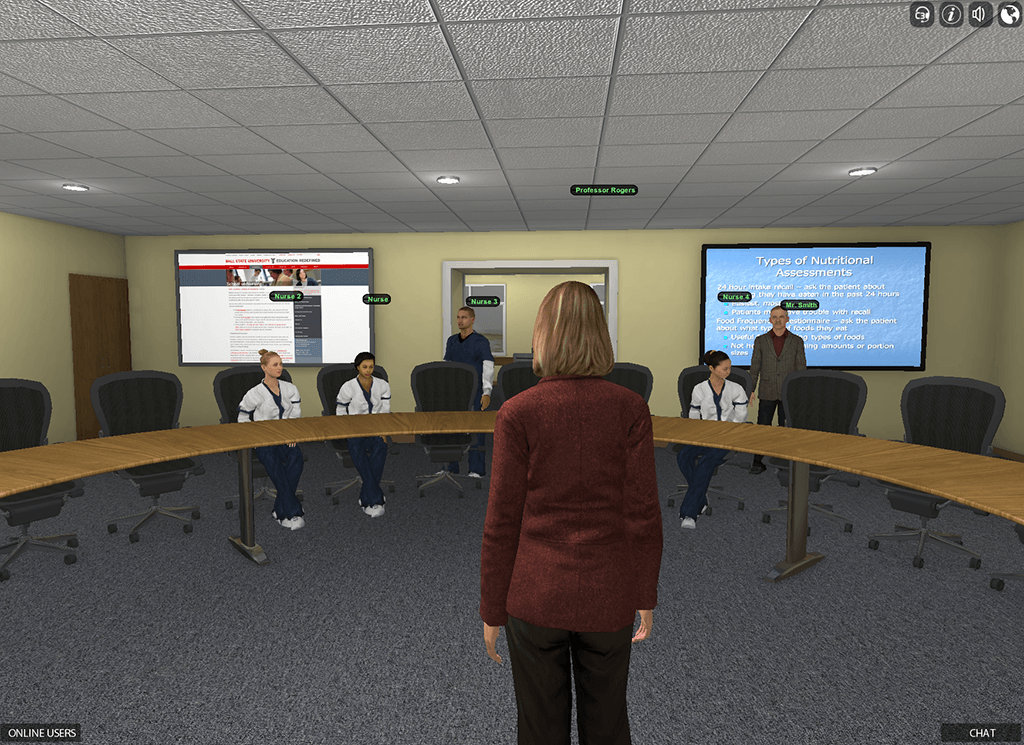

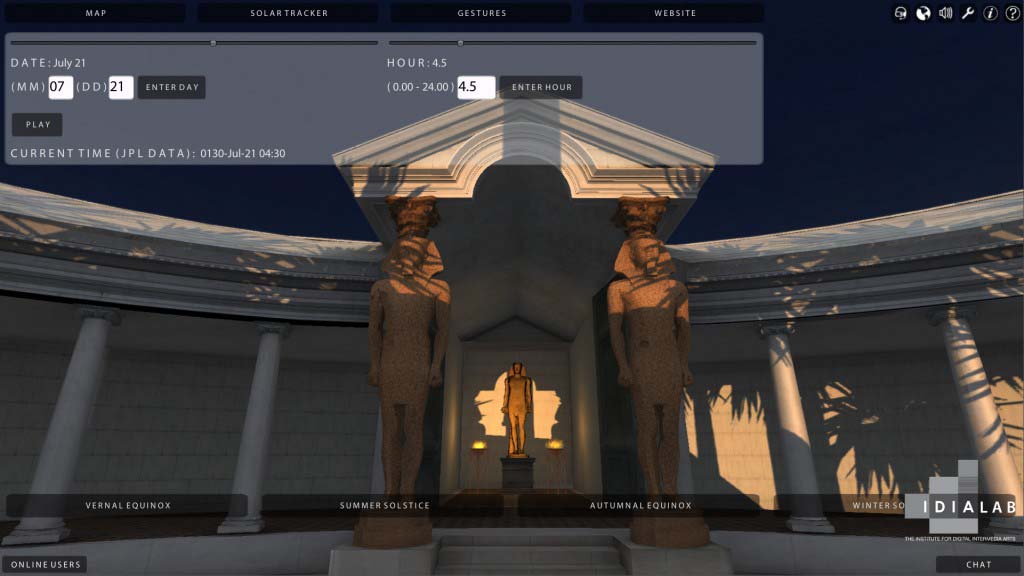

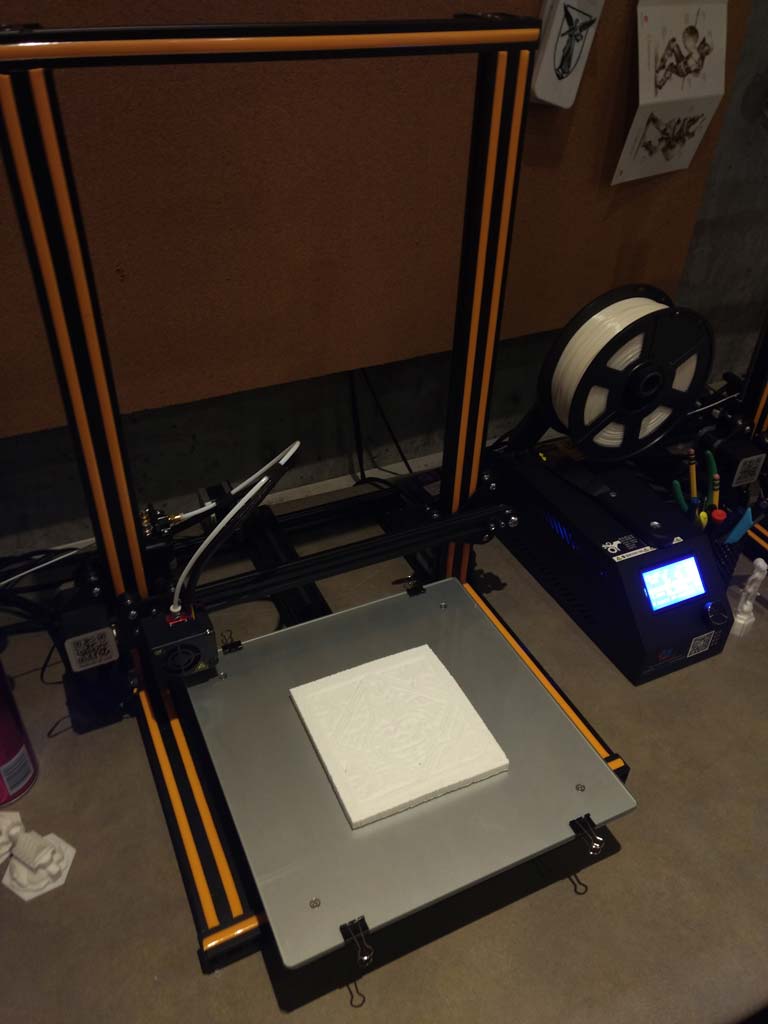

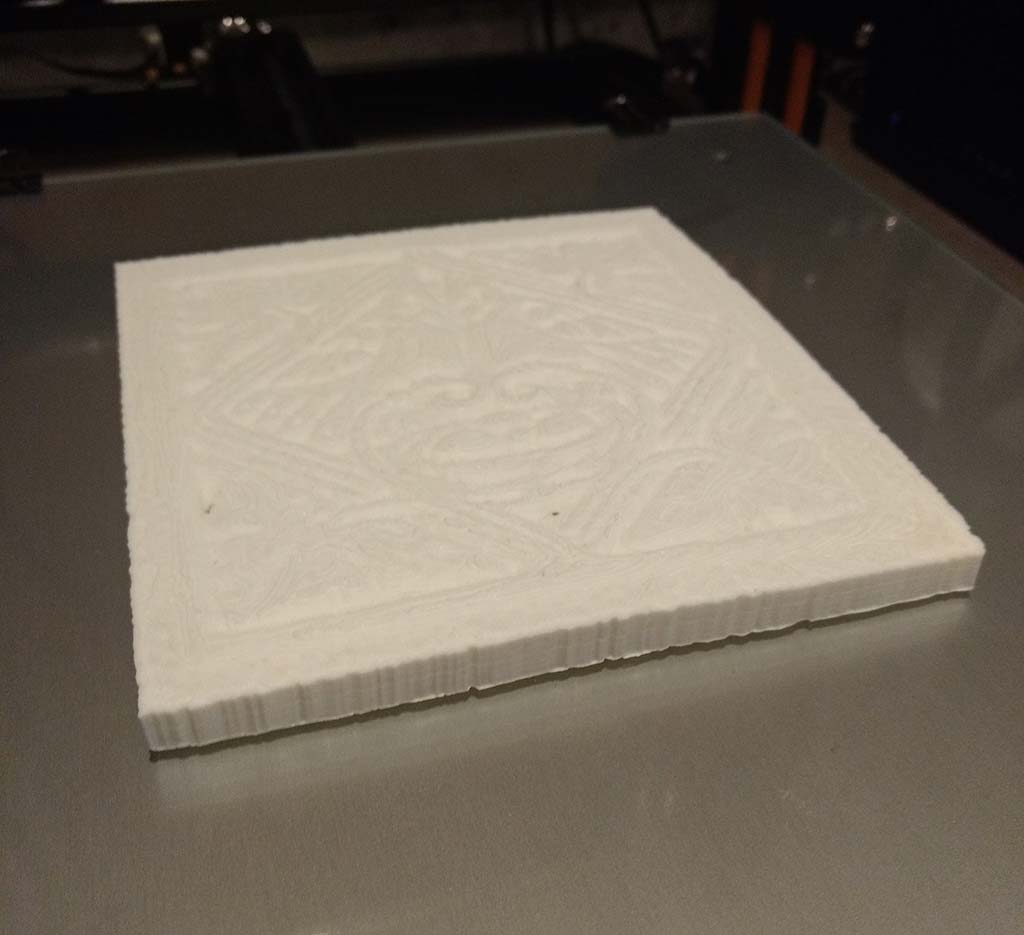

Virtually interpreting a site on the scope and scale of Hadrian’s Villa was a daunting project, engaging layers of scholarly, technical, and pedagogical challenges. The technical challenges were many, foremost among them turning the Unity 3D game engine into an effective multi-user, avatar-based virtual world. An important requirement was an environment that was straightforward to use and accessible through standard web browsers on both Mac and Windows, so we selected Unity 3D as the starting point for developing the platform. We required specific back-end administration tools to handle accounts and the server-side aspects of the project; for this we relied on SmartFoxServer, which integrates well with Unity 3D. Our team took an approach that bridged and integrated disparate technologies, creating a robust virtual world platform to immersively augment both instructional and PBL processes. VW features available to the learning community included text-based communication, a live map showing current visitor positions, map-based teleportation, a managed voice channel, user-selected avatar gestures, a list of online users, paradata, photographs of the extant site, plan views, and integrated web links.
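Two of those features, the live map of visitor positions and map-based teleportation, both hinge on converting between the 2D map image and the ground plane of the 3D world. A minimal sketch of that conversion, assuming a north-up map with a known world-space bounding box and a ground plane spanned by X and Z (Unity's Y axis is vertical) with Z pointing north; the class, its fields, and the example extents are hypothetical and do not come from the project's code:

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class MapProjection:
    """Hypothetical linear mapping between a north-up map image and world X/Z."""
    world_min_x: float
    world_min_z: float
    world_max_x: float
    world_max_z: float
    image_width: int
    image_height: int

    def world_to_pixel(self, x: float, z: float) -> tuple[float, float]:
        """Place an avatar's world position on the live map."""
        u = (x - self.world_min_x) / (self.world_max_x - self.world_min_x)
        v = (z - self.world_min_z) / (self.world_max_z - self.world_min_z)
        # Image rows grow downward, while world Z (north) grows upward.
        return u * self.image_width, (1.0 - v) * self.image_height

    def pixel_to_world(self, px: float, py: float) -> tuple[float, float]:
        """Turn a click on the map into a teleport target on the ground plane."""
        u = px / self.image_width
        v = 1.0 - py / self.image_height
        x = self.world_min_x + u * (self.world_max_x - self.world_min_x)
        z = self.world_min_z + v * (self.world_max_z - self.world_min_z)
        return x, z

site_map = MapProjection(-1000.0, -800.0, 1000.0, 800.0, 1024, 819)
px, py = site_map.world_to_pixel(250.0, -100.0)
print(px, py, site_map.pixel_to_world(px, py))
```

A teleport target produced this way would still need a ground height, for example by sampling the terrain at the returned X/Z position.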

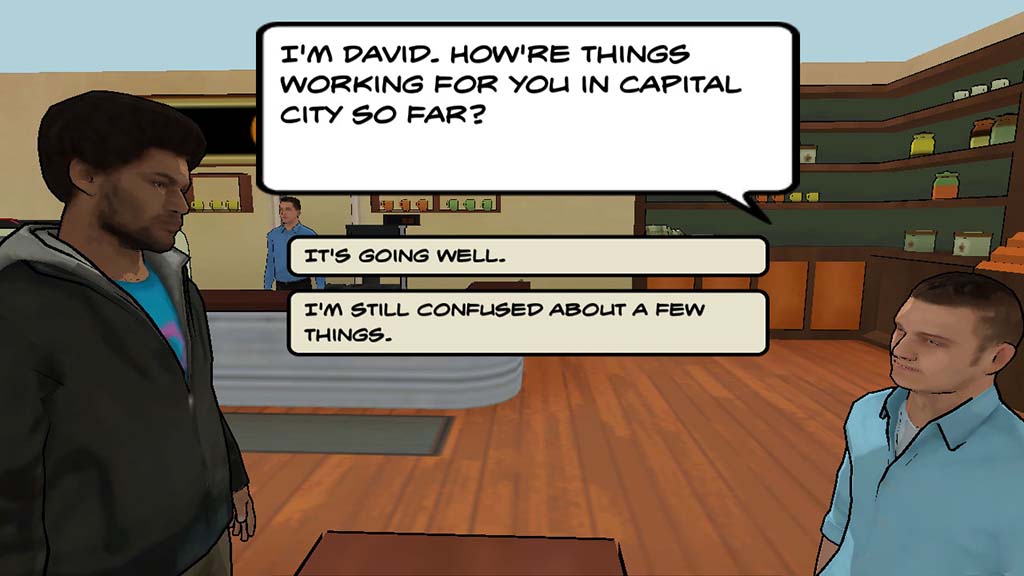

Key to the project was a varied system of avatars representing the imperial court, freemen, senators, scholars, soldiers, and slaves to the emperor. The avatar system served several important functions, testing recent scholarly interpretations of circulation throughout the villa and of the use of various spaces for typical court activities such as meals, imperial audiences, bathing, and worship. Upon entering the simulation, the choice of avatar determined one’s social standing within the role-play of the world.

A gesture system created via motion capture provided each user with a unique set of actions and gestural responses for social interaction, including greetings, bowing, and gestures specific to rank and class. Communication was also a critical element in the modes of problem-based learning engaged in by the participants in the simulation. Specific technologies provided varied capabilities, such as public chat, private instant messaging, and live multi-user voice channels.

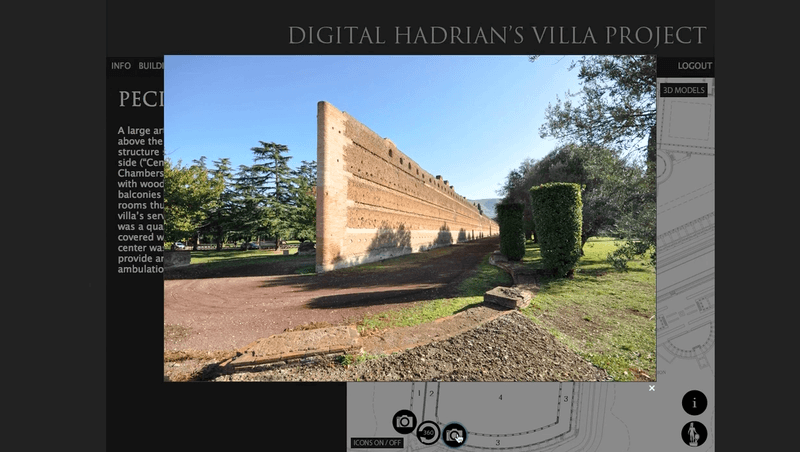

A companion website was co-developed and integrated into the VW environment, providing learners with visual assets such as photographs and panoramas of the current site, site plans, elevations, and video interviews with Villa scholars. We also developed three-dimensional turntables of the interpreted and reconstructed models, overview information on each of the major Villa features, a bibliography, and an expansive database of art attributed to the Villa site. Learners can access this information directly from within the virtual world. The development team integrated the notion of paradata, introduced by the London Charter, making the scholarship behind every element of the 3D model (from terrain to buildings, furnishings, costumes, and human behavior) instantly transparent.
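One way to picture paradata in practice is as a record attached to each model element stating what survives, how the gaps were filled, and on whose authority. A minimal sketch with a hypothetical record layout (the London Charter describes the principle, not a data format); the example entry restates the Roccabruna dome reasoning given earlier in this text:

```python
from dataclasses import dataclass, field

@dataclass
class ParadataRecord:
    """Hypothetical paradata entry for one element of the 3D model."""
    element: str                  # the modelled feature being documented
    evidence: list[str]           # what survives on site or in the record
    interpretation: str           # how the restoration fills the gaps
    sources: list[str] = field(default_factory=list)  # bibliography keys

    def summary(self) -> str:
        """One-line explanation a learner could open from inside the world."""
        found = ", ".join(self.evidence) or "none recorded"
        cited = "; ".join(self.sources) or "no published source"
        return f"{self.element}: {self.interpretation} (evidence: {found}; sources: {cited})"

dome = ParadataRecord(
    element="Roccabruna cupola",
    evidence=["surviving blue paint"],
    interpretation="restored as a 'dome of heaven' with Gemini above the statue niche",
    sources=["Lehmann 1945", "De Franceschini and Veneziano 2011"],
)
print(dome.summary())
```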

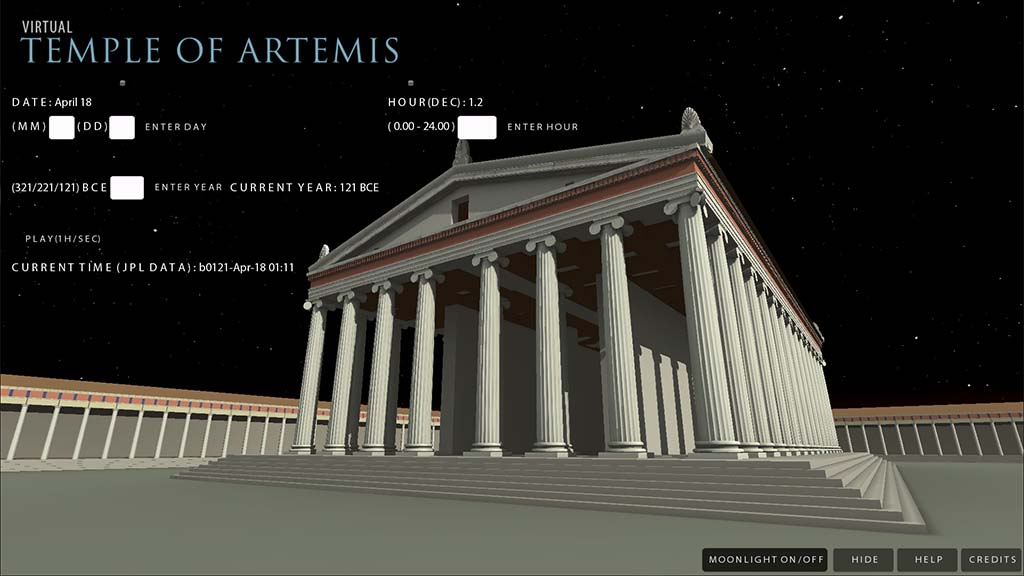

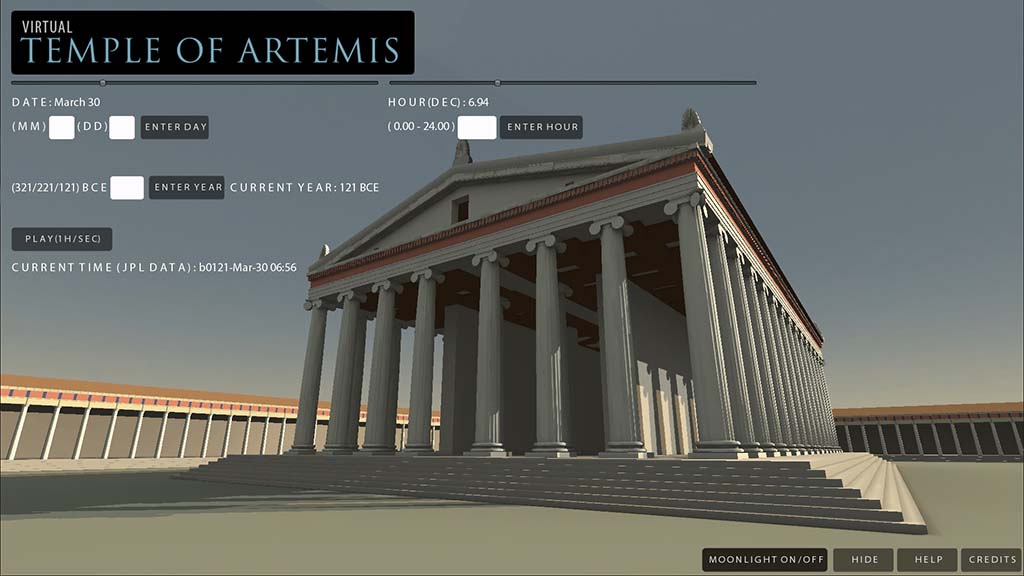

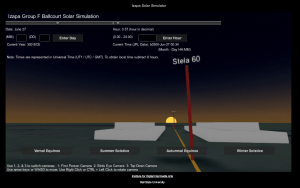

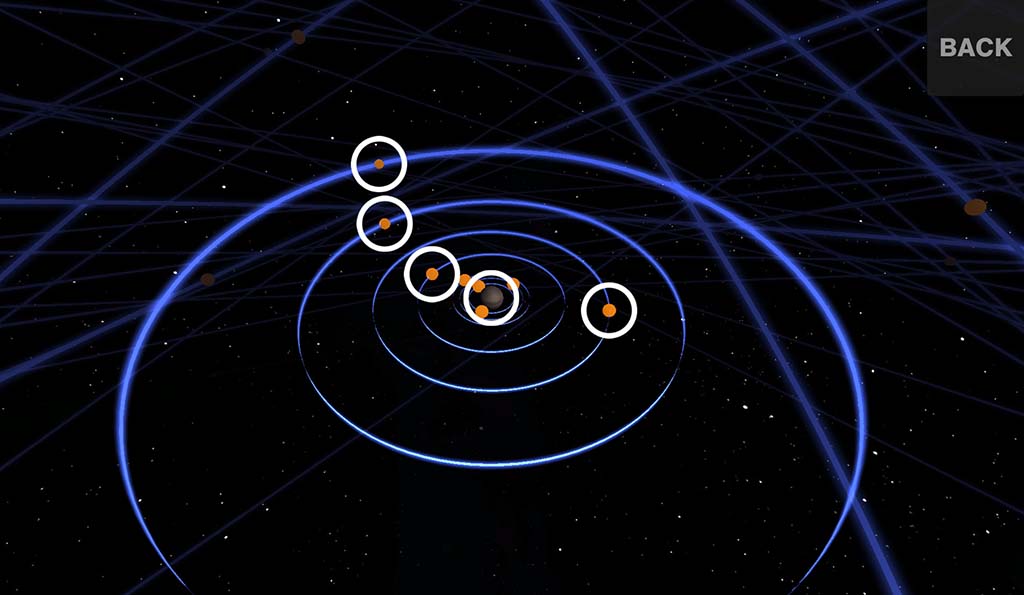

In support of the new research theme on celestial alignments by consultants De Franceschini and Veneziano, a major goal for the project was to develop an accurate simulation of the position of the sun. The solar tracking tool, or virtual heliodon, that we created in response to this research was envisioned as a bridge between the virtual environment and an external database calculating solar positions. After investigating existing tools, we decided to use the Horizons database, created by NASA’s Jet Propulsion Laboratory as an online solar system data computation service that tracks celestial bodies in ephemerides from 9999 BCE to 9999 CE. Where we wanted to investigate potentially significant solar alignments, we entered the latitude, longitude, and altitude of specific buildings at the Tivoli site to poll the Horizons data for the year 130 CE. Users were able to change the date and time of day and quickly play the sun forward from specific moments through the user interface. The system was correlated with both the Julian and Gregorian calendars and contained presets for the vernal and autumnal equinoxes as well as the summer and winter solstices.
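The round trip to Horizons can be sketched in two steps: convert a calendar date (Julian or Gregorian) to a Julian Day number, then build an observer-ephemeris request for a site at the villa. This is a minimal sketch, not the project's code. The date conversion is the standard algorithm from Meeus's *Astronomical Algorithms*; the request parameters (`COMMAND`, `EPHEM_TYPE`, `CENTER='coord@399'`, `COORD_TYPE`, `SITE_COORD`, `QUANTITIES='4'` for apparent azimuth and elevation) follow the public Horizons web API as we understand it and should be checked against its documentation. The site coordinates are approximate placeholders.

```python
import math
from urllib.parse import urlencode

HORIZONS_API = "https://ssd.jpl.nasa.gov/api/horizons.api"

def julian_day(year: int, month: int, day: float, gregorian: bool) -> float:
    """Julian Day for a calendar date (Meeus, Astronomical Algorithms, ch. 7).

    `day` may include a fraction of a day (21.5 is noon UT). Pass
    gregorian=False for dates in the Julian calendar used in Hadrian's time.
    """
    if month <= 2:                  # January and February count as months 13 and 14
        year -= 1
        month += 12
    b = 0
    if gregorian:                   # correction for the skipped Gregorian leap days
        a = year // 100
        b = 2 - a + a // 4
    return (math.floor(365.25 * (year + 4716)) + math.floor(30.6001 * (month + 1))
            + day + b - 1524.5)

def jd_to_gregorian(jd: float) -> tuple[int, int, float]:
    """Proleptic Gregorian (year, month, day) for a Julian Day (Meeus, ch. 7)."""
    z = math.floor(jd + 0.5)
    f = jd + 0.5 - z
    alpha = math.floor((z - 1867216.25) / 36524.25)
    a = z + 1 + alpha - alpha // 4  # always apply the Gregorian correction
    b = a + 1524
    c = math.floor((b - 122.1) / 365.25)
    d = math.floor(365.25 * c)
    e = math.floor((b - d) / 30.6001)
    day = b - d - math.floor(30.6001 * e) + f
    month = e - 1 if e < 14 else e - 13
    year = c - 4716 if month > 2 else c - 4715
    return year, month, day

def horizons_sun_query(lon_east: float, lat: float, alt_km: float,
                       start_jd: float, stop_jd: float, step: str = "10m") -> str:
    """Build (but do not send) a Horizons request for the Sun's azimuth/elevation."""
    params = {
        "format": "text",
        "COMMAND": "'10'",          # target body 10 is the Sun
        "EPHEM_TYPE": "'OBSERVER'",
        "CENTER": "'coord@399'",    # user-defined observing site on Earth (399)
        "COORD_TYPE": "'GEODETIC'",
        "SITE_COORD": f"'{lon_east},{lat},{alt_km}'",  # E longitude, latitude, km
        "START_TIME": f"'JD {start_jd}'",
        "STOP_TIME": f"'JD {stop_jd}'",
        "STEP_SIZE": f"'{step}'",
        "QUANTITIES": "'4'",        # apparent azimuth and elevation
    }
    return HORIZONS_API + "?" + urlencode(params)

# Julian-calendar 21 June 130 at 0h UT, and the same day in the proleptic Gregorian calendar.
jd = julian_day(130, 6, 21.0, gregorian=False)
print(jd, jd_to_gregorian(jd))
# Placeholder coordinates near the villa; surveyed building positions would replace these.
print(horizons_sun_query(12.77, 41.94, 0.07, jd, jd + 1.0))
```

In the second century the two calendars differ by one day, so Julian 21 June 130 corresponds to proleptic Gregorian 20 June 130. Passing a Julian Day as the start time avoids any ambiguity about which calendar a date refers to; fetching the URL (for instance with `urllib.request.urlopen`) should return a plain-text observer table.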

These tools allowed for the rapid discovery of potential alignments that might merit further investigation. The solar feature allows one to proceed empirically, in effect turning the clock back to 130 CE and running experiments in which the days and hours of the year are sped up by orders of magnitude, so that one can very quickly find candidate alignments not yet hypothesized by scholars working in the traditional way of Mangurian and Ray.
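The sped-up search can be illustrated with a deliberately simple model. The sketch steps through every day of a year, estimates the sun's declination with the low-precision solar formulas published in the Astronomical Almanac, and flags days on which the sunrise azimuth falls within a tolerance of a given architectural axis. It sweeps days only (sunrise azimuth depends on declination, not clock time), and it assumes a flat horizon, no refraction, and a hypothetical axis bearing. The low-precision formulas are designed for recent centuries, so for 130 CE they give only a coarse first pass; any candidate would still need to be checked against Horizons, as the project did.

```python
import math

def solar_declination_deg(jd: float) -> float:
    """Low-precision solar declination in degrees (Astronomical Almanac formulas)."""
    n = jd - 2451545.0                                # days from J2000.0
    mean_lon = (280.460 + 0.9856474 * n) % 360.0      # mean longitude of the sun
    anomaly = math.radians((357.528 + 0.9856003 * n) % 360.0)
    ecl_lon = math.radians(mean_lon + 1.915 * math.sin(anomaly)
                           + 0.020 * math.sin(2.0 * anomaly))
    obliquity = math.radians(23.439 - 0.0000004 * n)
    return math.degrees(math.asin(math.sin(obliquity) * math.sin(ecl_lon)))

def sunrise_azimuth_deg(dec_deg: float, lat_deg: float) -> float:
    """Sunrise azimuth east of north for a flat horizon, ignoring refraction."""
    ratio = math.sin(math.radians(dec_deg)) / math.cos(math.radians(lat_deg))
    return math.degrees(math.acos(max(-1.0, min(1.0, ratio))))

def alignment_candidates(start_jd: float, axis_azimuth_deg: float, lat_deg: float,
                         tolerance_deg: float = 0.5,
                         days: int = 365) -> list[tuple[int, float]]:
    """Step one day at a time and keep days whose sunrise lines up with an axis."""
    hits = []
    for offset in range(days):
        dec = solar_declination_deg(start_jd + offset)  # once per day is enough here
        azimuth = sunrise_azimuth_deg(dec, lat_deg)
        if abs(azimuth - axis_azimuth_deg) <= tolerance_deg:
            hits.append((offset, round(azimuth, 2)))
    return hits

# JD of 1 January 130 (Julian calendar), 0h UT, from the standard Meeus conversion.
START_130 = 1768540.5
# Hypothetical axis bearing: 60 degrees east of north, at roughly 41.94 N.
for day_offset, azimuth in alignment_candidates(START_130, 60.0, 41.94):
    print(day_offset, azimuth)
```

An axis pointing north of east is reached twice a year, once before and once after the summer solstice, which is why a sweep like this typically returns two clusters of days rather than one.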

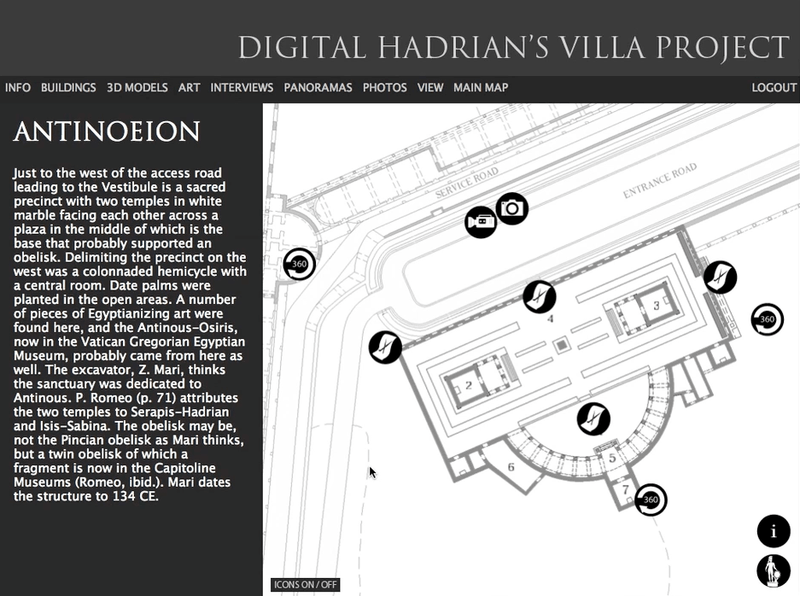

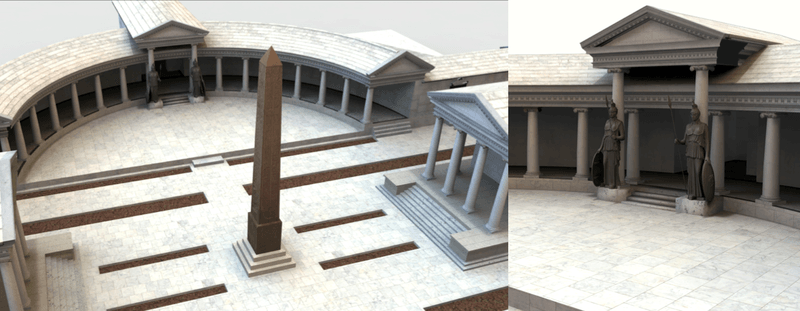

As developers, our goal was to create the solar tool and let students and scholars use it to undertake their own empirical research. We did not intend to engage in this research ourselves, yet in the process of working within the environment daily we quickly began to notice curious solar phenomena. In a brief empirical study of the very first component of the site we installed in the simulation, the Antinoeion, or newly discovered Temple of the Divine Antinous, we noticed an alignment of potential interest. At first glance, the most likely alignment seemed to be along the main axis running from the entrance, through the obelisk in the central plaza, to the statue niche at the end of the axis. We ran through the days and hours of the year and found that the sun and the shadow of the obelisk align at sunrise on July 20. We consulted our expert on the Egyptian calendar in the Roman period, Professor Christian Leitz of the University of Tuebingen, and he confirmed that this date has religious significance. It is, in fact, the date of the Egyptian New Year, as the Romans of Hadrian’s age clearly knew (cf. the Roman writer Censorinus, who states that the Egyptian New Year’s Day fell on July 20 in the Julian calendar in 139 CE, when it coincided with a heliacal rising of Sirius in Egypt).

In developing and then using the simulation tools we created for archaeoastronomical research, we have concluded that virtual world technologies can indeed take the search for significant built-celestial alignments to a new level of insight.

Bibliography

Chiappetta, F. 2008. I percorsi antichi di Villa Adriana (Rome).

De Franceschini, M. and G. Veneziano. 2011. Villa Adriana. Architettura celeste. I segreti dei solstizi (Rome).

Hannah, R. 2008. Time in Antiquity (London).

Hannah, R. 2011. “The Role of the Sun in the Pantheon’s Design and Meaning,” Numen 58: 486-513.

Kandel, E. 2007. In Search of Memory: The Emergence of a New Science of Mind (W. W. Norton, New York). Kindle edition.

Lehmann, K. 1945. “The Dome of Heaven,” Art Bulletin 27: 1-27.

Lugli, G. 1940. “La Roccabruna di Villa Adriana,” Palladio 4: 257-274.

Mangurian, R. and M.A. Ray. 2008. “Re-drawing Hadrian’s Villa,” Yale Architectural Journal, 113-116.

Mari, Z. 2010. “Villa Adriana. Recenti scoperte e stato della ricerca,” Ephemeris Napocensis 20: 7-37.

Ytterberg, M. 2005. “The Perambulations of Hadrian. A Walk through Hadrian’s Villa,” Ph.D. dissertation, University of Pennsylvania.

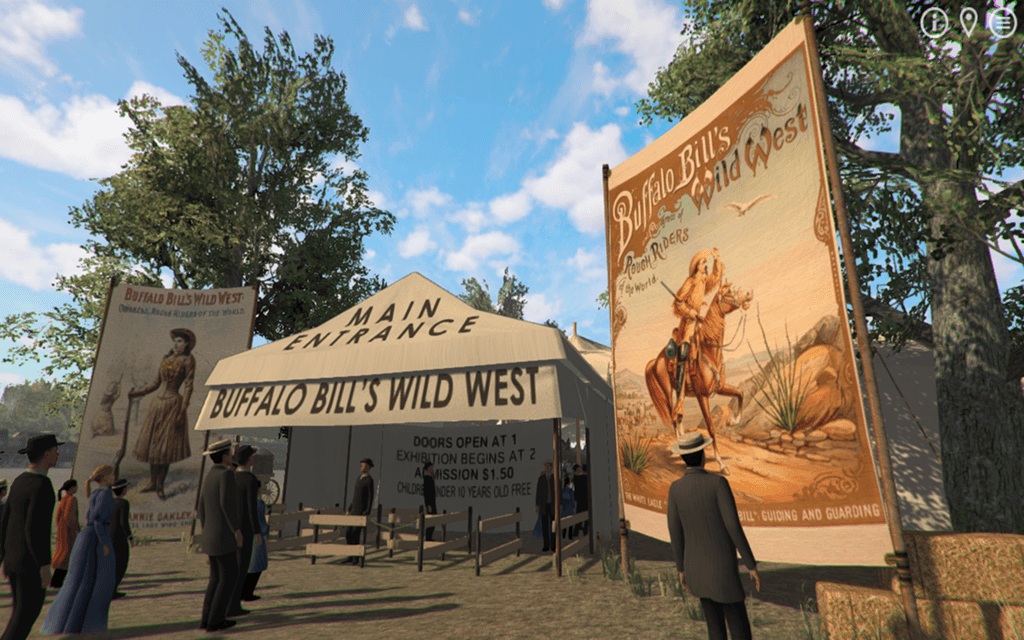

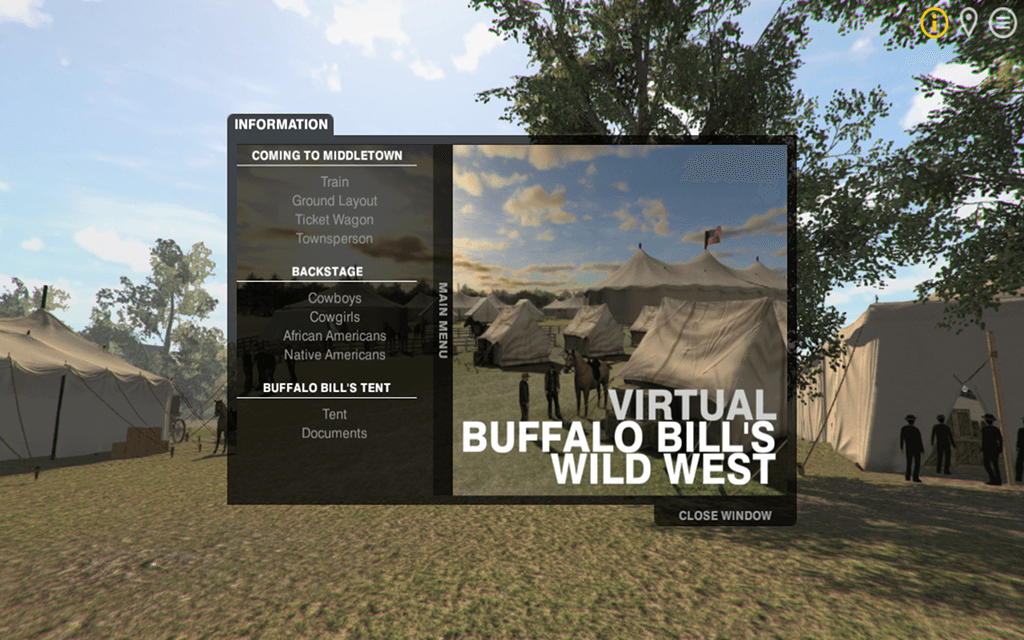

